Supercharge your Amazon Bedrock agents with Tavily search

Follow this practical guide to learn how to empower your agentic AI built on Amazon Bedrock with real-time web search results.

Amazon Bedrock

Tavily

LangChain

Python

As AI engineers, we’ve all faced the same frustrating limitation: our LLMs are frozen in time, unable to access current information or verify recent facts. Whether it’s hallucinations on complex queries or simply being unaware of events that happened after their training cutoff, these limitations can severely restrict the practical applications of our AI agents.

I recently discovered a game-changing solution that transformed how we build AI agents. After struggling with traditional search APIs in our production environment, we switched to Tavily — a search engine built specifically for LLMs — and the results were remarkable. The integration was so successful that I want to share our experience.

In this guide, I’ll walk you through exactly how to supercharge your Amazon Bedrock agents with Tavily web search, complete with practical examples and lessons learned from production deployment.

The problem: why your LLMs need real-time data access

Before diving into the solution, let’s address the elephant in the room: why do even the most advanced LLMs struggle without web search capabilities?

The knowledge cutoff problem

Every LLM has a training cutoff date — a point in time after which it has no knowledge of world events. This means your AI agent can’t tell you today’s weather, current stock prices, recent product releases, or even who won last night’s game. Whether it’s breaking news, updated documentation, or simply checking if a service is currently online, LLMs are blind to anything that happened after their training. They’ll either admit they don’t know, or worse — confidently provide outdated information.

Hallucinations and uncertainty

When LLMs lack information, they don’t always admit it. Instead, they might:

- Generate plausible-sounding but incorrect information

- Mix outdated facts with speculation

- Provide confident answers based on patterns rather than facts

Why traditional search APIs fall short

You might think, “I’ll just integrate Google Search API.” We tried that. Here’s why it didn’t work:

- Raw, unstructured data: Traditional search APIs return HTML pages, snippets, and links — not clean, LLM-ready content.

- Ads and irrelevant content: Results cluttered with advertisements and SEO-optimized fluff.

- Complex parsing requirements: Significant engineering effort needed to extract useful information.

- Not optimized for AI: These APIs were built for human consumption, not machine reasoning.

Enter Tavily: search built for AI

Tavily isn’t just another search engine — it’s a search infrastructure designed from the ground up to feed LLMs. Here’s what makes it special:

Clean, structured output

Instead of messy HTML and links, Tavily returns clean, structured JSON perfectly formatted for LLM consumption. No parsing, no cleaning, just ready-to-use context.

AI-optimized relevance scoring

Each result comes with a relevance score, allowing your agent to assess source quality and make informed decisions about which information to trust.

Built-in LLM summary generation

Tavily can generate LLM-powered summaries of search results — and surprisingly, this feature doesn’t cost extra API credits!

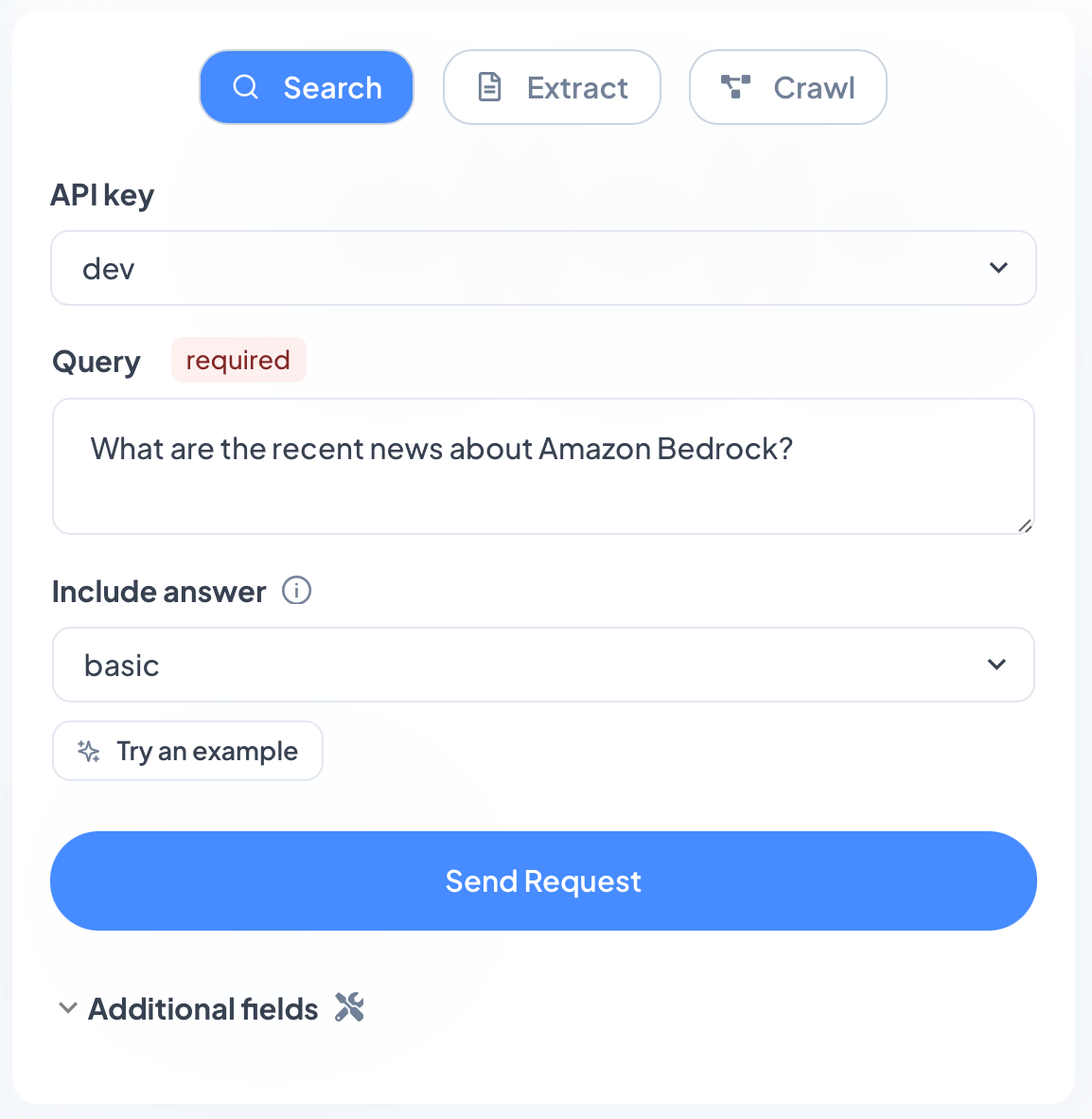

Hands-on: exploring the Tavily playground

Let’s start by exploring what Tavily can do through its interactive playground. This is the best way to understand its capabilities before writing any code.

Getting started

First, head to tavily.com and sign up for a free account (you get 1,000 API credits monthly — more than enough for experimentation).

Once logged in, you’ll see the API playground with three main modes:

- Search: The primary mode for web searching

- Extract: For pulling data from specific URLs

- Crawl: For systematically exploring websites

Your first search

Let’s try a practical example. In the search box, enter: “What are the recent news about Amazon Bedrock?”

Hit send, and you’ll see the results in a structured JSON format:

    {
      "query": "What are the recent news about Amazon Bedrock?",
      "follow_up_questions": null,
      "answer": "Amazon Bedrock now offers access to advanced foundation models from OpenAI and includes new guardrails for responsible AI implementation.",
      "images": [],
      "results": [
        {
          "url": "https://aws.amazon.com/blogs/aws/category/featured/",
          "title": "Featured | AWS News Blog",
          "content": "AWS continues to expand access to the most advanced foundation models with OpenAI open weight models now available in Amazon Bedrock and Amazon SageMaker",
          "score": 0.66229486,
          "raw_content": null
        },
        {
          "url": "https://aws.amazon.com/blogs/aws/author/danilop/",
          "title": "Danilo Poccia | AWS News Blog",
          "content": "AWS continues to expand access to the most advanced foundation models with OpenAI open weight models now available in Amazon Bedrock and Amazon SageMaker",
          "score": 0.6410185,
          "raw_content": null
        },
        {
          "url": "https://aws.amazon.com/blogs/aws/category/artificial-intelligence/amazon-machine-learning/amazon-bedrock/amazon-bedrock-guardrails/",
          "title": "Amazon Bedrock Guardrails | AWS News Blog",
          "content": "Amazon Bedrock Guardrails introduces enhanced capabilities to help enterprises implement responsible AI at scale, including multimodal toxicity detection, PII",
          "score": 0.6320719,
          "raw_content": null
        }
      ],
      "response_time": 1.59,
      "request_id": "3b3d9613-9a54-4ae4-b39d-378b66cac25c"
    }

Notice that the results:

- Are sorted by relevance score (0.0 to 1.0)

- Include clean, extracted content

- Provide source URLs for verification

- Are free from ads and clutter
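Because the response is plain JSON, turning it into prompt context takes only a few lines. Here is a minimal sketch that assumes the response shape shown in the playground output above (`results` entries with `url`, `title`, `content`, and `score`). The helper name `format_sources` is hypothetical and not part of any Tavily SDK.

```python
# Two results copied from the playground response above.
SAMPLE_RESPONSE = {
    "query": "What are the recent news about Amazon Bedrock?",
    "results": [
        {
            "url": "https://aws.amazon.com/blogs/aws/category/featured/",
            "title": "Featured | AWS News Blog",
            "content": "AWS continues to expand access to the most advanced "
                       "foundation models with OpenAI open weight models now "
                       "available in Amazon Bedrock and Amazon SageMaker",
            "score": 0.66229486,
        },
        {
            "url": "https://aws.amazon.com/blogs/aws/category/artificial-intelligence/"
                   "amazon-machine-learning/amazon-bedrock/amazon-bedrock-guardrails/",
            "title": "Amazon Bedrock Guardrails | AWS News Blog",
            "content": "Amazon Bedrock Guardrails introduces enhanced capabilities "
                       "to help enterprises implement responsible AI at scale, "
                       "including multimodal toxicity detection, PII",
            "score": 0.6320719,
        },
    ],
}


def format_sources(response: dict) -> str:
    """Render search results as a numbered, citation-friendly context block."""
    # Sort defensively by score (highest first) rather than relying on input order.
    results = sorted(
        response.get("results", []),
        key=lambda r: r.get("score", 0.0),
        reverse=True,
    )
    lines = []
    for index, result in enumerate(results, start=1):
        lines.append(
            f"[{index}] {result['title']} ({result['url']}) "
            f"score={result.get('score', 0.0):.2f}"
        )
        lines.append(f"    {result['content']}")
    return "\n".join(lines)


context_block = format_sources(SAMPLE_RESPONSE)
print(context_block)
```

Numbering the sources lets the LLM cite them as `[1]`, `[2]`, and so on, which makes its answers easier to verify against the original URLs.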

Advanced search parameters

Under Additional fields, Tavily offers several powerful parameters to fine-tune your searches:

Key parameters to know:

Search depth

- basic: Single API credit, suitable for most queries

- advanced: Two API credits, deeper search for complex topics

Max results

- Default is 5, adjustable based on your needs

- More results = more context but higher token usage in your LLM

Time range

- Filter for recent content (today, this week, this month)

- Essential for time-sensitive queries

Include answer

- basic: Generates a compact, concise summary of the search results

- advanced: Provides a more detailed, comprehensive analysis of the findings

- Surprisingly, using any of these costs no additional credits!
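To make these options concrete, here is a minimal sketch of a helper that assembles and validates a search request body. The field names (`search_depth`, `max_results`, `time_range`, `include_answer`) are assumed to mirror the playground options, and the accepted `time_range` values are also an assumption; check the Tavily API reference before relying on them. The function `build_search_payload` is hypothetical, and nothing is sent over the network here.

```python
import json

VALID_DEPTHS = {"basic", "advanced"}
# Assumed API spellings for "today / this week / this month" style filters.
VALID_TIME_RANGES = {"day", "week", "month", "year"}
VALID_ANSWER_MODES = {"basic", "advanced"}


def build_search_payload(query, search_depth="basic", max_results=5,
                         time_range=None, include_answer=None):
    """Validate the search options and return a JSON-serializable request body."""
    if not query or not query.strip():
        raise ValueError("query must not be empty")
    if search_depth not in VALID_DEPTHS:
        raise ValueError(f"search_depth must be one of {sorted(VALID_DEPTHS)}")
    if max_results < 1:
        raise ValueError("max_results must be at least 1")
    if time_range is not None and time_range not in VALID_TIME_RANGES:
        raise ValueError(f"time_range must be one of {sorted(VALID_TIME_RANGES)}")
    if include_answer is not None and include_answer not in VALID_ANSWER_MODES:
        raise ValueError(f"include_answer must be one of {sorted(VALID_ANSWER_MODES)}")

    payload = {
        "query": query.strip(),
        "search_depth": search_depth,
        "max_results": max_results,
    }
    # Only include optional fields when they were explicitly requested.
    if time_range is not None:
        payload["time_range"] = time_range
    if include_answer is not None:
        payload["include_answer"] = include_answer
    return payload


payload = build_search_payload(
    "What are the recent news about Amazon Bedrock?",
    max_results=3,
    time_range="week",
    include_answer="basic",
)
print(json.dumps(payload, indent=2))
```

Validating up front keeps typos such as `search_depth="deep"` from silently burning credits on a request that does not do what you intended.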

The magic of include_answer

The “Include answer” feature is what truly sets Tavily apart. When you enable include_answer, Tavily doesn’t just return search results — it generates a coherent LLM-powered summary of all the sources it found.

For example, when querying about Amazon Bedrock’s latest updates with include_answer set to basic, Tavily returned: “Amazon Bedrock now offers access to advanced foundation models from OpenAI and includes new guardrails for responsible AI implementation.” This information was only days old at the time, well beyond any LLM’s training data.

The best part? This AI-generated summary doesn’t cost any additional API credits. You’re essentially getting a free, context-aware summarization on top of your search results. This feature alone can eliminate an entire LLM call from your pipeline, reducing both latency and costs.
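One way to act on this is an "answer first" check: use Tavily's summary when it is present, and fall back to the raw snippets (and your own LLM call) only when it is not. The sketch below assumes the response contains an optional `answer` string alongside `results`, as in the playground output. The function name and the `min_chars` cutoff are hypothetical choices for illustration.

```python
from __future__ import annotations


def answer_or_context(response: dict, min_chars: int = 20) -> tuple[str, bool]:
    """Return (text, needs_llm).

    needs_llm is False when Tavily's own summary can be used as-is,
    and True when the caller should summarize the snippets itself.
    """
    answer = (response.get("answer") or "").strip()
    if len(answer) >= min_chars:
        return answer, False
    # No usable summary: hand back the raw snippets for a downstream LLM call.
    snippets = [r["content"] for r in response.get("results", [])]
    return "\n\n".join(snippets), True


with_answer = {
    "answer": "Amazon Bedrock now offers access to advanced foundation models "
              "from OpenAI and includes new guardrails for responsible AI "
              "implementation.",
    "results": [],
}
without_answer = {
    "answer": None,
    "results": [
        {"content": "First snippet."},
        {"content": "Second snippet."},
    ],
}

print(answer_or_context(with_answer))
print(answer_or_context(without_answer))
```

The boolean flag keeps the decision explicit, so the calling code can log how often the extra LLM call is actually skipped.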

Building your first Tavily-powered agent

Now that you understand Tavily’s capabilities, let’s build an intelligent agent that knows when and how to use web search.

Architecture overview

We’ll create an agent using:

- Amazon Bedrock: For foundational LLM capabilities (using Claude 3 Haiku)

- LangGraph: For agent orchestration and tool management

- Tavily: As our web search tool

The key insight: our agent should be smart enough to decide when web search is necessary. Not every query needs real-time data!

Setting up the environment

- First, install the required packages:

    pip install langgraph langchain langchain-aws langchain-tavily python-dotenv

- Second, set up your environment variable. If you’re running the script below, create a .env file with your Tavily API key:

    TAVILY_API_KEY=your_api_key_here

- Third, log in to your AWS account through the CLI and make sure you have the necessary permissions to access Bedrock.

Complete implementation

Here’s the full code for creating your Tavily-powered agent:

    import os

    import dotenv
    from langchain_aws import ChatBedrock
    from langchain_core.messages.ai import AIMessage
    from langchain_tavily import TavilySearch
    from langgraph.prebuilt import create_react_agent

    # Load TAVILY_API_KEY from the .env file into the process environment
    dotenv.load_dotenv()
    if not os.getenv("TAVILY_API_KEY"):
        raise RuntimeError("TAVILY_API_KEY is not set; add it to your .env file")

    # 1. Define the Tavily search tool
    tavily_search_tool = TavilySearch(
        max_results=3,
        topic="general",
        verbose=True,
        time_range="week",
    )

    # 2. Connect to Bedrock LLM (e.g. Claude 3 Haiku)
    llm = ChatBedrock(
        model_id="anthropic.claude-3-haiku-20240307-v1:0",  # or any other available Bedrock model
        model_kwargs={"temperature": 0.2},
    )

    # 3. Create the agent using LangGraph's create_react_agent
    agent = create_react_agent(llm, [tavily_search_tool])

    # 4. Run the agent with a question (streaming); type "q" to quit
    while True:
        user_input = input("\nYou: ")
        if user_input == "q":
            break
        for step in agent.stream({"messages": user_input}, stream_mode="values"):
            last_message = step["messages"][-1]
            if isinstance(last_message, AIMessage):
                if last_message.tool_calls:
                    # The model decided to call a tool: show which one and with what arguments
                    for tool_call in last_message.tool_calls:
                        print(f"\nTool: {tool_call['name']}, Args: {tool_call['args']}")
                else:
                    # Final answer from the model
                    print(f"\nAI: {last_message.content}")

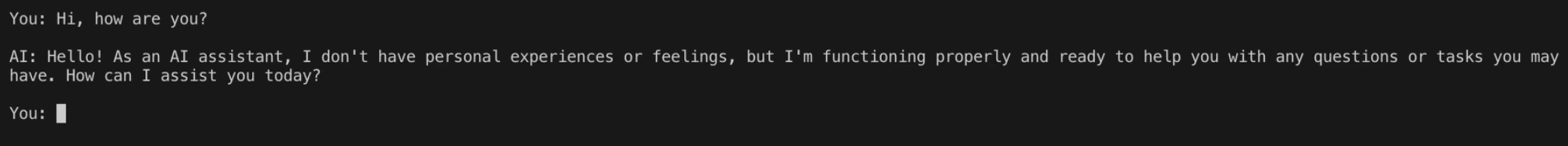

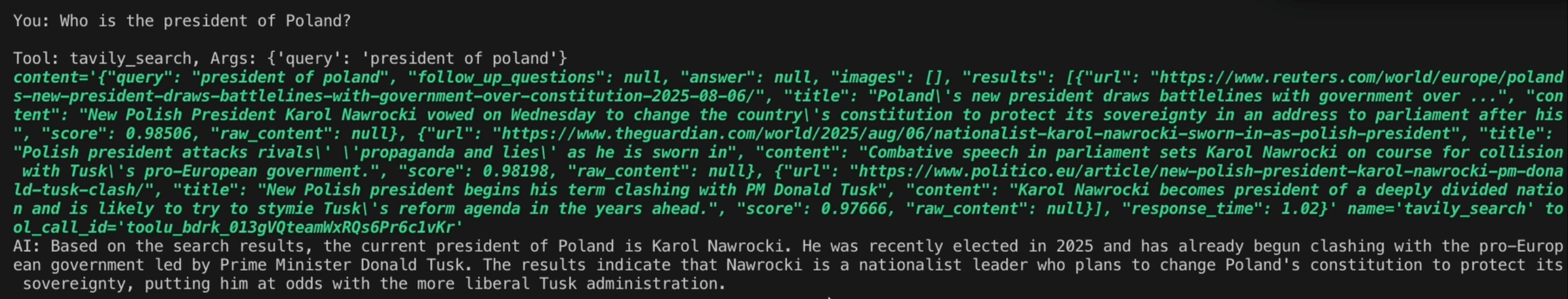

Testing your agent

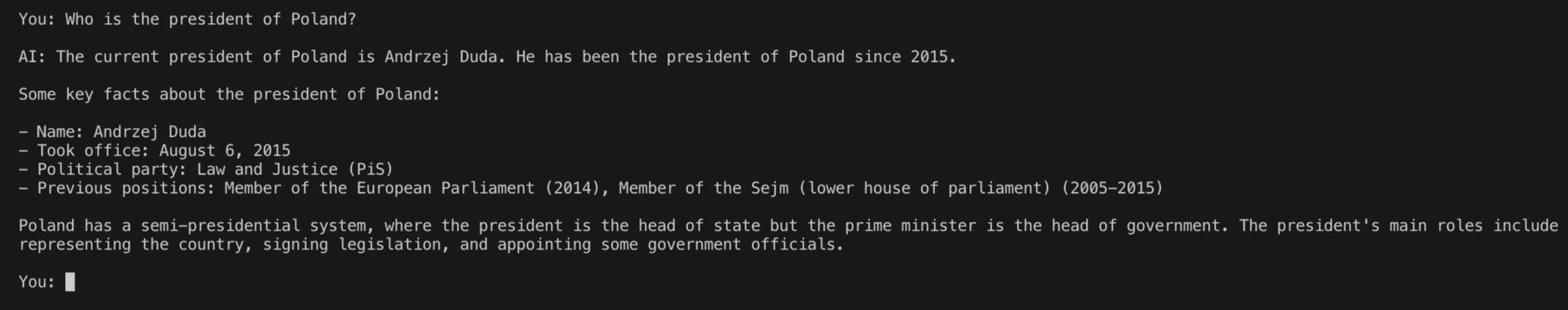

Let’s run the agent script and see how it handles different types of queries:

Query 1: Simple greeting

Note: No tool use — the agent correctly identifies this doesn’t need web search.

Query 2: Current information

Note: The agent recognizes this requires current information and automatically searches.

The proof: without Tavily vs. with Tavily

To really drive home the difference, let’s now disable Tavily. Delete it from the agent’s tool list.

    agent = create_react_agent(llm, [])

Now, let’s run the same query again.

Wrong! The agent provides outdated information from its training data. This is exactly the problem Tavily solves.

Production lessons: what we learned

After deploying Tavily in production at ChaosGears, here are the key insights that will save you time and money:

1. The free LLM answer is gold

The most surprising discovery: Tavily’s LLM-generated answers don’t cost extra credits. This means you can:

- Get pre-summarized search results without additional LLM calls

- Reduce latency by skipping a summarization step

- Save on token costs in your primary LLM

Pro tip: Consider using Tavily as a standalone Q&A service for some use cases — you might not even need to process the results further!

2. Basic search depth is usually enough

After extensive testing, we found:

- Basic search (1 credit) handled 95% of our queries perfectly

- Advanced search (2 credits) possibly improved results for highly complex, multi-faceted questions, but it wasn’t always a clear win

- The cost-benefit rarely justified using advanced search

Recommendation: Start with basic search and only upgrade to advanced for specific, validated use cases.
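To see what this recommendation means for your budget, here is a back-of-the-envelope sketch. The credit prices (1 for basic, 2 for advanced) and the 1,000 free monthly credits come from this guide. The query volume and the 5% advanced share are made-up illustrative numbers, and `monthly_credits` is a hypothetical helper.

```python
CREDITS_PER_SEARCH = {"basic": 1, "advanced": 2}
FREE_MONTHLY_CREDITS = 1000


def monthly_credits(queries_per_month: int, advanced_share: float) -> int:
    """Estimate monthly credit usage for a given share of advanced searches."""
    if not 0.0 <= advanced_share <= 1.0:
        raise ValueError("advanced_share must be between 0 and 1")
    advanced_queries = round(queries_per_month * advanced_share)
    basic_queries = queries_per_month - advanced_queries
    return (
        basic_queries * CREDITS_PER_SEARCH["basic"]
        + advanced_queries * CREDITS_PER_SEARCH["advanced"]
    )


# Illustrative scenario: 800 queries a month.
mostly_basic = monthly_credits(800, advanced_share=0.05)
all_advanced = monthly_credits(800, advanced_share=1.0)

for label, credits in [("5% advanced", mostly_basic), ("100% advanced", all_advanced)]:
    status = "within" if credits <= FREE_MONTHLY_CREDITS else "over"
    print(f"{label}: {credits} credits ({status} the free tier)")
```

In this scenario, defaulting to basic search keeps the workload inside the free tier, while running everything as advanced does not.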

3. Stick with the “General” search topic

Tavily offers three search topics:

- General

- Financial

- News

Despite our initial assumptions, the “General” topic consistently provided the best results, even for news queries. The specialized topics occasionally missed relevant content that the general search found, wrongly narrowing the sources.

4. The managed solution advantage

Switching from Google Search API to Tavily reduced our development time from months to days. Why?

- No parsing infrastructure: Clean JSON out of the box

- No maintenance overhead: Tavily handles the web scraping complexity

- Better scalability: Higher rate limits and more reliable service

- Faster iteration: Change search parameters without rewriting parsers

Performance optimization tips

Token management

- Start with max_results: 3 for most queries

- Increase only when dealing with complex, multi-source topics

- Use relevance score thresholds to filter low-quality sources
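A relevance threshold can be applied in a few lines before the results ever reach your LLM. This is a minimal sketch that assumes each result carries a `score` between 0.0 and 1.0. The default cutoff of 0.5 is an arbitrary starting point to tune on your own queries, and `select_results` is a hypothetical helper name.

```python
def select_results(results, min_score=0.5, max_results=3):
    """Keep only results at or above min_score, best first, capped at max_results."""
    kept = [r for r in results if r.get("score", 0.0) >= min_score]
    kept.sort(key=lambda r: r.get("score", 0.0), reverse=True)
    return kept[:max_results]


# Scores taken from the playground example, plus one made-up low-quality hit.
candidates = [
    {"title": "Amazon Bedrock Guardrails | AWS News Blog", "score": 0.6320719},
    {"title": "Featured | AWS News Blog", "score": 0.66229486},
    {"title": "Unrelated page (hypothetical)", "score": 0.21},
    {"title": "Danilo Poccia | AWS News Blog", "score": 0.6410185},
]

selected = select_results(candidates)
for result in selected:
    print(f"{result['score']:.2f}  {result['title']}")
```

Filtering before prompting trims tokens twice: fewer sources go into the context, and the model spends less output reasoning about irrelevant ones.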

Cost optimization

- Leverage the free include_answer feature to reduce LLM calls

- Use basic search depth as your default

- Implement caching for frequently asked questions
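Caching can be as simple as a small time-to-live (TTL) wrapper around whatever function performs the search. The sketch below is one possible approach, not a description of our production setup: `TTLSearchCache` is a hypothetical class, the search function is injected so that no particular client library is assumed, and the five-minute default TTL is an arbitrary choice.

```python
import time


class TTLSearchCache:
    """Cache search responses per normalized query for a limited time."""

    def __init__(self, search_fn, ttl_seconds=300.0, clock=time.monotonic):
        self._search_fn = search_fn   # any callable: query -> response
        self._ttl = ttl_seconds
        self._clock = clock           # injectable for testing
        self._entries = {}            # key -> (stored_at, response)

    @staticmethod
    def _key(query):
        # Treat queries that differ only in case or whitespace as identical.
        return " ".join(query.lower().split())

    def search(self, query):
        key = self._key(query)
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]           # fresh hit: no credits spent
        response = self._search_fn(query)
        self._entries[key] = (now, response)
        return response


# Stand-in for a real search call, so the example runs offline.
calls = []


def fake_search(query):
    calls.append(query)
    return {"query": query, "results": []}


fake_now = [0.0]
cache = TTLSearchCache(fake_search, ttl_seconds=60, clock=lambda: fake_now[0])

cache.search("Amazon Bedrock news")
cache.search("  amazon bedrock   NEWS ")   # served from cache
calls_before_expiry = len(calls)

fake_now[0] = 61.0                          # entry has expired
cache.search("Amazon Bedrock news")
calls_after_expiry = len(calls)

print(calls_before_expiry, calls_after_expiry)
```

Keep the TTL short for time-sensitive topics, since a stale cached result reintroduces the very problem that web search is meant to solve.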

The future of AI-powered search

We’re witnessing a fundamental transformation in how humans interact with information. This isn’t just an incremental improvement — it’s a complete paradigm shift that’s as significant as the move from libraries to search engines.

The new search paradigm

Remember the “old way”? Type keywords, get ten blue links, open multiple tabs, scan through pages filled with ads and cookie popups, try to piece together information from different sources.

Now, what about this? You ask a question in natural language, and within seconds you get a comprehensive, accurate answer backed by current sources. No clicking, no scanning, no ads. Just the information you need, when you need it.

This is already happening. Tools like Perplexity AI and Tavily-powered agents are showing us what’s possible when AI and search converge. Complex research that once took hours — comparing products, understanding technical topics, gathering market intelligence — now happens in a single conversation.

The transformation in action

What makes this shift so powerful is the conversational nature. You can:

- Ask follow-up questions to dive deeper

- Request clarification on specific points

- Combine multiple queries into complex research tasks

- Get explanations tailored to your level of understanding

Your AI agent becomes a research assistant that never gets tired, can process hundreds of sources in seconds, and always provides citations for verification. It’s not replacing human judgment — it’s augmenting human capability in ways we’re only beginning to explore.

Challenges to consider

Of course, this transformation brings new questions we need to address:

Trust and Verification: How do we ensure AI-summarized information remains accurate and unbiased? Who decides what sources are authoritative?

The Economics of Information: If users never visit websites directly, how do content creators sustain their work? Will we see new models emerge where AI providers compensate original sources?

Manipulation Risks: As AI becomes the primary interface to information, the incentive to influence these systems grows. Will we see SEO-style optimization attempts for AI search results?

These are important considerations, but they shouldn’t overshadow the incredible potential. Every major technological shift brings challenges — what matters is that we’re building something that makes information more accessible, research more efficient, and knowledge more actionable than ever before.

Conclusion: your next steps

AI agents need real-time, reliable data to reach their full potential. Tavily provides the missing piece — web search designed specifically for LLMs. The integration is simple, the results are impressive, and the impact on your agents’ capabilities is transformative.

Ready to get started?

- Sign up for Tavily: Get your free API key (1,000 credits/month)

- Explore the Playground: Test queries relevant to your use case

- Build a Prototype: Use the code from this guide as your starting point

- Iterate and Optimize: Apply the production lessons to your specific needs

The combination of Amazon Bedrock’s powerful models, LangGraph’s elegant orchestration, and Tavily’s AI-first search creates agents that aren’t just smart — they’re current, accurate, and genuinely useful.

Don’t let your LLMs remain stuck in the past. Give them the power to access real-time information, and watch as they transform from impressive demos into indispensable tools.

Resources

This post is based on a webinar I presented about our experience integrating Tavily with Amazon Bedrock agents. The full webinar recording is available here.