Challenges with S3 migrator

Have you ever thought about moving buckets between accounts/regions?

- Amazon EC2
- AWS Lambda
- AWS Fargate
- Amazon S3
- AWS Tools and SDKs
- Boto3 (AWS SDK for Python)
- Python

Three services could handle our task (Lambda, EC2 and Fargate), but we will choose only one. Let’s start with Lambda.

AWS Lambda

Its great scalability is a benefit whether we want to copy ten, ten thousand or even more objects. In typical use cases we don’t need to copy buckets 24 hours a day, so it’s really beneficial to pay only for the time when Lambda is running. So, what’s the drawback? Unfortunately, it’s the maximum execution time. The hard limit is 15 minutes and there is no way to increase it; the only workaround is to start another Lambda that picks up the remaining work. Since we want to keep things as simple as possible, saving the state of a task between Lambdas would be quite challenging: we would need to store both the objects already copied and the ones still waiting to be processed. All this jumping between Lambdas, saving and restoring state, would make the migration process fragile.
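To illustrate the bookkeeping a chained-Lambda design would need, here is a minimal sketch (not part of S3 migrator, which does not copy with Lambda). It assumes a hypothetical `copy_one` callable and a `remaining_ms` callable; in a real handler the latter would be the Lambda context method `context.get_remaining_time_in_millis`. The function stops before the time limit and returns the keys that were not copied, which would then have to be persisted and handed to the next invocation.

```python
from typing import Callable, List


def copy_until_timeout(
    keys: List[str],
    copy_one: Callable[[str], None],
    remaining_ms: Callable[[], int],
    safety_margin_ms: int = 60_000,
) -> List[str]:
    """Copy keys until the invocation gets close to its time limit.

    Returns the keys that were NOT copied. A chained design would have to
    store these somewhere and pass them to the next Lambda invocation.
    """
    for index, key in enumerate(keys):
        # Stop early if fewer than safety_margin_ms remain, so the 15-minute
        # hard limit never kills the invocation in the middle of a copy.
        if remaining_ms() < safety_margin_ms:
            return keys[index:]
        copy_one(key)
    return []
```

Every returned list is exactly the state that would have to survive between invocations, which is the fragility described above.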

AWS EC2

So, let’s check another possibility. EC2 is a great service with many advantages, but just as many disadvantages. Let’s see how it handles our task. For starters, it doesn’t have any time limit, and the various instance types offer enough computing power to handle the migration. So why don’t we use it? We pay while the EC2 instance is running, so even when we are not copying any bucket, the instance is draining our wallets… We need to remember to stop it after the process is finished. And if a task turns out to be too “heavy” for the selected instance type, we have to replace it with a “bigger one”. Of course, an Auto Scaling group could solve that problem, but as I’ve mentioned at the beginning, we want a solution that is as simple as possible. And last but not least… EC2 isn’t serverless, and we do love serverless ;)

AWS Fargate

And so, we come to the last one. Fargate allows us to run containers and pay only for the time we use them, like Lambda, and it doesn’t have any time limit, like EC2. We don’t need to maintain anything while the process is running. We didn’t debate long: Fargate is the service we need and want to use.

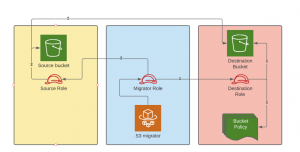

Assume role

The first problem we faced was how to connect to different accounts and get access to S3 objects. To achieve our goals we needed at least 4 roles (5 if we count a role for logging):

- Source role, which gives access to the source S3 bucket and can be assumed by the migrator role.

- Destination role, which gives access to the destination S3 bucket and can be assumed by the migrator role.

- Migrator general role, which is allowed to assume both the source and destination roles.

- Migrator execution role, which simply allows the Fargate task and its containers to run.

For example, the migrator general role can use a policy like this:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["sts:AssumeRole"],
      "Resource": [
        "arn:aws:iam::xxxxxxxxxxxx:role/src_role_name*",
        "arn:aws:iam::xxxxxxxxxxxx:role/dst_role_name*"
      ]
    }
  ]
}
```

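For a cross-account assume to work, the source and destination roles also need a trust policy naming the migrator general role as a principal. A minimal sketch that builds such a document in Python, assuming a placeholder ARN (`111111111111` and `migrator_role_name` are hypothetical):

```python
import json


def trust_policy(migrator_role_arn: str) -> dict:
    """Trust policy for a source or destination role.

    It lets the migrator general role call sts:AssumeRole on the role this
    document is attached to. The ARN passed in is a placeholder.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": migrator_role_arn},
                "Action": "sts:AssumeRole",
            }
        ],
    }


document = json.dumps(
    trust_policy("arn:aws:iam::111111111111:role/migrator_role_name"), indent=2
)
```

With boto3, such a string could be passed as `AssumeRolePolicyDocument` to `iam_client.create_role(RoleName=..., AssumeRolePolicyDocument=document)` in the source and destination accounts.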
There are two main problems to handle. The first: how do we get access to both buckets (source and destination) using one of the roles? We assume that the source and destination buckets belong to different accounts. Let’s look at the boto3 copy function:

```python
client.copy_object(Bucket=bucket_name, CopySource=copy_source, Key=key)
```

If we use the source role, the source client gets access only to the source bucket, and vice versa with the destination role. In other words, something like this will fail:

```python
source_client.copy_object(Bucket=destination_bucket_name, CopySource={"Bucket": source_bucket_name, "Key": key}, Key=key)
```

The same happens when we replace the source client with the destination one:

```python
destination_client.copy_object(Bucket=destination_bucket_name, CopySource={"Bucket": source_bucket_name, "Key": key}, Key=key)
```

To solve this issue we need a workaround. Fortunately, S3 buckets support bucket policies, which grant extra permissions on a bucket, including to other accounts. In our case, we use the destination bucket’s policy to give the source account access to it. So, the flow looks like this:

- Assume the destination role

- Use the S3 client on the destination account to modify the bucket policy and give access to the source account

- Assume the source role

- Use the source role with the boto3 copy_object function

Assume role flow
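The four steps above can be sketched as follows. This is a minimal illustration, not S3 migrator’s code: the function names are hypothetical, and the STS client and an S3 client factory are passed in so the sketch stays testable (with boto3 they would be `boto3.client("sts")` and `lambda **kw: boto3.client("s3", **kw)`). The bucket policy grants `s3:PutObject` to the source account as a whole; the source role’s own IAM policy must also allow writing to the destination bucket, since cross-account access needs permission on both sides.

```python
import json
from typing import Callable, Iterable


def credentials_kwargs(sts_client, role_arn: str, session_name: str) -> dict:
    """Assume role_arn and return keyword arguments for boto3.client()."""
    creds = sts_client.assume_role(
        RoleArn=role_arn, RoleSessionName=session_name
    )["Credentials"]
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }


def destination_bucket_policy(bucket: str, source_account_id: str) -> dict:
    """Bucket policy allowing the source account to write objects into bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowSourceAccountWrite",
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{source_account_id}:root"},
                "Action": ["s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }


def copy_across_accounts(sts_client, make_s3_client: Callable[..., object],
                         src_role_arn: str, dst_role_arn: str,
                         source_account_id: str, src_bucket: str,
                         dst_bucket: str, keys: Iterable[str]) -> None:
    # Steps 1-2: assume the destination role and open the destination bucket
    # to the source account. Note: put_bucket_policy REPLACES any existing policy.
    dst_s3 = make_s3_client(**credentials_kwargs(sts_client, dst_role_arn, "migrator-dst"))
    dst_s3.put_bucket_policy(
        Bucket=dst_bucket,
        Policy=json.dumps(destination_bucket_policy(dst_bucket, source_account_id)),
    )
    # Steps 3-4: assume the source role; its client reads from the source
    # bucket and, thanks to the bucket policy, may write to the destination.
    src_s3 = make_s3_client(**credentials_kwargs(sts_client, src_role_arn, "migrator-src"))
    for key in keys:
        src_s3.copy_object(
            Bucket=dst_bucket,
            CopySource={"Bucket": src_bucket, "Key": key},
            Key=key,
        )
```

A production version would merge the new statement into any existing bucket policy instead of overwriting it.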

The first problem is solved. What about the second one? Assuming a role has a big drawback: the session time limit. A role session can last at most 12 hours, and this is a hard limit. To make things worse, when one role assumes another (role chaining), as happens when our Fargate task assumes the source or destination role, the session is limited to 1 hour. As mentioned at the beginning, the copy process can take anywhere from minutes to days, and at times even longer, so such a limit can cause real pain. Fortunately, a running task can refresh its assumed session whenever it needs to.

What does it mean in practice? During the process, the migrator tracks how long it has been using the current role session, and once that time exceeds a defined limit, it assumes the same role again and the counter resets. So, how should we define that limit? We could simply check whether the session is still valid and, if not, assume the role again before resuming the copy. But what if the session is about to expire right after passing that check?

Let’s say our migrator has held a session for 59 minutes and 59 seconds. The validity check passes, but copying a single object can take much longer than the one second left… so we could exceed the session time in the middle of a copy. To prevent this, we define a refresh threshold inside the migrator: once a session is older than the threshold, the migrator assumes the role again before starting the next copy. At first we set it to 50 minutes, but tests with bigger files showed that the remaining 10-minute window is simply too short.

In the end, we decided to refresh the session every 15 minutes, which leaves a 45-minute window and prevents unwanted access denied exceptions during copying (unless a single object takes longer than 45 minutes to copy).

Refresh role process
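The refresh rule can be sketched as a small credentials holder. This is a minimal sketch, assuming a 1-hour session (the role-chaining cap) and a 15-minute refresh threshold; the class name is hypothetical and the STS client and clock are injected so the logic can be tested without AWS. Calling `get()` before each object copy guarantees that any copy starts with at least 45 minutes of session validity left.

```python
import time
from typing import Callable, Optional


class RefreshingRoleCredentials:
    """Re-assume a role once the current session is older than refresh_after."""

    def __init__(self, sts_client, role_arn: str, session_name: str,
                 refresh_after: float = 15 * 60,
                 clock: Callable[[], float] = time.monotonic):
        self._sts = sts_client
        self._role_arn = role_arn
        self._session_name = session_name
        self._refresh_after = refresh_after
        self._clock = clock
        self._credentials: Optional[dict] = None
        self._assumed_at = 0.0

    def get(self) -> dict:
        """Return credentials, assuming the role again when the session is too old."""
        age = self._clock() - self._assumed_at
        if self._credentials is None or age >= self._refresh_after:
            response = self._sts.assume_role(
                RoleArn=self._role_arn,
                RoleSessionName=self._session_name,
                DurationSeconds=3600,  # 1 hour: the maximum for role chaining
            )
            self._credentials = response["Credentials"]
            self._assumed_at = self._clock()
        return self._credentials
```

Checking the age of the session, rather than whether it is still valid, is what protects long copies: the decision is made before a copy starts, with a wide margin.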

Copy object and copy

The AWS SDK for Python (boto3) gives us two functions for copying objects between buckets: copy_object and copy. copy_object was our first choice; we use it together with multithreading to decrease the time the copy process needs to complete.

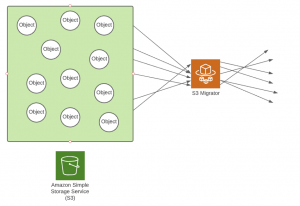

Multithreading inside S3 migrator
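Multithreaded copying with copy_object can be sketched with a thread pool sharing one client (boto3 documents low-level clients as thread-safe). This is a minimal sketch, not the migrator’s code; the function name is hypothetical. Because botocore’s default connection pool holds 10 connections, a client shared by 20 threads would typically be created with `botocore.config.Config(max_pool_connections=20)`.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable, List


def copy_objects_threaded(s3_client, src_bucket: str, dst_bucket: str,
                          keys: Iterable[str], max_threads: int = 20) -> List[str]:
    """Copy many small objects in parallel, one copy_object call per object.

    Returns the keys in the order they were submitted.
    """
    def copy_one(key: str) -> str:
        s3_client.copy_object(
            Bucket=dst_bucket,
            CopySource={"Bucket": src_bucket, "Key": key},
            Key=key,
        )
        return key

    with ThreadPoolExecutor(max_workers=max_threads) as pool:
        # map() re-raises the first worker exception while the results are consumed.
        return list(pool.map(copy_one, keys))
```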

In case you need proof, below is the copy time depending on the number of threads.

Copying 100 GB of data:

- 5 threads - 3203s

- 10 threads - 2245s

- 20 threads - 1720s

As you can see, the migrator finishes the task almost twice as fast (3203 s vs 1720 s) with four times as many threads. Beyond 20 threads the gains were not so impressive, so we treat that number as a hard limit for our tool.
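The diminishing returns are easier to see as speedup and parallel efficiency (speedup divided by the thread multiplier). This is only arithmetic on the timings reported above, nothing is re-measured: it gives about 1.43× at 10 threads and 1.86× at 20 threads, i.e. roughly 47% efficiency at 20 threads.

```python
def scaling_report(timings_s: dict) -> dict:
    """Speedup and parallel efficiency relative to the smallest thread count."""
    base_threads = min(timings_s)
    base_time = timings_s[base_threads]
    report = {}
    for threads, seconds in sorted(timings_s.items()):
        speedup = base_time / seconds
        # Efficiency of 1.0 would mean perfectly linear scaling with threads.
        efficiency = speedup / (threads / base_threads)
        report[threads] = (round(speedup, 2), round(efficiency, 2))
    return report


# Timings for copying 100 GB, taken from the measurements above.
print(scaling_report({5: 3203, 10: 2245, 20: 1720}))
```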

A problem appeared when we ran tests with bigger objects. Unfortunately, copy_object only works for objects up to 5 GB; above that size, the call returns an error. So, what’s the solution? We use the copy function instead of copy_object. Instead of using threads to copy multiple small objects at once, copy uses them to split a big object into smaller parts (a multipart copy) and copy those parts in parallel, which is faster than using a single thread.

Multithreading with AWS SDK

S3 migrator combines these two approaches. It uses its own threads to copy objects smaller than 5 GB, and lets boto3’s threads split objects bigger than 5 GB into smaller parts. Together they reduce the time needed to finish the copy process to a minimum.

IMPORTANT! During development we also tried calling copy for small objects from the migrator’s own threads, and it turned out to be a complete disaster. The migrator threads plus boto3’s transfer threads exceeded the S3 client’s connection pool, and the migrator kept waiting endlessly… trying to get access to S3. Since boto3 already manages threads inside copy, you should not wrap it in threads of your own.
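The split between the two functions can be sketched as follows, assuming object sizes come from a listing (for example the `Size` field of list_objects_v2) and treating the copy_object limit as 5 GiB. The names are hypothetical. Large objects go through `client.copy` one at a time from the calling thread, never from inside the migrator’s own pool; `transfer_config` would be a `boto3.s3.transfer.TransferConfig(max_concurrency=...)`, and the client’s `max_pool_connections` should be at least the larger of `max_threads` and that concurrency, since the two phases never overlap.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

# copy_object limit (5 GB), interpreted here as 5 GiB.
COPY_OBJECT_LIMIT = 5 * 1024 ** 3


def split_by_size(sizes: Dict[str, int]) -> Tuple[List[str], List[str]]:
    """Split keys into (small, large) around the copy_object limit."""
    small = [k for k, size in sizes.items() if size < COPY_OBJECT_LIMIT]
    large = [k for k, size in sizes.items() if size >= COPY_OBJECT_LIMIT]
    return small, large


def copy_all(s3_client, src_bucket: str, dst_bucket: str, sizes: Dict[str, int],
             transfer_config=None, max_threads: int = 20) -> None:
    small, large = split_by_size(sizes)

    # Small objects: our own threads, one copy_object call per object.
    def copy_small(key: str) -> None:
        s3_client.copy_object(
            Bucket=dst_bucket,
            CopySource={"Bucket": src_bucket, "Key": key},
            Key=key,
        )

    with ThreadPoolExecutor(max_workers=max_threads) as pool:
        list(pool.map(copy_small, small))

    # Large objects: sequentially from this thread. copy() starts its own
    # worker threads for the parts, so it is NOT wrapped in our pool.
    for key in large:
        s3_client.copy(
            CopySource={"Bucket": src_bucket, "Key": key},
            Bucket=dst_bucket,
            Key=key,
            Config=transfer_config,
        )
```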

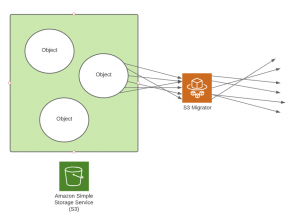

Copy vs migration

It’s not the biggest challenge we’ve faced during the development process, but it deserves more explanation. Copy and migration are the main functionalities offered by S3 migrator.

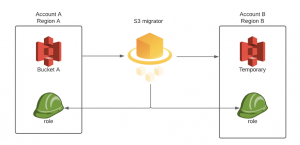

Copy creates a bucket on the destination account and copies every object from source to destination. That’s an excellent example of what “as simple as possible” means.
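The two building blocks of the copy scenario, creating the destination bucket and enumerating every source object, can be sketched with real boto3 S3 client calls. This is a minimal sketch with hypothetical function names; the copy of each object would then use copy_object or copy as described in the previous section.

```python
from typing import Iterator


def create_bucket(s3_client, bucket: str, region: str) -> None:
    """Create a bucket; outside us-east-1 the region must be given explicitly."""
    if region == "us-east-1":
        s3_client.create_bucket(Bucket=bucket)
    else:
        s3_client.create_bucket(
            Bucket=bucket,
            CreateBucketConfiguration={"LocationConstraint": region},
        )


def iter_objects(s3_client, bucket: str) -> Iterator[dict]:
    """Yield every object of a bucket, following list_objects_v2 pagination."""
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        # A page for an empty bucket has no "Contents" key at all.
        yield from page.get("Contents", [])
```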

Copy scenario

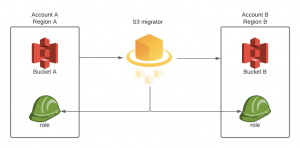

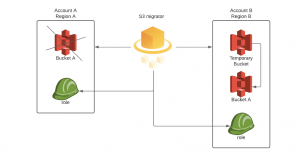

The harder part is migration, as we need to keep the source bucket’s name. Why is it harder? Because bucket names are globally unique, so if you or your neighbor has a bucket named “A”, nobody can create another one with the same name. To make it work, you need to follow these steps:

- Create temporary bucket on destination account

- Copy all objects from source to temporary bucket

- Delete source bucket

- Wait until source bucket name is again available

- Create destination bucket with the name of source bucket

- Copy all objects from temporary bucket to the destination one

- Delete temporary bucket

Yeah, that’s a lot of places where something can go wrong. Fortunately, S3 migrator takes care of all those steps, so once you define a task, you don’t need to supervise it or manage whichever step is currently running.

Migration scenario
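The step order and the “wait until the name is available again” loop can be sketched as below. This is a minimal sketch, not S3 migrator’s implementation: every step is a hypothetical callable, and the error codes treated as “name not ready yet” are an assumption (botocore’s ClientError exposes the code as `exc.response["Error"]["Code"]`). If someone else claims the name in the meantime, the loop eventually gives up with a timeout.

```python
import time
from typing import Callable

# Assumed error codes while the deleted bucket name is not reusable yet.
NAME_NOT_READY_CODES = {"BucketAlreadyExists", "OperationAborted"}


def name_not_ready(exc: Exception) -> bool:
    """True if exc looks like a ClientError saying the name is still taken."""
    response = getattr(exc, "response", None)
    if not isinstance(response, dict):
        return False
    return response.get("Error", {}).get("Code") in NAME_NOT_READY_CODES


def run_migration(create_temp: Callable[[], None],
                  copy_source_to_temp: Callable[[], None],
                  delete_source: Callable[[], None],
                  create_final: Callable[[], None],
                  copy_temp_to_final: Callable[[], None],
                  delete_temp: Callable[[], None],
                  sleep: Callable[[float], None] = time.sleep,
                  poll_seconds: float = 60.0,
                  max_attempts: int = 180) -> None:
    create_temp()
    copy_source_to_temp()
    delete_source()  # S3 only deletes empty buckets, so this must empty it first
    for _ in range(max_attempts):
        try:
            create_final()  # same name as the deleted source bucket
            break
        except Exception as exc:
            if not name_not_ready(exc):
                raise
            sleep(poll_seconds)
    else:
        raise TimeoutError("source bucket name did not become available")
    copy_temp_to_final()
    delete_temp()
```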

Logging and progress bar

Another great S3 migrator feature, but a very problematic one to implement. S3 migrator needs an extension that starts periodically, asks CloudWatch for the S3 migrator logs and counts the objects already copied and the ones still waiting in the queue. Once again, we had to choose the right service. This time the decision was easy, because Lambda fits perfectly here. Each run only asks about the current step, so it doesn’t take much time and we don’t need to worry about the 15-minute limit. Costs shouldn’t kill us either, since we don’t need real-time updates: we start the function periodically and ask for the current state instead.

Let’s think about the execution role. Lambda needs basic permissions to start, permission to access the S3 migrator log group and… access to the source and destination account buckets. Yes, to achieve our goals we need to assume roles on both accounts yet again (fortunately, this time without adding any bucket policy).

Let’s start with CloudWatch access, which is quite straightforward. Lambda uses the boto3 CloudWatch Logs client to periodically get logs. As we decided to start the Lambda every 15 minutes, it is important to fetch only the logs from those last 15 minutes each time. If we omit this step, the Lambda will collect more and more logs on every run. For a bigger process, it’s easy to see that at some point we could exceed the Lambda execution time limit just by collecting such a large amount of data.

```python
client.get_log_events(logGroupName=log_group, logStreamName=log_stream, startTime=start, endTime=end)
```

Another great feature is the pair of startTime and endTime parameters of get_log_events, which define the time window to collect data from. Every time the Lambda starts, startTime is set to the current time minus 15 minutes, and endTime to the current time. For example, if endTime is 1:50 PM, startTime will be 1:35 PM. The API accepts only timestamps (milliseconds since the Unix epoch), so both values are converted before being passed in.
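A minimal sketch of the window computation and of reading one log stream page by page. Function names are hypothetical; `logs_client` would be `boto3.client("logs")` and `now` would be `datetime.now(timezone.utc)` in the handler. get_log_events signals the end of the stream by returning the same `nextForwardToken` that was sent, which is what ends the loop.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Tuple

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
WINDOW = timedelta(minutes=15)


def to_epoch_ms(moment: datetime) -> int:
    """Milliseconds since the Unix epoch, the unit get_log_events expects."""
    return (moment - EPOCH) // timedelta(milliseconds=1)


def log_window_ms(now: datetime, window: timedelta = WINDOW) -> Tuple[int, int]:
    """(startTime, endTime) covering the last `window` before `now`."""
    return to_epoch_ms(now - window), to_epoch_ms(now)


def fetch_recent_events(logs_client, log_group: str, log_stream: str,
                        now: datetime) -> List[dict]:
    """Collect every event of one stream from the last 15 minutes."""
    start, end = log_window_ms(now)
    events: List[dict] = []
    token = None
    while True:
        kwargs = {
            "logGroupName": log_group,
            "logStreamName": log_stream,
            "startTime": start,
            "endTime": end,
            "startFromHead": True,
        }
        if token is not None:
            kwargs["nextToken"] = token
        response = logs_client.get_log_events(**kwargs)
        events.extend(response["events"])
        # The end is reached when the same forward token comes back.
        if response["nextForwardToken"] == token:
            return events
        token = response["nextForwardToken"]
```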

With the first functionality done, let’s move on to assuming roles. To update the progress bar we need the percentage of the process that is done. The easiest way is to count the objects in the destination bucket, do the same for the source bucket, and compute destination objects / source objects as a percentage. This is a little more complicated in the migration scenario, where we have two copy processes and a waiting period after the source bucket is deleted. To make it clearer, the tables below show the progress bar percentage for each task step:

Copy scenario

| Percentage | Step |
|---|---|
| 0% | Task started |
| 5% | Destination bucket created successfully |
| 6%-99% | Copy step |
| 100% | Task finished |

Migration scenario

| Percentage | Step |
|---|---|
| 0% | Task started |
| 5% | Temporary bucket created successfully |
| 6%-49% | Copy step |
| 50% | Waiting for source bucket name DNS release |
| 55% | Destination bucket created successfully |
| 56%-99% | Copy step |
| 100% | Task finished |

As you can see, the migration scenario needs assumed roles at two points. The first one happens at the same step as in the copy scenario, when a bucket is created on the destination account. The second one appears only in the migration scenario and requires just one assumed role (because the temporary bucket is already on the destination account). And that’s it when it comes to the Lambda: two functionalities and many, many issues connected with them.
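The copy-step ranges from the tables above can be turned into a progress value with a small helper. This is a minimal sketch: the linear interpolation inside each range is an assumption (the tables only fix the ranges), and the names are hypothetical. Object counts come from summing the `KeyCount` field of list_objects_v2 pages.

```python
# Percentage ranges of each copy step, taken from the tables above.
COPY_RANGES = {
    ("copy", 1): (6, 99),
    ("migration", 1): (6, 49),
    ("migration", 2): (56, 99),
}


def copy_progress(scenario: str, copy_step: int, copied: int, total: int) -> int:
    """Map copied/total linearly onto the percentage range of a copy step."""
    low, high = COPY_RANGES[(scenario, copy_step)]
    ratio = 1.0 if total == 0 else min(copied / total, 1.0)
    return low + int(ratio * (high - low))


def count_objects(s3_client, bucket: str) -> int:
    """Count objects by summing KeyCount over list_objects_v2 pages."""
    paginator = s3_client.get_paginator("list_objects_v2")
    return sum(page.get("KeyCount", 0) for page in paginator.paginate(Bucket=bucket))
```

For the first migration copy step, for instance, `copy_progress("migration", 1, count_objects(dst, temp_bucket), count_objects(src, source_bucket))` would place the bar between 6% and 49%.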

End of story?

When you plan to make a tool as simple as possible, it is nearly guaranteed that you will have to jump over many tall walls on your way there :D But in the end, it’s really worth it. S3 migrator gives you a big advantage when you have to tackle S3 bucket issues, and even though you will face many obstacles, the experience gained during development is something that will stay with you forever. You may even get a chance to share your experience with others in some boring article or something ;) See you next time.

Have you ever wondered whether buckets can be moved in a reasonable time frame, while supervising the process along the way? Here it is: S3 migrator can solve your problem. It offers multithreading to minimize copying time, and a GUI that makes it easier to start a process and supervise all its steps while it lasts. It’s worth adding that the solution is fully serverless, so its architecture is as simple as possible and does not require much management.