CI/CD on AWS: What does AWS have to offer, and how can I extend it?

Native AWS services in the context of CI/CD and other tools for managing resource configuration.

Welcome to a series of articles demonstrating the use of native AWS services in CI/CD solutions. Using real-life examples, we want to share our knowledge and show how these services helped us build a serverless pipeline for managing EC2 configuration and, consequently, improve the work of our team and, more importantly, of our client.

- What AWS has to offer, and how can I extend it? In the first chapter, we give a short introduction to CI/CD and to the native AWS services that can be used in that context. We also mention other tools for managing resource configuration that can extend the capabilities of our pipelines.

- Client requirements, and how we overcame all challenges with CI/CD in AWS: In the second chapter, we examine a specific use case, i.e. a client’s project and the problems we wanted to resolve. You will also learn which solutions we used at every stage of the process and why we chose them.

- Solution Architecture and implementation of serverless pipelines in AWS: The last part contains detailed information about the architecture of the CI/CD pipeline that we implemented using AWS native services, plus all of its configuration steps. We wrap things up with conclusions after a few months of use and answer some questions: what was good, what could be better, and what we plan for the future.

Introduction

Why do we want to use CI/CD, and what does it even mean?

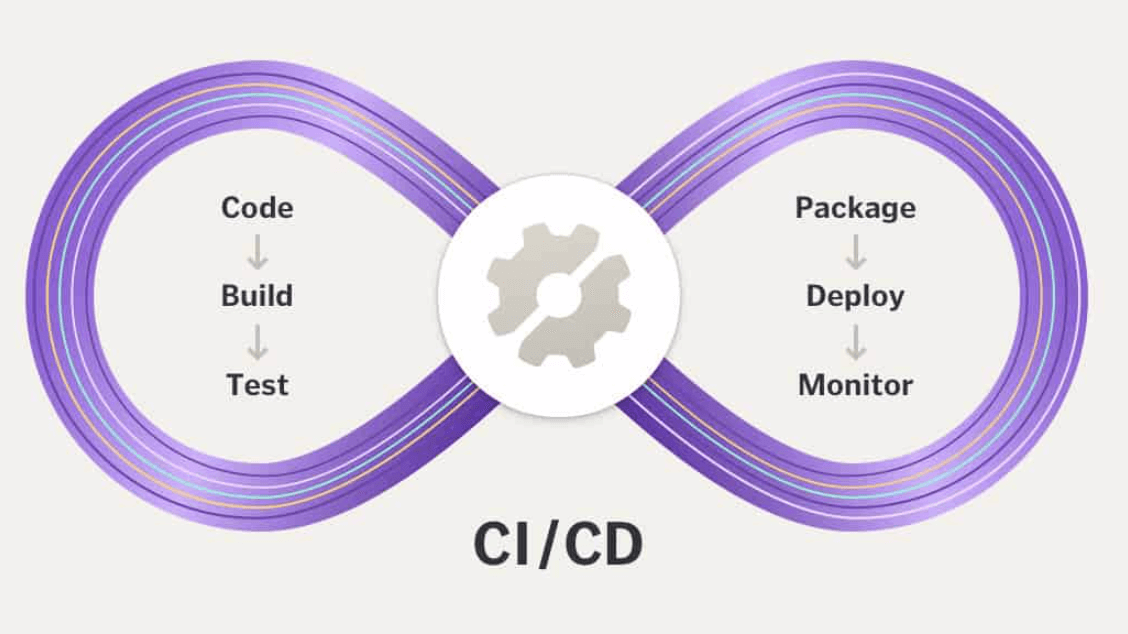

Continuous integration and continuous deployment (CI/CD) means that your changes are integrated and deployed automatically. It also means you no longer have to worry about when and how to build or run your code. With CI/CD, integration and deployment are managed by pipelines, all in the proper order, so we can focus on developing our code instead of looking after the whole process.

Continuous integration and continuous deployment concepts

Today, I will talk mostly about self-maintaining automation of EC2 instance configuration. No more deployment servers to manage; just focus on the code. I will also say more about AWS native services and show how they have helped my team and a client optimize workflows. In an era of avoiding vendor lock-in, these solutions are frequently overlooked, but in my opinion they can often bring more value than the more popular ones.

What does AWS have to offer?

Before we begin, let me remind you about AWS native services for CI/CD.

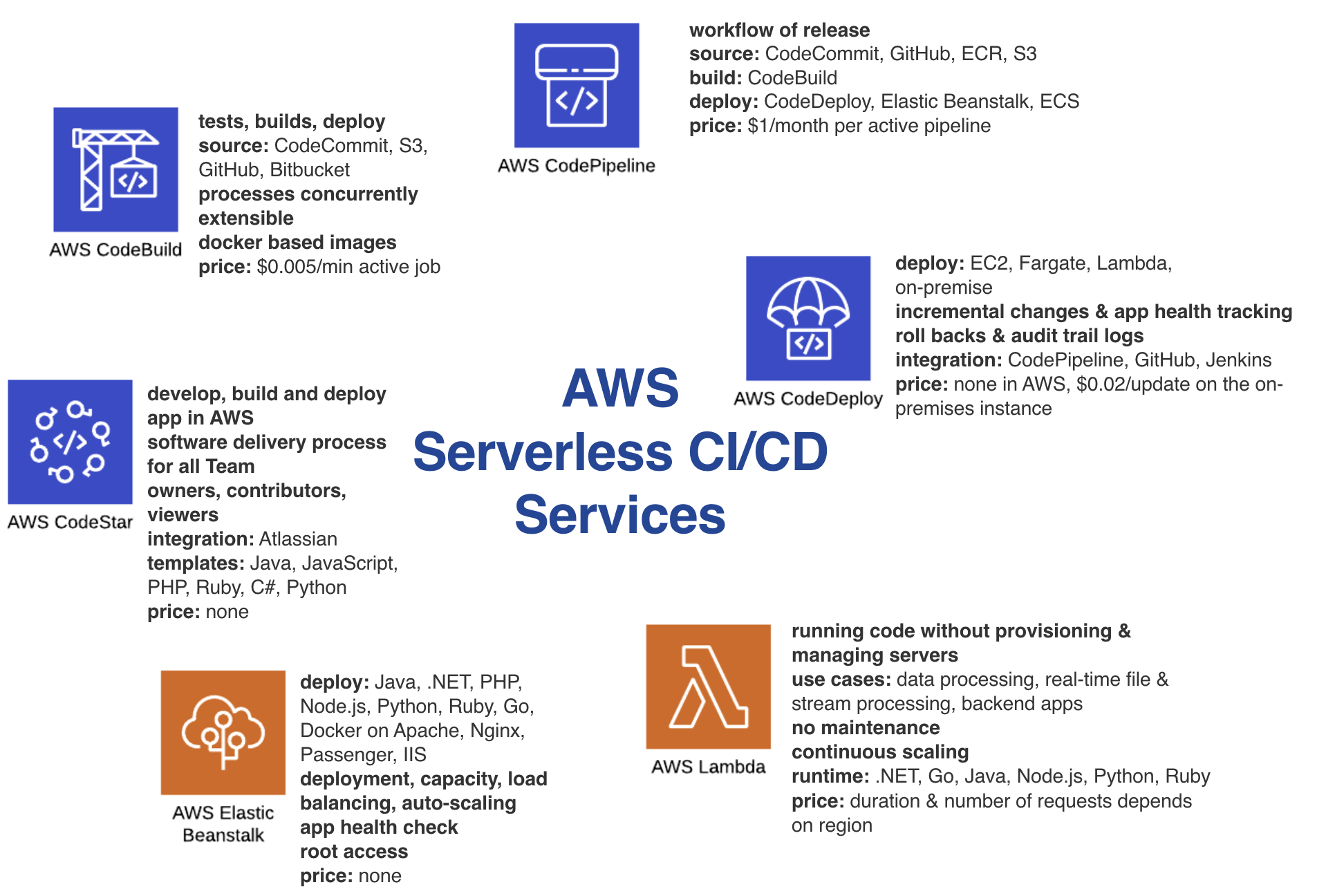

AWS native services used to build CI/CD — no server management required

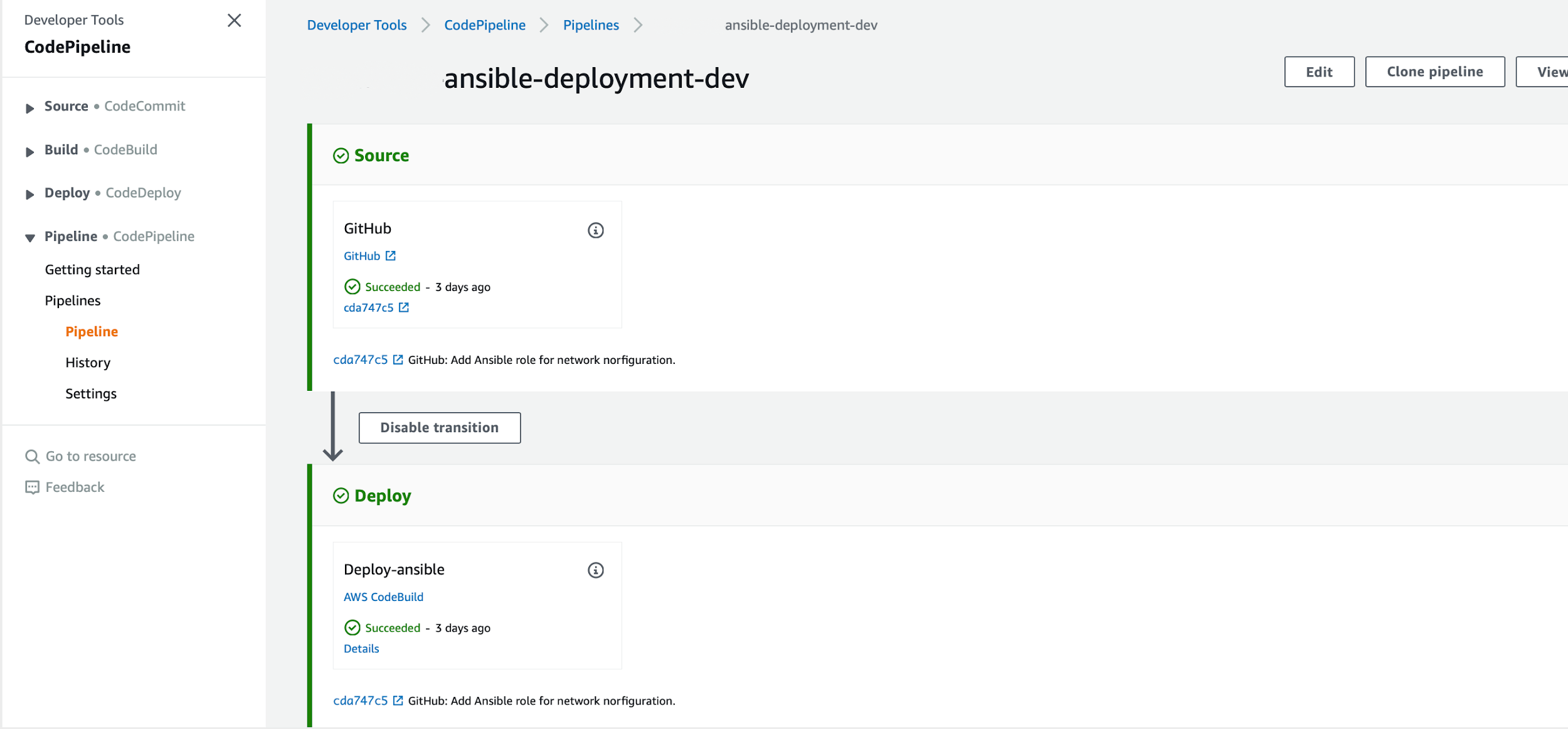

AWS CodePipeline

AWS CodePipeline dashboard

CodePipeline lets you define a release workflow and configure its stages and the transitions between them. For production environments, you can add approval steps.

At the time of writing, CodePipeline integrates out of the box with:

- Source: GitHub, ECR and S3

- Build: CodeBuild, Jenkins instance

- Test: CodeBuild, Device Farm, Jenkins instance, BlazeMeter, Ghost Inspector UI Testing, Runscope API Monitoring

- Deploy: CodeDeploy, ECS, EC2, Elastic Beanstalk, CloudFormation, Service Catalog

- Invoke: Lambda

You pay $1.00 per month for each active pipeline. A pipeline counts as active if it has existed for more than 30 days and had at least one code change run through it during that month.
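As a rough illustration of this pricing rule, the sketch below counts which pipelines would be billed in a given month. The `Pipeline` record and its fields are hypothetical, and the $1.00 rate is simply the figure quoted above, which may differ from current AWS pricing.

```python
from dataclasses import dataclass
from decimal import Decimal

# Monthly price per active pipeline in USD, as quoted in this article.
PRICE_PER_ACTIVE_PIPELINE = Decimal("1.00")


@dataclass
class Pipeline:
    """Hypothetical record describing one pipeline in an account."""
    name: str
    age_days: int            # days since the pipeline was created
    changes_this_month: int  # code changes run through it this month


def is_active(pipeline: Pipeline) -> bool:
    """Active means older than 30 days with at least one change this month."""
    return pipeline.age_days > 30 and pipeline.changes_this_month >= 1


def monthly_cost(pipelines: list[Pipeline]) -> Decimal:
    """Total monthly charge for the active pipelines in the list."""
    active = sum(1 for p in pipelines if is_active(p))
    return PRICE_PER_ACTIVE_PIPELINE * active


pipelines = [
    Pipeline("production", age_days=90, changes_this_month=4),
    Pipeline("brand-new", age_days=10, changes_this_month=7),
    Pipeline("dormant", age_days=200, changes_this_month=0),
]
print(monthly_cost(pipelines))
```

In this made-up account only the `production` pipeline satisfies both conditions, so it is the only one that would be charged.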

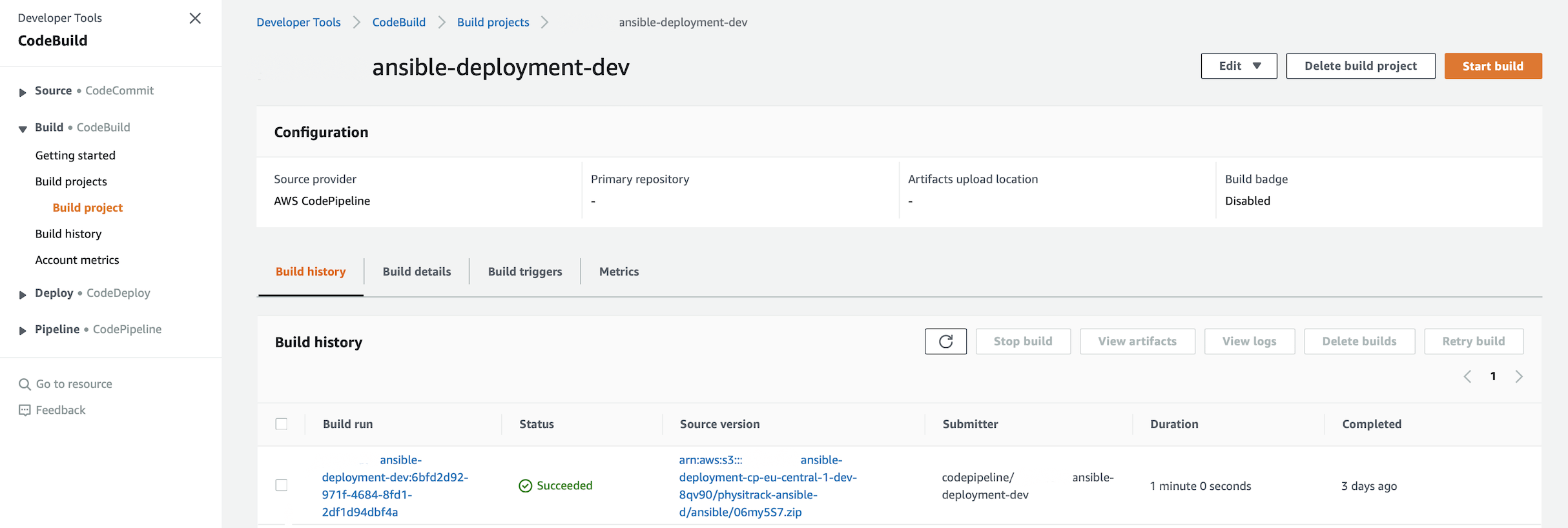

AWS CodeBuild

AWS CodeBuild dashboard

CodeBuild can compile source code and build images and packages, but it can also test and deploy changes to AWS and EC2 instances. It processes many jobs concurrently, with each job starting in a separate container. No more queues! The job timeout can be set between 5 minutes and 8 hours.

CodeBuild integrates with CodeCommit, S3, GitHub and Bitbucket as a source.

With this service, you pay for usage: $0.005 per minute for each active job.
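To get a feel for what per-minute billing means in practice, here is a small back-of-the-envelope sketch. The rate and the timeout limits are the figures quoted in this article; the function names and the example workload are assumptions made for illustration.

```python
from decimal import Decimal

# Figures quoted in this article; actual rates depend on AWS pricing.
RATE_PER_MINUTE = Decimal("0.005")  # USD per minute per active job
MIN_TIMEOUT_MINUTES = 5
MAX_TIMEOUT_MINUTES = 8 * 60


def check_timeout(minutes: int) -> int:
    """Return the timeout unchanged if it lies within the allowed range."""
    if not MIN_TIMEOUT_MINUTES <= minutes <= MAX_TIMEOUT_MINUTES:
        raise ValueError(
            f"timeout must be between {MIN_TIMEOUT_MINUTES} "
            f"and {MAX_TIMEOUT_MINUTES} minutes, got {minutes}"
        )
    return minutes


def estimate_monthly_cost(builds_per_day: int, avg_minutes: int, days: int = 30) -> Decimal:
    """Estimate the monthly bill for a steady stream of builds."""
    return RATE_PER_MINUTE * builds_per_day * avg_minutes * days


# Hypothetical workload: 20 builds a day, 6 minutes each.
print(estimate_monthly_cost(builds_per_day=20, avg_minutes=6))
```

Because concurrent jobs run in separate containers, the estimate depends only on total build minutes, not on how many builds overlap.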

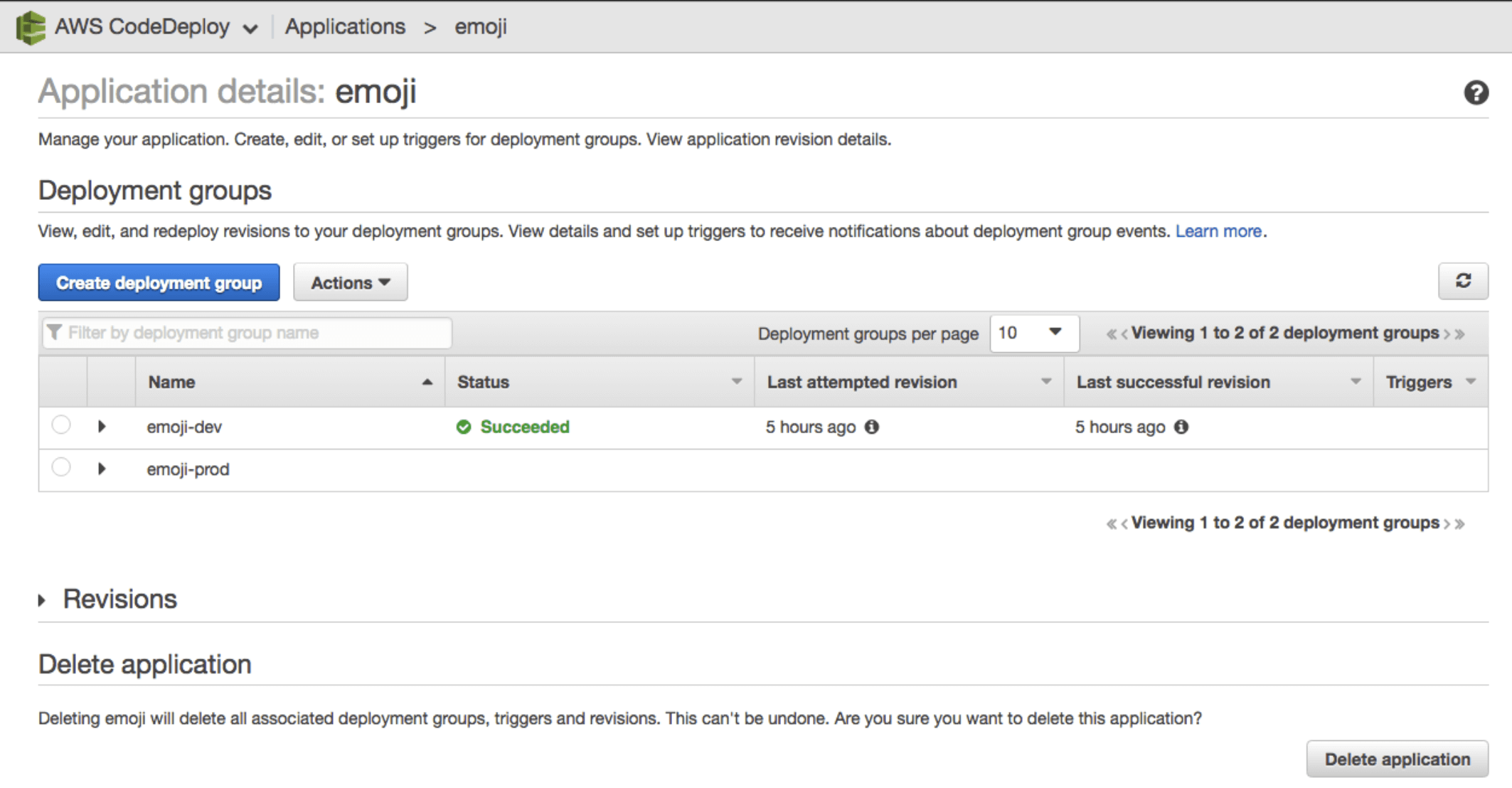

AWS CodeDeploy

AWS CodeDeploy dashboard

CodeDeploy is used to deploy applications, for example to instances in AWS and to on-premises servers. It deploys changes incrementally. In case of errors, it can automatically roll back and restore the application to the previous version, minimizing downtime. CodeDeploy tracks every deployment and gives you insight into the history and status of every application. It is integrated with CodePipeline, EC2 Auto Scaling and ELB, but also with GitHub, Jenkins and on-premises instances. With it, you can implement blue/green and canary deployments.

CodeDeploy is completely free for deployments to AWS. For on-premises deployments, you pay $0.02 for every instance update.

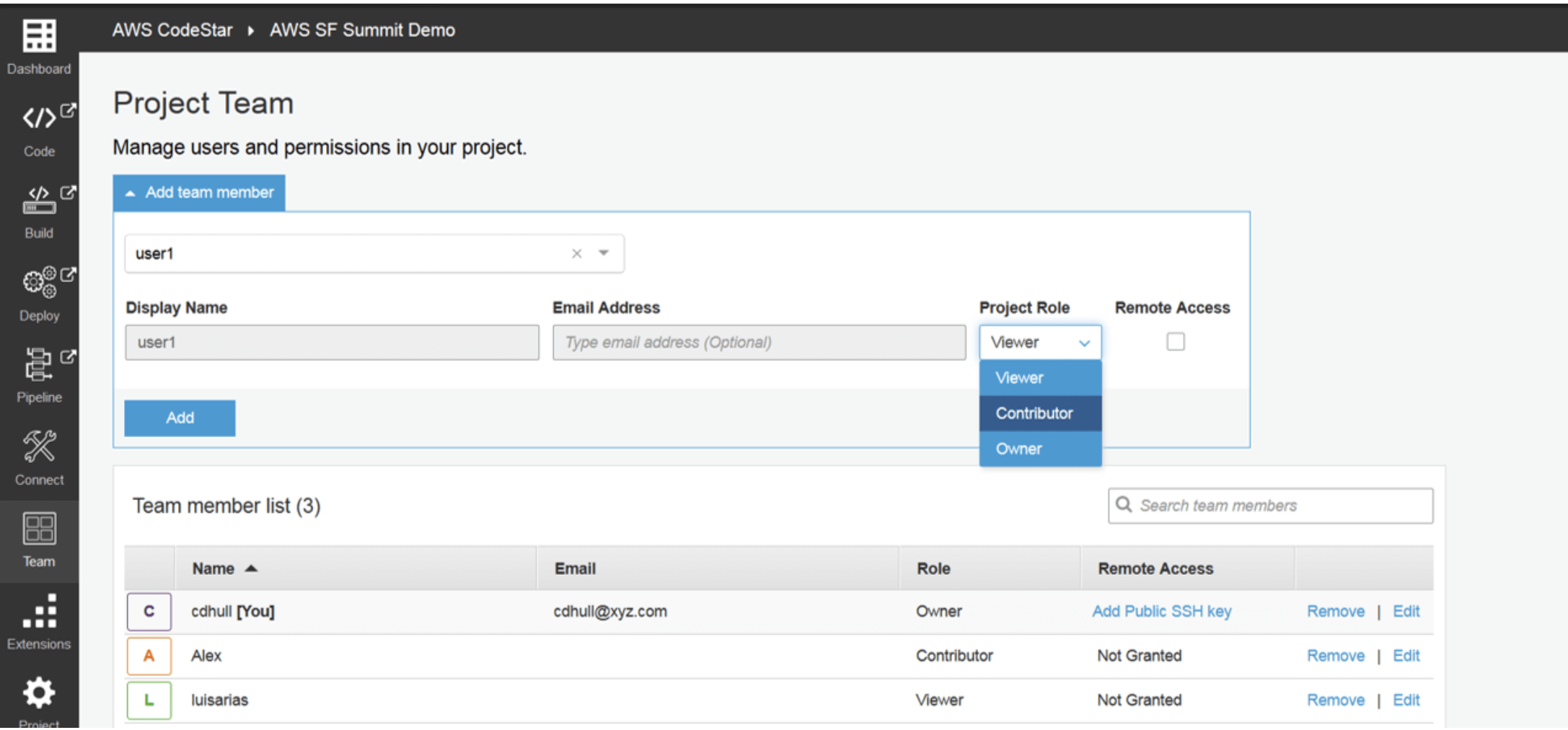

AWS CodeStar

AWS CodeStar dashboard

CodeStar is designed to facilitate the work of the development team, from the moment a feature enters the backlog until its deployment. It lets you monitor progress and errors through integration with Atlassian Jira. CodeStar provides predefined templates for websites, applications and web services such as Alexa skills. Templates are available for Java, JavaScript, PHP, Ruby, C# and Python.

The service itself is free; you pay only for the resources you use.

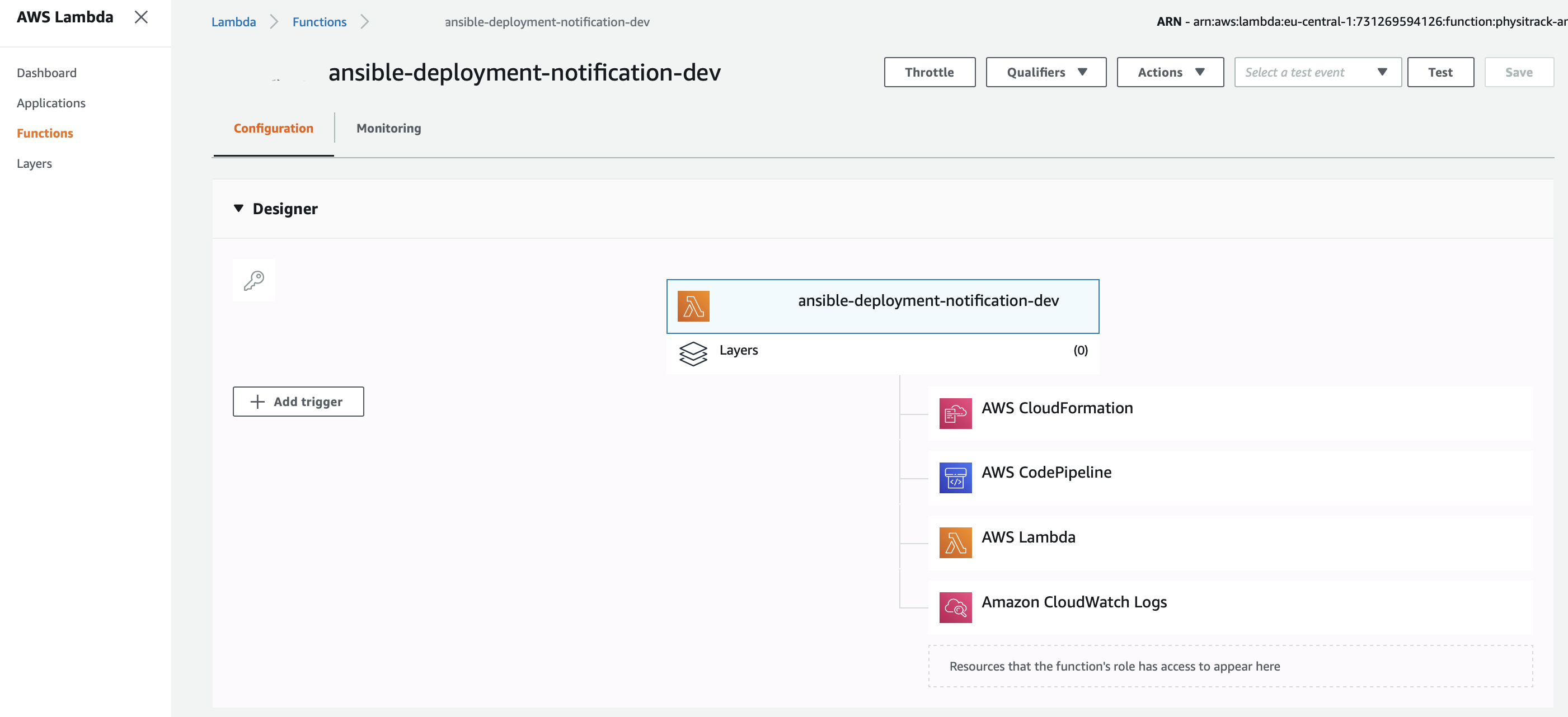

AWS Lambda

AWS Lambda dashboard

Lambda is a seemingly inconspicuous service, but it is very well integrated with the rest of the AWS services. It can be used to implement deployments in AWS, as well as applications, in various programming languages. By combining it with Step Functions, you can create workflows. But that’s not all. Lambda has plenty of use cases, e.g. data processing, real-time file and stream processing, and backend applications. It scales automatically with the number of invocations. Lambda functions can be written in .NET, Go, Java, Node.js, Python and Ruby.

You pay a very low price based on execution duration and the number of requests; the exact rates depend on the region.
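For readers who have not written a Lambda function before, a minimal Python handler might look like the sketch below. The `(event, context)` signature is the form the Lambda Python runtime calls; the `instance_ids` field and the "queued" result are hypothetical placeholders, not part of any AWS API.

```python
import json


def lambda_handler(event, context):
    """Entry point in the form the Lambda Python runtime calls."""
    # "instance_ids" is a hypothetical field chosen for this example.
    instance_ids = event.get("instance_ids", [])
    if not instance_ids:
        return {
            "statusCode": 400,
            "body": json.dumps({"error": "no instance_ids given"}),
        }

    # Placeholder for real work, e.g. triggering configuration of each instance.
    results = {instance_id: "queued" for instance_id in instance_ids}
    return {"statusCode": 200, "body": json.dumps(results)}


# Local invocation for a quick check; in AWS the runtime supplies both arguments.
response = lambda_handler({"instance_ids": ["i-0123456789abcdef0"]}, None)
print(response["statusCode"], response["body"])
```

Since the handler is an ordinary function, it can be exercised locally like this before it is ever deployed.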

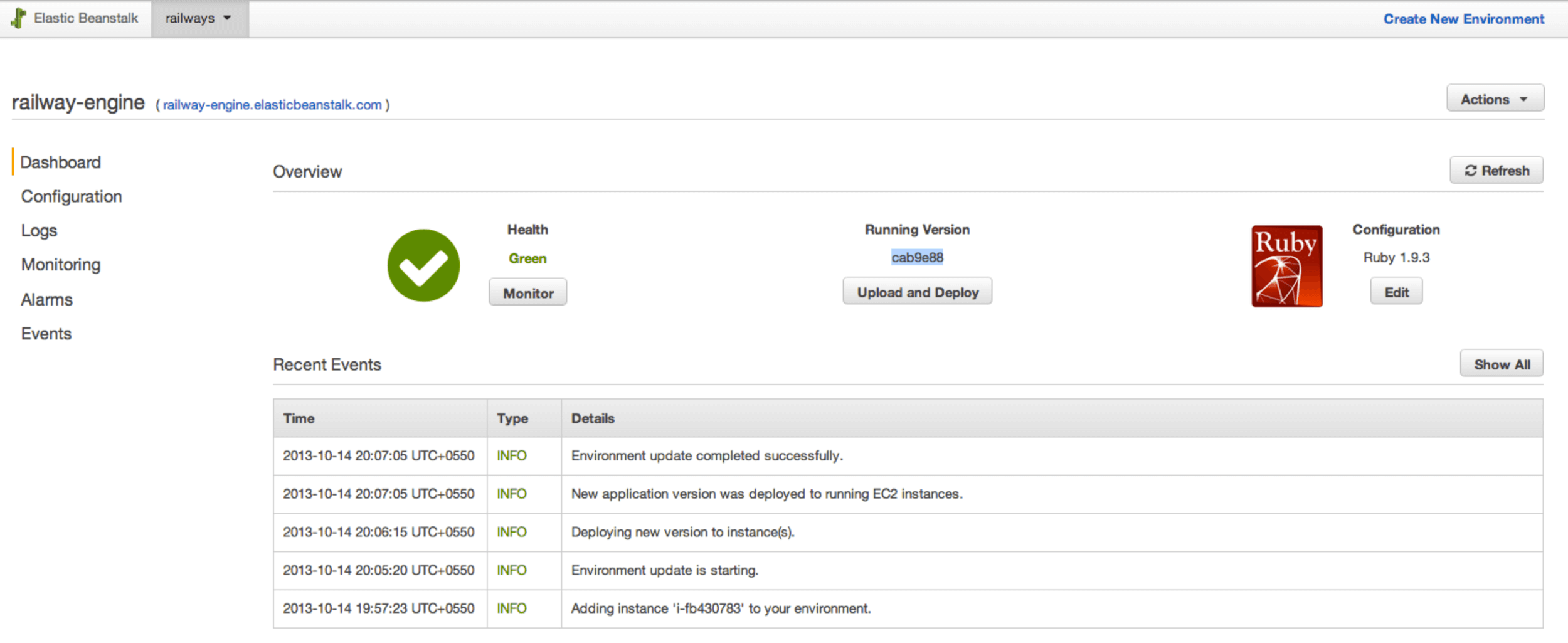

AWS Elastic Beanstalk

AWS Elastic Beanstalk dashboard

Elastic Beanstalk facilitates the deployment of web applications and services developed with Java, .NET, PHP, Node.js, Python, Ruby, Go, and Docker on familiar servers, such as Apache, Nginx, Passenger, and IIS. You can simply upload your code and Elastic Beanstalk automatically handles the deployment, from capacity provisioning, load balancing and auto-scaling, to application health monitoring. At the same time, you retain full control over the AWS resources powering your application and can access the underlying resources at any time.

Elastic Beanstalk itself costs nothing; you pay only for the resources needed to store and run your applications.

With all of these services, you run code without provisioning or managing servers. You don’t need to deal with the operational layer; providing the newest and most secure versions is AWS’s responsibility. That’s what serverless really means.

Only if you use your own predefined Docker images does the packaging remain your responsibility. But you already use pipelines for that, right?

How can I extend it?

Currently, there are a lot of tools on the market that facilitate infrastructure, operating system or application management and are defined as code. Some of them also have UI versions. I will say a few words about the most popular ones and explain what to consider when choosing between them.

Examples of configuration management tools (AWS CloudFormation, Terraform, Ansible, Chef, Puppet, AWS CLI, Bash)

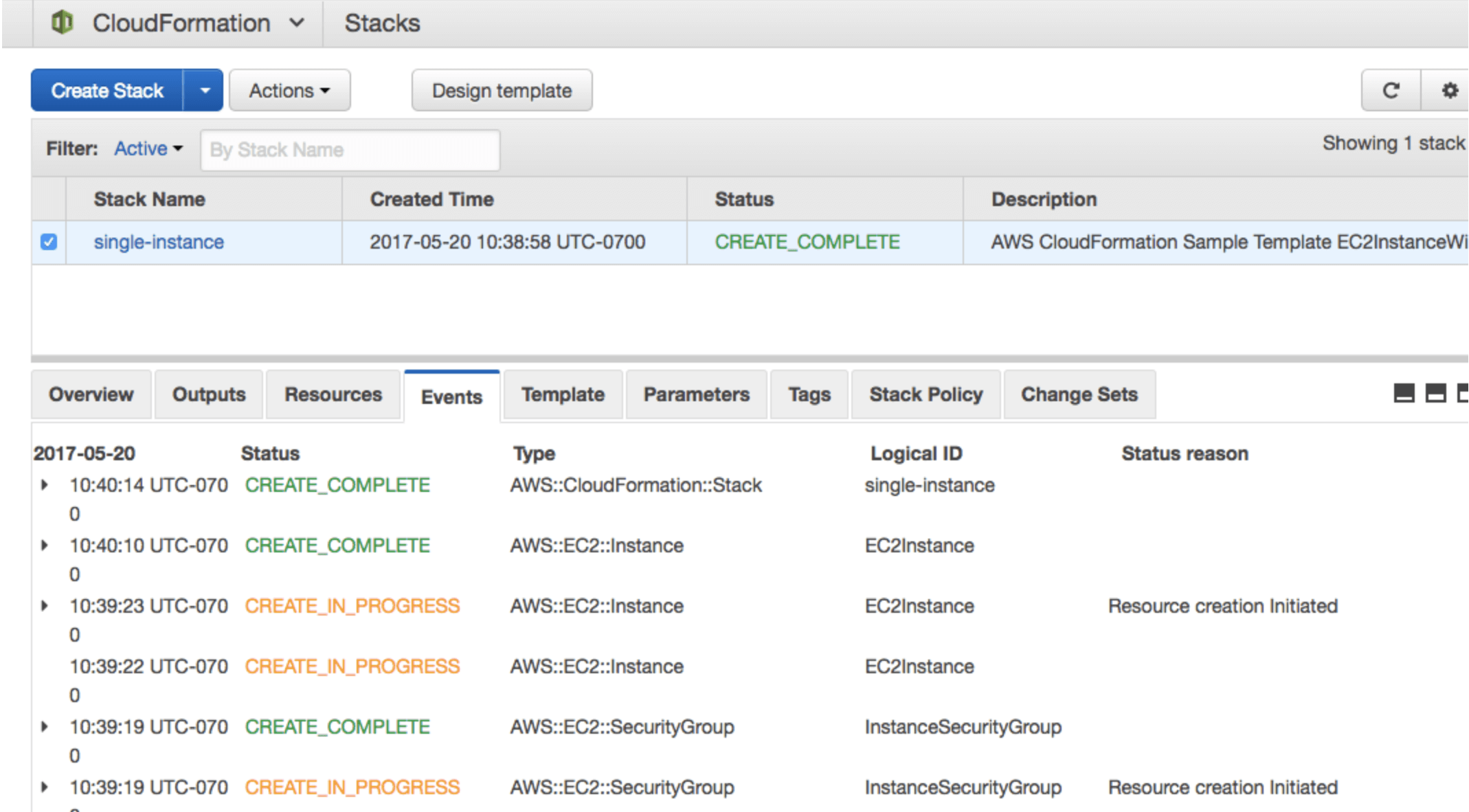

AWS CloudFormation

AWS CloudFormation stack dashboard

CloudFormation is a native AWS service. With it, you can divide infrastructure into stacks, for example separate stacks for ALB, VPC and RDS. You define them using templates. After provisioning, you can see all created resources in the Resources inventory, so you know exactly what you’ve got. Moreover, if a deployment fails, CloudFormation automatically rolls back to the previous version to avoid downtime. It is meant only for managing AWS resources and works regionally, which means you need stacks in every region you use. It works pretty well with simple stacks, but with a deeply nested stack architecture you’ll have a hard time getting detailed information about changes.

If you are looking for a simple, AWS-native solution and don’t want to be bothered with complicated configuration, CloudFormation will be a good fit for you.
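To give an idea of what a CloudFormation template contains, the sketch below builds a minimal one as a Python dictionary and prints it as JSON. The logical ID `ArtifactBucket`, the description and the choice of a versioned S3 bucket are assumptions made for the example; a real stack would describe whatever resources your project needs.

```python
import json


def build_template(bucket_logical_id: str = "ArtifactBucket") -> dict:
    """Build a minimal template describing one versioned S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Example stack with a single S3 bucket",
        "Resources": {
            # The logical ID is a hypothetical name chosen for this example.
            bucket_logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {"Status": "Enabled"},
                },
            }
        },
        "Outputs": {
            # Ref on an S3 bucket resolves to the bucket name.
            "BucketName": {"Value": {"Ref": bucket_logical_id}},
        },
    }


template_json = json.dumps(build_template(), indent=2)
print(template_json)
```

Everything listed under `Resources` is what later shows up in the stack's resource inventory after provisioning.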

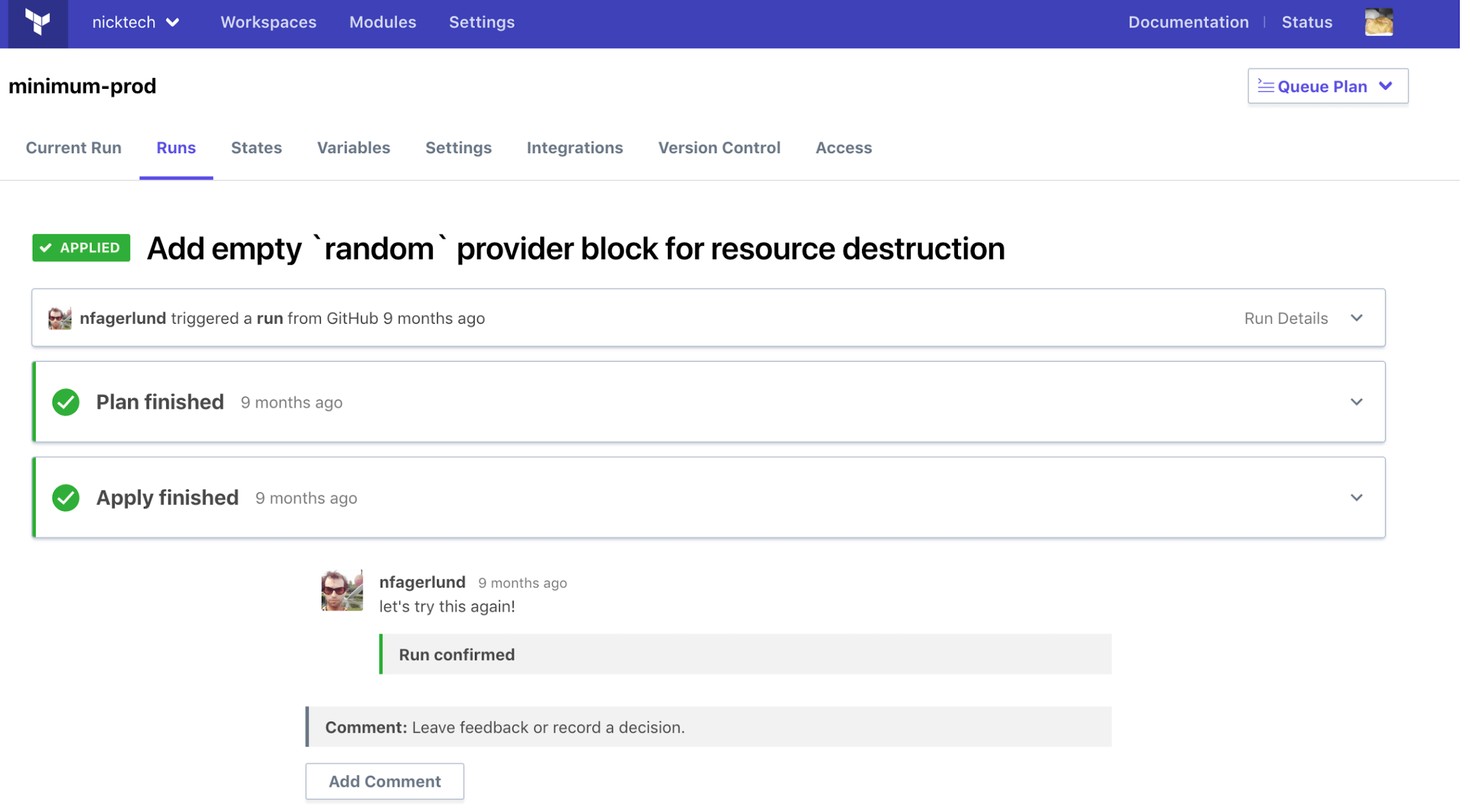

Hashicorp Terraform

Hashicorp Terraform Cloud dashboard

Terraform is an open-source, stateful tool. With it, you declare what you want to achieve. The plan shows which resources will be created, and after the changes are applied, the new state is written to a JSON state file. Terraform shows detailed information about changes and manages the order of execution. It also shows dependencies between resources, works with various providers (cloud and on-premises), and enables parameterization of almost every argument, which makes it very modular. With proper scripting, you can deploy changes across AWS accounts and regions. Terraform doesn’t handle interruptions and errors during deployment very well, so testing every single change is crucial here. You can create an account in Terraform Cloud to manage all workspaces and states from one place.

If you are looking for a free, actively developed solution that works nicely with different environments, don’t forget to check out Terraform.
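The idea of a plan computed against stored state can be illustrated with a toy comparison in Python. This is a deliberately simplified sketch of the concept, not Terraform's actual algorithm or state format, and the resource names and attributes are invented for the example.

```python
import json


def plan(current_state: dict, desired: dict) -> dict:
    """Compare recorded state with the desired configuration."""
    return {
        "create": sorted(k for k in desired if k not in current_state),
        "update": sorted(
            k for k in desired
            if k in current_state and current_state[k] != desired[k]
        ),
        "delete": sorted(k for k in current_state if k not in desired),
    }


# Hypothetical recorded state and desired configuration.
state = {
    "aws_instance.web": {"instance_type": "t3.micro"},
    "aws_s3_bucket.logs": {"versioning": False},
}
desired = {
    "aws_instance.web": {"instance_type": "t3.small"},
    "aws_security_group.web": {"ingress_port": 443},
}

changes = plan(state, desired)
print(json.dumps(changes, indent=2))

# After a successful apply, the desired configuration becomes the new state.
new_state_json = json.dumps(desired, indent=2, sort_keys=True)
```

Because the comparison is made against recorded state, a resource that disappears from the configuration shows up as a deletion rather than being silently ignored.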

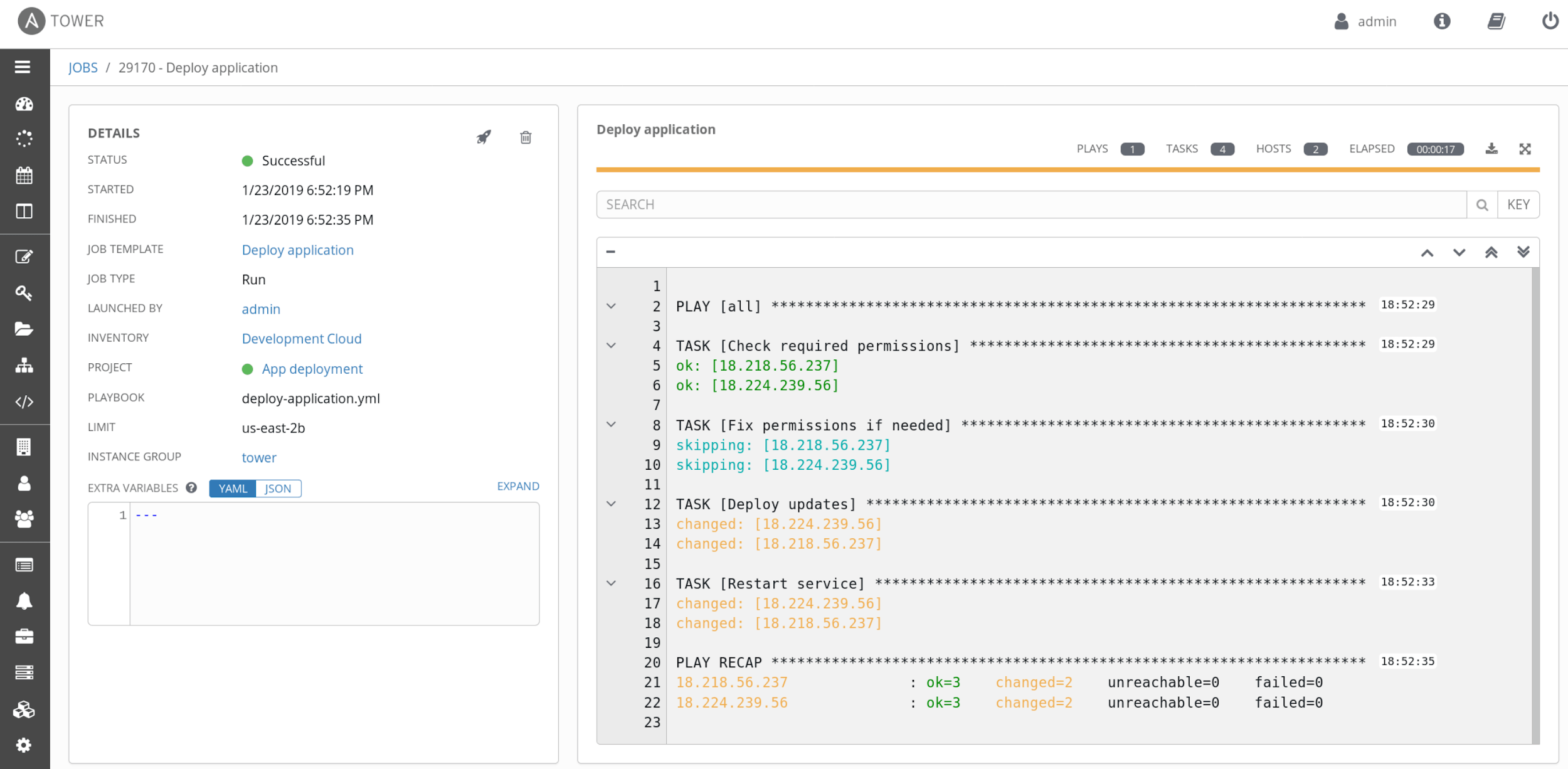

Ansible

Ansible Tower dashboard

Ansible is an open-source solution. It is based on Python, and Python developers typically feel more at home with it (no need to learn a DSL). It also allows you to define infrastructure, but due to its stateless nature it works better as an operating system management or application configuration tool. Unlike with declarative solutions, when you use Ansible you must define which tasks to perform and in what order. For example, you can define the steps that need to be performed during network configuration. You can use ordinary shell commands or predefined modules, and you can even write your own module. Ansible works with various providers (cloud and on-premises) and communicates with instances over SSH (no agents). If your configuration needs to be managed by a whole team, the Ansible Tower web console can make it easier.

If you want to automate OS-level or application-level actions (managing storage volumes, packages, services, processes, and so on) for many providers, and you don’t want to rely on simple shell scripts anymore, give Ansible a try.

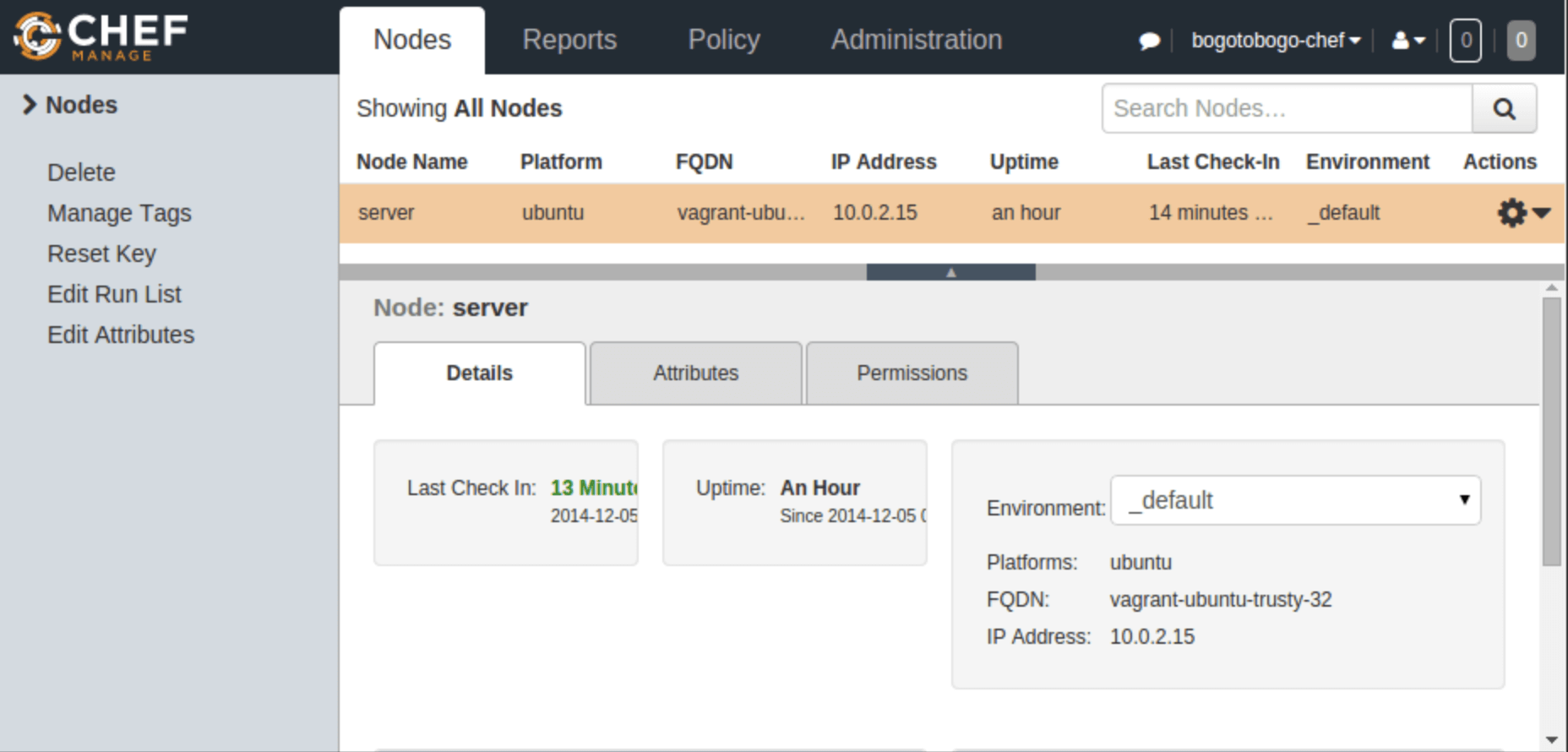

Chef

Chef dashboard

This agent-based platform uses Ruby as its reference language for creating cookbooks and defining recipes. So, if you don’t have much development experience, you’ll likely have trouble learning it. Chef is used for infrastructure configuration management, automating compliance assurance, and automating modern applications in microservice architectures.

The AWS OpsWorks service is based on Chef and helps automate deployments into AWS, but Chef also works with other providers. Using the knife utility, it can be easily integrated with any cloud technology. It is a strong candidate for any organization that wishes to distribute its infrastructure across a multicloud environment (it works efficiently for AWS, at least).

If you need a nicely integrated solution for many providers, Chef is a good choice. But remember that without people experienced in Ruby, and without time set aside for learning, you’ll struggle to produce something worthwhile straight away.

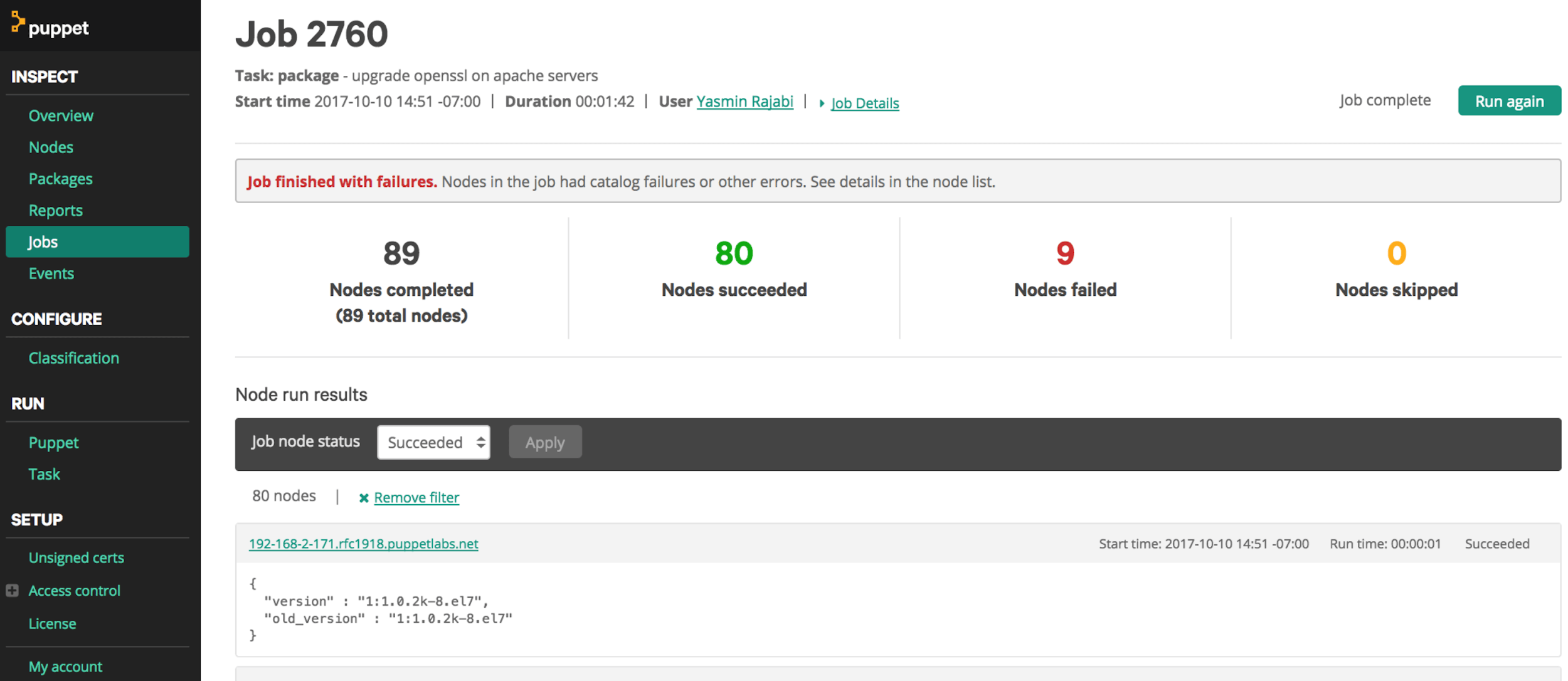

Puppet

Puppet dashboard

Puppet manages configurations on your nodes, using agents. It requires a Puppet master server to fetch configuration catalogs from. It has its own language, uses a declarative, model-based approach to automation of the infrastructure and enforces system configuration.

Puppet supports idempotency, which is one of its key strengths. Similarly to Chef, with Puppet you can safely run the same set of configuration multiple times on the same machine. Puppet checks the current state of the target machine and makes changes only when that state differs from the declared configuration. This really helps to keep a machine updated for years, so you don’t have to rebuild it with every configuration change.

If you need to keep your machine updated for years rather than rebuild it repeatedly, you can use crontabs instead of an SSH connection to apply configuration changes, and you have time to learn how Puppet works, don’t cross this tool out.
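Idempotency is easier to see in a few lines of code than in a definition. The sketch below is plain Python, not Puppet's own language, and the `ensure_line` helper and the sample settings are hypothetical; it only illustrates the "check current state, change only if needed" behaviour described above.

```python
def ensure_line(lines: list[str], wanted: str) -> tuple[list[str], bool]:
    """Make sure `wanted` is present; report whether anything changed."""
    if wanted in lines:
        # Current state already matches the desired state: do nothing.
        return lines, False
    return lines + [wanted], True


config = ["PermitRootLogin no"]

# First run: the line is missing, so it is added.
config, changed_first = ensure_line(config, "PasswordAuthentication no")

# Second run with the same input: nothing is left to do.
config, changed_second = ensure_line(config, "PasswordAuthentication no")

print(config, changed_first, changed_second)
```

Running the same step any number of times leaves the configuration in the same final state, which is what makes repeated, scheduled runs safe.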

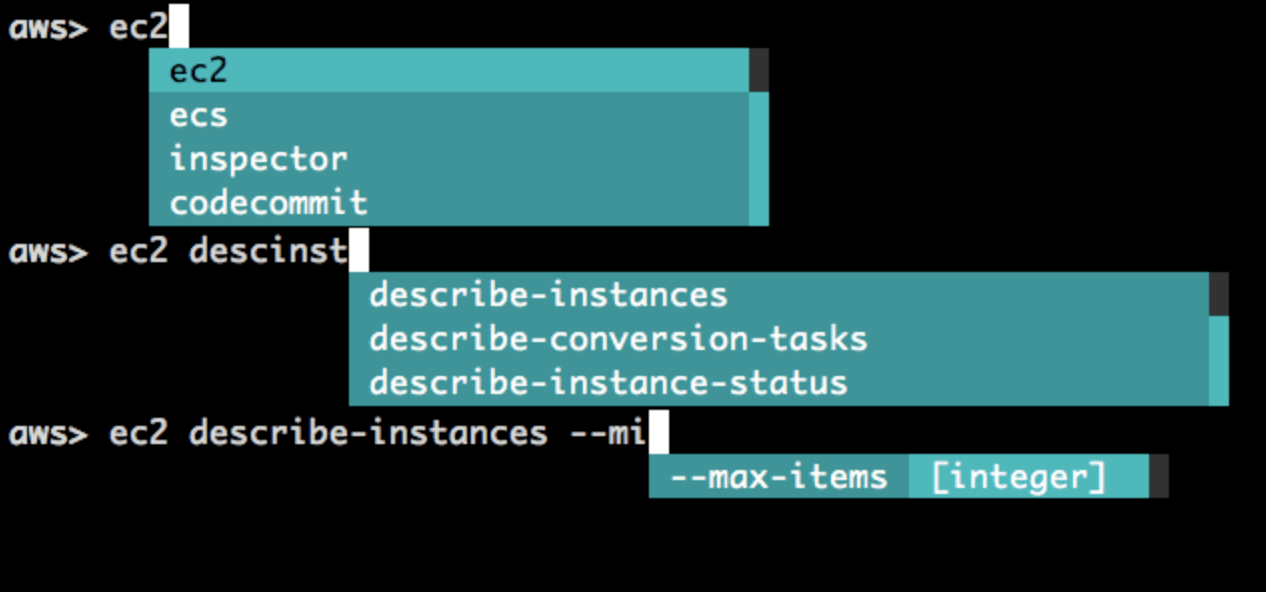

Bash & AWS CLI

AWS CLI usage

Bash is a shell language, and the AWS CLI is a command-line tool used to control multiple AWS services and automate them through scripts. For example, on macOS you can use an Oh My Zsh plugin that suggests command syntax. You can combine them with other configuration management tools that allow the use of the Bash shell, such as CloudFormation, Terraform or Ansible, or with other AWS services, to get better access to resources or to use them ad hoc.

Sometimes you need to get information about existing resources using complex filters that other, more limited tools don’t provide, or you just want to perform an action directly from a script or the command line. In such cases, you’ll be happy with Bash or the AWS CLI.
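As an illustration of such a filter, the sketch below selects instances by state and tag from a response shaped like the JSON that `aws ec2 describe-instances` returns. It is written in Python rather than Bash to keep the examples in this article in one language, and the sample data and the `find_instances` helper are made up for the example.

```python
def find_instances(response: dict, state: str, tag_key: str, tag_value: str) -> list[str]:
    """Return IDs of instances in the given state that carry the given tag."""
    matches = []
    for reservation in response.get("Reservations", []):
        for instance in reservation.get("Instances", []):
            # Flatten the list of {"Key": ..., "Value": ...} pairs into a dict.
            tags = {t["Key"]: t["Value"] for t in instance.get("Tags", [])}
            if instance["State"]["Name"] == state and tags.get(tag_key) == tag_value:
                matches.append(instance["InstanceId"])
    return matches


# Made-up sample data following the describe-instances response layout.
sample = {
    "Reservations": [
        {"Instances": [
            {"InstanceId": "i-0aaa", "State": {"Name": "running"},
             "Tags": [{"Key": "Env", "Value": "prod"}]},
            {"InstanceId": "i-0bbb", "State": {"Name": "stopped"},
             "Tags": [{"Key": "Env", "Value": "prod"}]},
        ]},
        {"Instances": [
            {"InstanceId": "i-0ccc", "State": {"Name": "running"},
             "Tags": [{"Key": "Env", "Value": "dev"}]},
        ]},
    ]
}

print(find_instances(sample, "running", "Env", "prod"))
```

The same kind of selection can be done directly on the command line; the point here is only to show how two conditions combine over the nested response.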

Summary

Of course, there’s a lot more to talk about, but the main point of this article was to show you the many different options to choose from. Even if the native AWS services do not seem sufficient to achieve your goal, you can always add other tools and still keep your solutions simple, reducing the amount of work and time needed to build them.

In part 2, “CI/CD on AWS - Client requirements, and how we overcame all challenges with CI/CD in AWS”, we will talk more about the real use cases and problems we had to solve. We will also show the implemented solution and the AWS native services used to build serverless pipelines for EC2 configuration management.

Interested? See you in the next chapter.