Cleaning up AWS services with automated workflows

Examining a feasible approach to cloud waste by getting rid of stale & empty S3 buckets created en masse during tests.


In this article I’ll present how we solved a problem with empty S3 buckets which kept getting created in large quantities during some tests and were then forgotten. I don’t like the idea of leaving unnecessary services in the environment: after some time, they always prompt the same questions. Why the hell do we have those buckets? Is anybody using them?

Empty S3 buckets don’t generate costs and are merely a potential nuisance, so in this case they serve purely as an example, a proof of concept if you will. They can represent any other AWS service that is potentially stale and useless.

This case is based on my most recent experience with provisioning Lambda functions via CloudFormation. Future articles will explore using the Serverless Framework for part of the workload instead.

So, let’s jump into AWS…

Forming a stack for… cleaning up

With a wide variety of AWS solutions at my disposal, I’ve decided to stick with KISS (keep it simple, stupid). Therefore, the following players have been chosen:

- AWS Lambda

- Amazon DynamoDB

- CloudFormation

- Step Functions

- CloudWatch Events

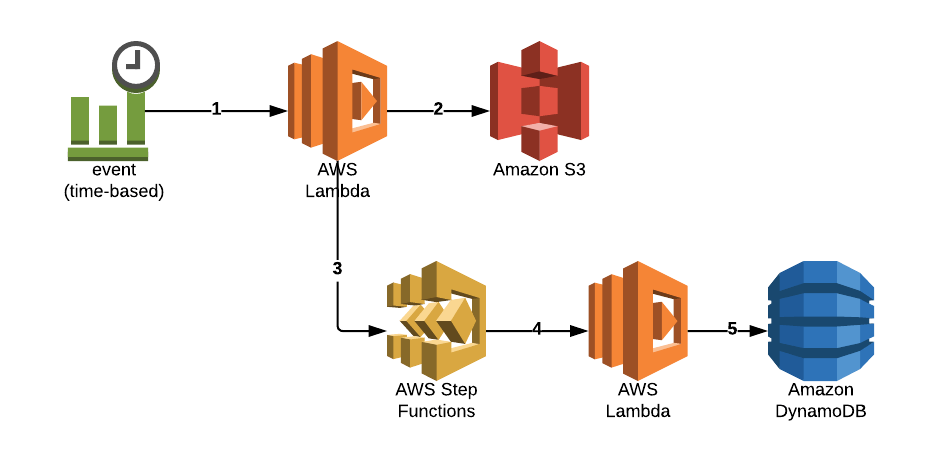

- A CloudWatch event is emitted periodically (cron job) and invokes a Lambda function which scans the given environment for empty buckets and deletes them.

- The result of the above, a count of all deleted buckets along with their names, is forwarded to a Step Functions workflow. The payload has a simple shape:

```python
responseData = {'NumDeletedbuckets': count, 'DeletedBuckets': deleted}
```

- Our Step Functions workflow is then invoked. Based on its input, it has two choices:

  - If NumDeletedbuckets is 0, a “NO CHANGE” response is sent.

  - Otherwise, it invokes another function to store metadata about the deleted buckets in a DynamoDB table for later processing. This metadata could include:

    - bucket name,

    - date of deletion,

    - owner name,

    - random hash (for further actions).


Customize it however you want. In similar workflows, I sometimes use SNS to push notifications whenever I want to be informed about an action.
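If you want such a notification, a minimal sketch could look like the one below. It assumes an SNS topic already exists; the topic ARN and the notify_deleted_buckets name are hypothetical. The function accepts any object with an SNS-style publish() method, so you can pass boto3.client('sns') in production and a stub in tests.

```python
import json


def notify_deleted_buckets(sns_client, topic_arn, payload):
    """Publish a short summary of the cleanup result to an SNS topic.

    Hypothetical helper: `payload` is the cleaner's output, e.g.
    {'NumDeletedbuckets': 2, 'DeletedBuckets': ['a', 'b']}.
    Nothing is published (None is returned) when no bucket was deleted.
    """
    count = payload.get("NumDeletedbuckets", 0)
    if count == 0:
        return None
    # SNS subjects must stay under 100 characters, so keep it short
    subject = "S3 cleaner: {} empty bucket(s) deleted".format(count)
    return sns_client.publish(
        TopicArn=topic_arn,
        Subject=subject,
        Message=json.dumps(payload, indent=2),
    )
```

Usage would be along the lines of notify_deleted_buckets(boto3.client("sns"), topic_arn, result), where topic_arn points at your own topic.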

CloudFormation stacks and modules

I don’t feel comfortable with the AWS Management Console; I prefer using CloudFormation, which is essentially a description language for your AWS resources (JSON or YAML). It gives you an easy way to define your infrastructure as centralized code that is easy to maintain and modify.

While CloudFormation is not a programming language, I recommend approaching it with development best practices regardless. Dividing your code into small pieces/modules not only keeps everything orderly and well organized, it also makes it easier to reuse your modules across different CloudFormation templates.

In the Resources section of the CloudFormation template, I’ve used the TemplateURL property to point to my module file. Generally, the rule is simple: you combine all necessary components inside a small module and then put it into a single stack, or into several stacks if you’d like to deploy multiple instances of the same chunk of your infrastructure.

```yaml
Conditions:
  IfVirginia: !Equals
    - !Ref AWS::Region
    - us-east-1

Resources:
  LambdaStack:
    Type: AWS::CloudFormation::Stack
    Properties:
      Parameters:
        S3Bucket: !Ref ResourcesBucket
        ParentStackName: !Ref ParentStackName
        StepFunctionsArn: !GetAtt MyStateMachineStack.Outputs.StepFunctionsName
        S3Key: lambda/functions/s3_cleaner/s3_cleaner.zip
      TemplateURL: !If [IfVirginia, !Sub 'https://s3.amazonaws.com/${ResourcesBucket}/modules/aws/lambda_s3_cleaner.yml', !Sub 'https://s3-${AWS::Region}.amazonaws.com/${ResourcesBucket}/modules/aws/lambda_s3_cleaner.yml']
      TimeoutInMinutes: 5
    DependsOn:
      - MyStateMachineStack

  DynamoDbStack:
    Type: AWS::CloudFormation::Stack
    Properties:
      Parameters:
        ParentStackName: !Ref ParentStackName
        S3Bucket: !Ref ResourcesBucket
        S3Key: lambda/functions/dynamodb/dynamodb.zip
        DynamodbTable: !Ref TableName
        AccountId: !Ref AccountId
      TemplateURL: !If [IfVirginia, !Sub 'https://s3.amazonaws.com/${ResourcesBucket}/modules/aws/lambda_dynamodb.yml', !Sub 'https://s3-${AWS::Region}.amazonaws.com/${ResourcesBucket}/modules/aws/lambda_dynamodb.yml']
      TimeoutInMinutes: 5

  MyStateMachineStack:
    Type: AWS::CloudFormation::Stack
    Properties:
      Parameters:
        LambdaArn: !GetAtt DynamoDbStack.Outputs.DynamodbFunctionArn
        Environment: !Ref Environment
      TemplateURL: !If [IfVirginia, !Sub 'https://s3.amazonaws.com/${ResourcesBucket}/modules/aws/step_functions.yml', !Sub 'https://s3-${AWS::Region}.amazonaws.com/${ResourcesBucket}/modules/aws/step_functions.yml']
      TimeoutInMinutes: 5
```

> [!NOTE]
> The !GetAtt MyStateMachineStack.Outputs.StepFunctionsName statement references an output (the Step Functions ARN) of another CloudFormation stack; in this example, a stack which creates a short Step Functions workflow that I’ll describe later. MyStateMachineStack has a similar reference to an output of DynamoDbStack (a Lambda function ARN).
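The IfVirginia condition in the parent template only decides which S3 URL style each TemplateURL uses: us-east-1 gets the global s3.amazonaws.com host, every other region the dash-style s3-&lt;region&gt; host. As a sketch for checking those URLs outside CloudFormation (module_template_url is a hypothetical helper, not part of the stack), the same logic in Python is:

```python
def module_template_url(bucket, region, module):
    """Mirror the IfVirginia / !If TemplateURL logic of the parent template.

    Hypothetical helper; `module` is a file name such as 'lambda_s3_cleaner.yml'.
    """
    key = "modules/aws/" + module
    if region == "us-east-1":
        # us-east-1: global endpoint without a region segment
        return "https://s3.amazonaws.com/{}/{}".format(bucket, key)
    # Any other region: dash-style regional endpoint, exactly as in the template
    return "https://s3-{}.amazonaws.com/{}/{}".format(region, bucket, key)
```

Keep in mind that dash-style S3 endpoints are a legacy form that only older regions support; the s3.&lt;region&gt;.amazonaws.com form is the safer choice if you deploy to newer regions.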

Let’s now look at what the CloudFormation modules look like. Our first one is a Lambda function whose purpose is to delete the empty buckets described above:

```yaml
AWSTemplateFormatVersion: 2010-09-09
Description: Lambda for S3 bucket cleaner
Parameters:
  ParentStackName:
    # Declared here because the condition and FunctionName below reference it
    # and the parent stack passes it in; it was missing from the excerpt
    Type: String
    Default: ''
  S3Bucket:
    Description: S3 bucket containing the zipped lambda function
    Type: String
  S3Key:
    Description: S3 bucket key of the zipped lambda function
    Type: String
  LambdaName:
    Type: String
    Default: s3cleaner
  StepFunctionsArn:
    Type: String
  Environment:
    Type: String
    Description: Type of environment.
    Default: dev
    AllowedValues:
      - dev
      - dev/test
      - prod
      - test
      - poc
      - qa
      - uat
Conditions:
  IfSetParentStack: !Not
    - !Equals
      - !Ref ParentStackName
      - ''
Resources:
  S3CleanerRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: 2012-10-17
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - lambda.amazonaws.com
            Action:
              - sts:AssumeRole
      Path: /
      Policies:
        - PolicyName: S3Cleaner
          PolicyDocument:
            Version: 2012-10-17
            Statement:
              - Effect: Allow
                Action:
                  - lambda:ListFunctions
                  - lambda:InvokeFunction
                Resource:
                  - "*"
              - Effect: Allow
                Action:
                  - s3:*
                Resource:
                  - "*"
              - Effect: Allow
                Action:
                  - states:*
                Resource:
                  - "*"
              - Effect: Allow
                Action:
                  - logs:CreateLogStream
                  - logs:PutLogEvents
                  - logs:CreateLogGroup
                  - logs:DescribeLogStreams
                Resource:
                  - 'arn:aws:logs:*:*:*'
  S3CleanerFunction:
    Type: AWS::Lambda::Function
    Properties:
      Description: Empty S3 bucket cleaner
      FunctionName: !Sub '${ParentStackName}-${Environment}-${LambdaName}'
      Environment:
        Variables:
          StepFunctionsArn: !Ref StepFunctionsArn
      Code:
        S3Bucket: !Ref S3Bucket
        S3Key: !Ref S3Key
      Handler: s3_cleaner.lambda_handler
      Runtime: python3.12  # originally python2.7, which Lambda no longer accepts for new functions
      MemorySize: 3008
      Timeout: 300
      Role: !GetAtt S3CleanerRole.Arn

Outputs:
  S3CleanerArn:
    Description: Lambda Function Arn for empty S3 buckets cleaning
    Value: !GetAtt S3CleanerFunction.Arn
    Export:
      Name: !If
        - IfSetParentStack
        - !Sub '${ParentStackName}-${LambdaName}'
        - !Sub '${AWS::StackName}-${LambdaName}'
  S3CleanerName:
    Description: Lambda Function Name for empty S3 buckets cleaning
    Value: !Ref S3CleanerFunction
```

The role is simple enough. Still, remember about logs: always log your Lambda invocations. To avoid increasing costs, you may change your log retention policy so that logs automatically expire within an acceptable time frame. You should also limit each policy statement to the appropriate resources, for example the states statement, which attaches Step Functions actions to the role.
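Retention can be set declaratively with an AWS::Logs::LogGroup resource, or afterwards with boto3. Below is a minimal sketch of the latter, assuming Lambda’s default log group name /aws/lambda/&lt;function name&gt; (the group only exists after the first invocation) and an illustrative 14-day retention; set_lambda_log_retention is a hypothetical helper that takes a CloudWatch Logs client.

```python
def set_lambda_log_retention(logs_client, function_name, days=14):
    """Make a Lambda function's CloudWatch logs expire after `days` days.

    Hypothetical helper. Assumes the default log group that Lambda creates
    on first invocation; `days` must be a value CloudWatch Logs accepts
    (e.g. 1, 3, 5, 7, 14, 30, 60, 90, ...).
    """
    log_group = "/aws/lambda/" + function_name
    logs_client.put_retention_policy(
        logGroupName=log_group,
        retentionInDays=days,
    )
    return log_group
```

With the naming used in the module above, a call might look like set_lambda_log_retention(boto3.client("logs"), "mystack-dev-s3cleaner"), where "mystack" stands in for your ParentStackName.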

That states statement literally allows your Lambda function to perform actions on Step Functions. The rest of the code, apart from the self-explanatory outputs, is the Lambda function resource (AWS::Lambda::Function). The most confusing part could be:

```yaml
Environment:
  Variables:
    StepFunctionsArn: !Ref StepFunctionsArn
```

This Environment fragment defines the environment variables passed into the function, which the following Lambda handler then reads:

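```python
import os


def lambda_handler(event, context):
    # gears3 is the cleaner class described in this article
    bucket = gears3('s3', event, context)
    # ARN injected by CloudFormation via the function's Environment section
    stepfunctions_arn = os.environ['StepFunctionsArn']
    bucket.call_step_functions(arn=stepfunctions_arn)
```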

The value behind !Ref StepFunctionsArn is passed in from the parent template like this:

```yaml
Resources:
  LambdaStack:
    Type: AWS::CloudFormation::Stack
    Properties:
      Parameters:
        S3Bucket: !Ref ResourcesBucket
        ParentStackName: !Ref ParentStackName
        StepFunctionsArn: !GetAtt MyStateMachineStack.Outputs.StepFunctionsName
```

As mentioned before, the output of MyStateMachineStack (the ARN of the Step Functions workflow) is passed on once the Step Functions stack has been created. The Lambda module receives it as a parameter and uses it in the Environment section of the function definition.
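If this wiring breaks (for instance, an output that holds a name instead of an ARN), the handler’s os.environ['StepFunctionsArn'] lookup fails with a bare KeyError, or start_execution rejects the value at run time. A hypothetical helper, get_state_machine_arn, that reads the variable defensively and checks the ARN shape (arn:&lt;partition&gt;:states:&lt;region&gt;:&lt;account&gt;:stateMachine:&lt;name&gt;) could look like this:

```python
import os


def get_state_machine_arn(environ=os.environ):
    """Read the state machine ARN that CloudFormation injected as StepFunctionsArn.

    Hypothetical helper: fails fast with a clear message instead of a bare
    KeyError, and sanity-checks that the value looks like a state machine ARN.
    """
    arn = environ.get("StepFunctionsArn")
    if not arn:
        raise RuntimeError("StepFunctionsArn environment variable is not set")
    # Expected: arn:<partition>:states:<region>:<account>:stateMachine:<name>
    parts = arn.split(":")
    if len(parts) != 7 or parts[2] != "states" or parts[5] != "stateMachine":
        raise ValueError("Not a Step Functions state machine ARN: " + arn)
    return arn
```

Passing a plain dict instead of os.environ keeps the function easy to test.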

But wait, what’s inside MyStateMachineStack in the first place? There are two main parts: StatesExecutionRole, an IAM role that Step Functions assumes in order to invoke Lambda functions, and the state machine itself (MyStateMachine). One of the steps in our workflow is:

```json
"DeletedBuckets": {
  "Type": "Task",
  "Resource": "${LambdaArn}",
  "End": true
}
```

It references the LambdaArn variable passed as a parameter in the main template (i.e. LambdaArn: !GetAtt DynamoDbStack.Outputs.DynamodbFunctionArn).

In other words: take the output from DynamoDbStack and give me that ARN which I need in my Step Functions workflow.
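The substitution itself is done by !Sub: every ${Name} placeholder in the DefinitionString of the MyStateMachine resource is replaced with the matching parameter value, while JSONPath expressions such as $.NumDeletedbuckets (a $ not followed by {) pass through untouched. The simplified imitation below illustrates that. render_sub is a hypothetical name, and it only handles ${Name} placeholders (pseudo parameters like ${AWS::Region} included), not the ${!Literal} escape or ${Resource.Attribute} forms. The Lambda ARN is made up.

```python
import json
import re

# ${Name} or ${AWS::Something}; "$.path" does not match because "{" is required
PLACEHOLDER = re.compile(r"\$\{([A-Za-z0-9_:]+)\}")


def render_sub(template, values):
    """Simplified stand-in for Fn::Sub: replace ${Name} with values[Name]."""
    def replace(match):
        name = match.group(1)
        if name not in values:
            raise KeyError("No value for placeholder ${%s}" % name)
        return values[name]
    return PLACEHOLDER.sub(replace, template)


definition = """{
  "StartAt": "ChoiceState",
  "States": {
    "ChoiceState": {
      "Type": "Choice",
      "Choices": [{"Variable": "$.NumDeletedbuckets", "NumericGreaterThan": 0, "Next": "DeletedBuckets"}],
      "Default": "DefaultState"
    },
    "DeletedBuckets": {"Type": "Task", "Resource": "${LambdaArn}", "End": true},
    "DefaultState": {"Type": "Pass", "Result": "Nothing Changed", "End": true}
  }
}"""

lambda_arn = "arn:aws:lambda:eu-west-1:123456789012:function:example-dynamodb"  # made-up ARN
rendered = json.loads(render_sub(definition, {"LambdaArn": lambda_arn}))
print(rendered["States"]["DeletedBuckets"]["Resource"])  # the injected Lambda ARN
print(rendered["States"]["ChoiceState"]["Choices"][0]["Variable"])  # still $.NumDeletedbuckets
```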

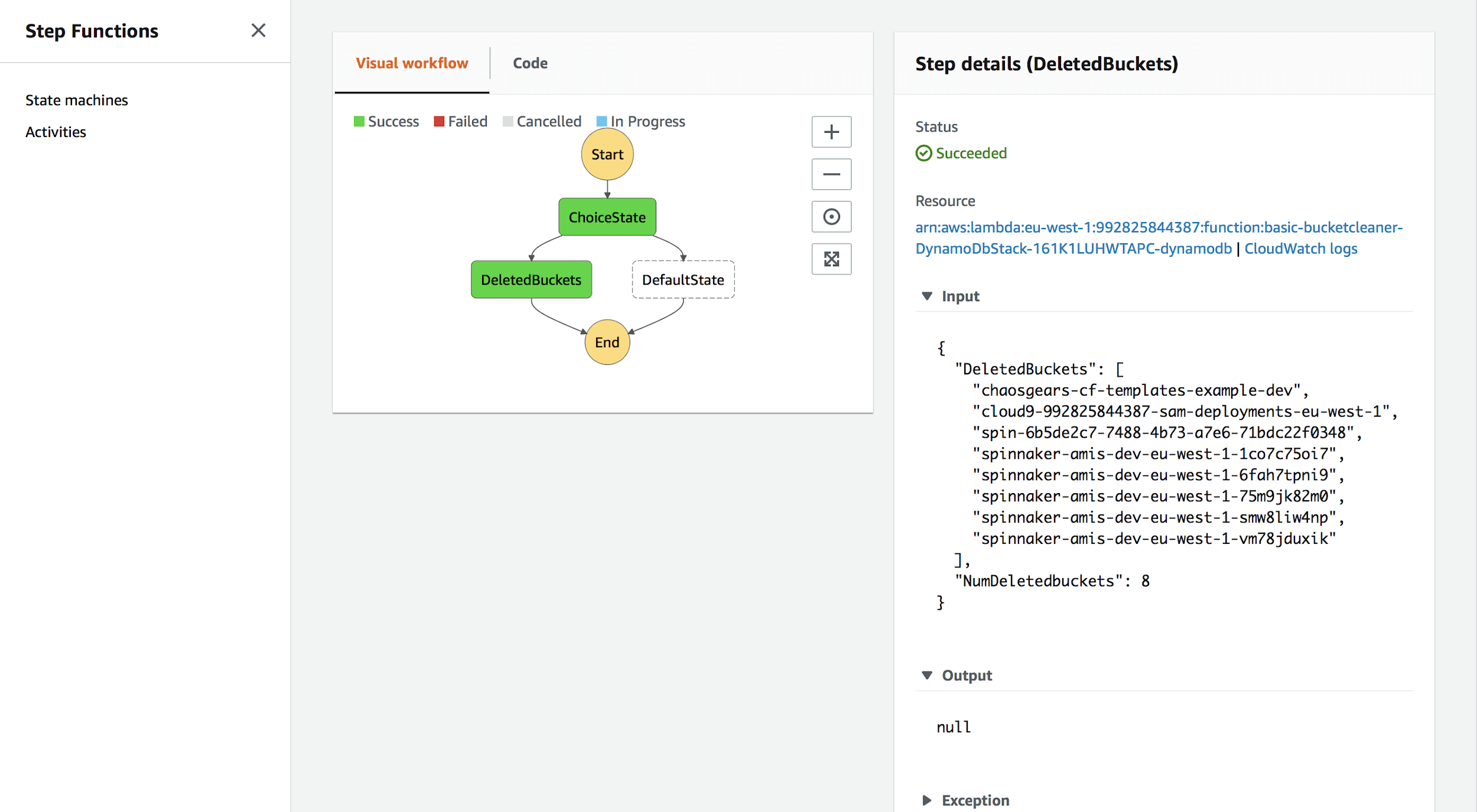

The workflow itself is pretty simple and relies on a basic “Choice” state:

```json
"ChoiceState": {
  "Type": "Choice",
  "Choices": [
    {
      "Variable": "$.NumDeletedbuckets",
      "NumericGreaterThan": 0,
      "Next": "DeletedBuckets"
    }
  ],
  "Default": "DefaultState"
}
```

The next step is chosen based on NumDeletedbuckets, which is part of the output of our Lambda cleaner function. We then either store the names of all deleted buckets in a DynamoDB table (via a Lambda function invoked by the Task state), or simply return “Nothing Changed” from a Pass state to signal that no bucket has been deleted.

```yaml
Parameters:
  LambdaArn:
    Description: LambdaArn
    Type: String
  Environment:
    Type: String
    Description: Type of environment.
    Default: dev
    AllowedValues:
      - dev
      - dev/test
      - prod
      - test
      - poc
      - qa
      - uat
Resources:
  StatesExecutionRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: !Sub 'StatesExecution-${Environment}'
      AssumeRolePolicyDocument:
        Version: 2012-10-17
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - !Sub states.${AWS::Region}.amazonaws.com
            Action:
              - sts:AssumeRole
      Path: /
      Policies:
        - PolicyName: InvokeLambdaFunction
          PolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: Allow
                Action: lambda:InvokeFunction
                Resource: '*'  # must be quoted: a bare * is a YAML alias marker
  MyStateMachine:
    Type: AWS::StepFunctions::StateMachine
    Properties:
      RoleArn: !GetAtt StatesExecutionRole.Arn
      DefinitionString: !Sub |
        {
          "Comment": "An example of the Amazon States Language using a choice state.",
          "StartAt": "ChoiceState",
          "States": {
            "ChoiceState": {
              "Type": "Choice",
              "Choices": [
                {
                  "Variable": "$.NumDeletedbuckets",
                  "NumericGreaterThan": 0,
                  "Next": "DeletedBuckets"
                }
              ],
              "Default": "DefaultState"
            },
            "DeletedBuckets": {
              "Type": "Task",
              "Resource": "${LambdaArn}",
              "End": true
            },
            "DefaultState": {
              "Type": "Pass",
              "Result": "Nothing Changed",
              "End": true
            }
          }
        }
Outputs:
  # Assumed: the parent template reads this as
  # MyStateMachineStack.Outputs.StepFunctionsName, so it must hold the ARN
  StepFunctionsName:
    Description: ARN of the state machine
    Value: !Ref MyStateMachine
```
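To reason about which branch a given payload takes, the ChoiceState can be imitated locally. The sketch below is only an illustration (next_state is a hypothetical name), not how Step Functions evaluates states. It also shows two pitfalls: the path is case-sensitive, so NumDeletedBuckets would not match $.NumDeletedbuckets, and an input without that field (e.g. null) does not fall through to Default; Step Functions fails the execution with a States.Runtime error instead.

```python
def next_state(state_input):
    """Local imitation of ChoiceState, for reasoning about inputs only.

    Mirrors: Variable "$.NumDeletedbuckets", NumericGreaterThan 0,
    Next "DeletedBuckets", Default "DefaultState".
    """
    if not isinstance(state_input, dict) or "NumDeletedbuckets" not in state_input:
        # In Step Functions a missing path here fails the execution
        # (States.Runtime) instead of falling through to Default
        raise LookupError("$.NumDeletedbuckets not found in state input")
    if state_input["NumDeletedbuckets"] > 0:
        return "DeletedBuckets"
    return "DefaultState"
```

For example, next_state({"NumDeletedbuckets": 0}) returns "DefaultState", while a payload with three deleted buckets returns "DeletedBuckets".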

Generally, the flow is as follows: create the Step Functions state machine -> pass its ARN as an output to LambdaStack -> receive it there as a Parameter -> expose it to the Lambda function as an environment variable (StepFunctionsArn: !Ref StepFunctionsArn).
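The same chain can be read as a dependency graph: CloudFormation derives the creation order of the nested stacks from the !GetAtt references (plus LambdaStack’s explicit DependsOn). As an illustration only, feeding that graph, transcribed by hand from the parent template, to Python’s standard graphlib module (3.9+) reproduces the order in which the stacks have to be created:

```python
from graphlib import TopologicalSorter

# Each stack maps to the stacks it depends on, taken from the parent template:
# - MyStateMachineStack uses !GetAtt DynamoDbStack.Outputs.DynamodbFunctionArn
# - LambdaStack uses !GetAtt MyStateMachineStack.Outputs.StepFunctionsName
#   (and also declares DependsOn: MyStateMachineStack)
dependencies = {
    "DynamoDbStack": set(),
    "MyStateMachineStack": {"DynamoDbStack"},
    "LambdaStack": {"MyStateMachineStack"},
}

creation_order = list(TopologicalSorter(dependencies).static_order())
print(creation_order)
# ['DynamoDbStack', 'MyStateMachineStack', 'LambdaStack']
```

If two stacks referenced each other, graphlib would raise CycleError, much like CloudFormation rejects circular dependencies between resources.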

To tie all of this together, we need two more things:

- DynamoDB

- CloudWatch Events

I’ve prepared the first one via another CloudFormation stack called DynamoDBTableStack. The table was added with minimal WCU/RCU (Write/Read capacity units) and tags for easier management. The module used in this stack is unfortunately too long to feasibly present here.

```yaml
DynamoDBTableStack:
  Type: AWS::CloudFormation::Stack
  Properties:
    Parameters:
      TableName: !Ref TableName
      FirstAttributeName: !Ref FirstAttributeName
      FirstAttributeType: !Ref FirstAttributeType
      FirstSchemaAttributeName: !Ref FirstSchemaAttributeName
      ProvisionedThroughputRead: !Ref ProvisionedThroughputRead
      ProvisionedThroughputWrite: !Ref ProvisionedThroughputWrite
      Customer: !Ref Customer
      ContactPerson: !Ref ContactPerson
      Environment: !Ref Environment
      Project: !Ref Project
      Application: !Ref Application
      Jira: !Ref Jira
      AWSNight: !Ref AWSNight
    TemplateURL: !If [IfVirginia, !Sub 'https://s3.amazonaws.com/${ResourcesBucket}/modules/aws/dynamoDB.yml', !Sub 'https://s3-${AWS::Region}.amazonaws.com/${ResourcesBucket}/modules/aws/dynamoDB.yml']
    TimeoutInMinutes: 5
```
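Since the table module isn’t shown, here is a hypothetical equivalent expressed as keyword arguments for the boto3 DynamoDB client’s create_table: one string hash key, minimal read/write capacity and a few tags. The key name bucketname (chosen to match the items written later), the table name and the tag values are assumptions, not the module’s actual contents.

```python
def table_definition(table_name, hash_key="bucketname", read_units=1, write_units=1, tags=None):
    """Build keyword arguments for DynamoDB's create_table (boto3 client).

    Hypothetical: the real DynamoDBTableStack module is not shown; this only
    mirrors its parameters (one key attribute, minimal RCU/WCU, tags).
    """
    return {
        "TableName": table_name,
        "AttributeDefinitions": [{"AttributeName": hash_key, "AttributeType": "S"}],
        "KeySchema": [{"AttributeName": hash_key, "KeyType": "HASH"}],
        "ProvisionedThroughput": {
            "ReadCapacityUnits": read_units,
            "WriteCapacityUnits": write_units,
        },
        # DynamoDB expects tags as a list of Key/Value pairs
        "Tags": [{"Key": k, "Value": v} for k, v in sorted((tags or {}).items())],
    }
```

The result could be passed as boto3.client("dynamodb").create_table(**table_definition("deleted-buckets", tags={"Environment": "dev"})), although in this setup CloudFormation owns the table.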

As for the events, I haven’t migrated that code into a module, due to external circumstances at that point in time, i.e. pure laziness ;) It would, however, be prudent to do so, unless you tackle the issue in a different way altogether, which we will do in future articles by using the Serverless Framework for some of this work.

That said, the Events::Rule resource is scheduled with a cron expression (EventsCronExpression). Additionally, we’ve included a permission for CloudWatch Events to invoke our Lambda, defined in the Lambda::Permission resource.

```yaml
# Excerpt from the main template
Parameters:
  EventsCronExpression:
    Description: Cron expression
    Type: String
    Default: '0 23 ? * FRI *'

Resources:
  ScheduledRule:
    Type: AWS::Events::Rule
    Properties:
      Name: !Ref EventName
      Description: ScheduledRule
      ScheduleExpression: !Sub 'cron(${EventsCronExpression})'
      State: "ENABLED"
      Targets:
        - Arn: !GetAtt LambdaStack.Outputs.S3CleanerArn
          Id: AsgManageFunction
    DependsOn:
      - LambdaStack
  PermissionForEventsToInvokeLambda:
    Type: AWS::Lambda::Permission
    Properties:
      FunctionName: !GetAtt LambdaStack.Outputs.S3CleanerName
      Action: lambda:InvokeFunction
      Principal: events.amazonaws.com
```
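The default expression 0 23 ? * FRI * reads as minute 0, hour 23, any day of the month, every month, on Fridays, in any year, and scheduled rules evaluate it in UTC. To make that concrete, here is a small sketch that computes the next run from a given moment. It is hand-written for this one expression (next_friday_2300_utc is a hypothetical name), not a general cron parser.

```python
import datetime


def next_friday_2300_utc(now):
    """Next run time of cron(0 23 ? * FRI *), which scheduled rules evaluate in UTC.

    Hand-written for this one expression; not a general cron parser.
    `now` must be a timezone-aware datetime.
    """
    now = now.astimezone(datetime.timezone.utc)
    # Monday is 0, Friday is 4
    days_ahead = (4 - now.weekday()) % 7
    candidate = (now + datetime.timedelta(days=days_ahead)).replace(
        hour=23, minute=0, second=0, microsecond=0
    )
    if candidate <= now:
        # Already at or past 23:00 on this Friday: move to next week
        candidate += datetime.timedelta(days=7)
    return candidate
```

For instance, starting from Monday 2024-01-01 00:00 UTC, the next run is Friday 2024-01-05 23:00 UTC.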

We’re now more or less done with the CloudFormation part. It’s high time to dive into the Lambda code.

I’m not a programmer, and yet my experience says that repeatable tasks can ultimately be completed more easily and quickly with code than manually. Of course, it depends on your coding skills, but if you write the code once and spend some time abstracting the problem so the solution can be reused, you’re going to be more satisfied, because you won’t ever have to do the same thing over and over again. It will be automated.

I am aware that some people argue against automation and consider it a waste of time (“we can do the same via the GUI”), but they tend to forget some key points:

- code versioning — it simplifies teamwork and gives a better overview of the infrastructure and deployed services due to the declarative nature of infrastructure as code definitions,

- it’s easy to reuse that code in different environments (dev, qa, prod),

- it’s less prone to human error (especially common during menial, repetitive tasks where our minds just blank out),

- it simplifies processes and, unlike humans, never sleeps,

- tasks can be launched simultaneously,

- it speeds up your work in the longer term, so you’ve got more time for other tasks,

- tasks can be launched periodically in an automated manner.

Those are only some key aspects, but the more you automate, the more benefits you will encounter.

One function to find them…

Let’s finally dive into our actual business logic, which is encapsulated in a class called gears3.

Within delete_empty_buckets() we first check whether there’s something to be deleted. If not, only a NOCHANGE message is sent. Otherwise, we count the number of buckets to be deleted, remove them, and send a proper message containing said number of deleted buckets along with their names.

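```python
from botocore.exceptions import ClientError


def delete_empty_buckets(self):
    # gears3 method: delete every empty bucket and report what was removed
    count = 0
    deleted = []
    try:
        todelete = self.return_empty_s3()  # helper not shown in this article
        length = len(todelete)
        if length == 0:
            responseData = {'NumDeletedbuckets': count}
            sendResponse(self.event, self.context, 'NOCHANGE', responseData)
            return responseData
        for bucket in todelete:
            # Raises ClientError (e.g. BucketNotEmpty) if the bucket has contents
            self.resource.Bucket(bucket).delete()
            count += 1
            deleted.append(bucket)
        if count != length:
            raise Exception('Failed to delete all ' + str(length) + ' buckets')
        responseData = {'NumDeletedbuckets': count, 'DeletedBuckets': deleted}
        sendResponse(self.event, self.context, 'SUCCESS', responseData)
        return responseData
    except ClientError as e:
        print(e.response['Error']['Code'] + ':', e.response['Error']['Message'])
        # Still report what was deleted so Step Functions gets a payload, not null
        return {'NumDeletedbuckets': count, 'DeletedBuckets': deleted}
```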
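The return_empty_s3() helper isn’t shown in the article. A minimal sketch of what such a helper might do follows; find_empty_buckets and its prefix filter are hypothetical, while list_buckets, list_objects_v2 and list_object_versions are real S3 client calls. Object versions and delete markers are checked too, because a versioned bucket can look empty to list_objects_v2 and still refuse deletion with BucketNotEmpty. The optional name prefix is an assumed safety net, so that only test-created buckets are considered.

```python
def find_empty_buckets(s3_client, prefix=""):
    """Return names of buckets holding no objects, versions or delete markers.

    Hypothetical stand-in for return_empty_s3(); `s3_client` is e.g.
    boto3.client('s3'). MaxKeys=1 is enough to tell "empty" from "not empty".
    """
    empty = []
    for bucket in s3_client.list_buckets()["Buckets"]:
        name = bucket["Name"]
        if not name.startswith(prefix):
            continue  # only consider buckets matching the (assumed) test prefix
        objects = s3_client.list_objects_v2(Bucket=name, MaxKeys=1)
        if objects.get("KeyCount", 0) > 0:
            continue
        # Versioned buckets may still contain old versions or delete markers
        versions = s3_client.list_object_versions(Bucket=name, MaxKeys=1)
        if versions.get("Versions") or versions.get("DeleteMarkers"):
            continue
        empty.append(name)
    return empty
```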

sendResponse is a helper function that builds a human-readable response body and prints it to the function’s CloudWatch log stream:

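```python
import json


def sendResponse(event, context, responseStatus, responseData):
    # Build a response body; print() output lands in the CloudWatch log stream
    responseBody = {
        'Status': responseStatus,
        'Reason': 'See the details in CloudWatch Log Stream: {}'.format(context.log_stream_name),
        'PhysicalResourceId': context.log_stream_name,
        'Data': responseData,
    }
    # Print the JSON string itself (wrapping it in { } would print a set)
    print('Response Body:', json.dumps(responseBody))
```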

This Lambda is also responsible for triggering Step Functions. Below is my “9mm” gun for calling the Step Functions service. The ARN value comes from an environment variable, read as follows:

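```python
import json
import os

import boto3
from botocore.exceptions import ClientError

# Read from the environment, as in lambda_handler
stepfunctions_arn = os.environ['StepFunctionsArn']


def call_step_functions(self, arn):
    # gears3 method: run the cleanup and start an execution with its result as input
    try:
        client = boto3.client('stepfunctions')
        response = client.start_execution(
            stateMachineArn=arn,
            name=generate_random_string(),  # execution names must be unique
            input=json.dumps(self.delete_empty_buckets()),
        )
        return response
    except ClientError as e:
        print(e.response['Error']['Code'] + ':', e.response['Error']['Message'])
```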
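generate_random_string() is not shown either. Step Functions execution names must be unique per state machine for a period of time (90 days for Standard workflows), at most 80 characters long, and free of whitespace and special characters, so a random hex token fits those rules. A hypothetical version based on the standard secrets module:

```python
import secrets


def generate_random_string(length=32):
    """Hypothetical version of the article's helper.

    Returns `length` lowercase hex characters: valid as a Step Functions
    execution name (max 80 characters) and usable as the DynamoDB 'hash'.
    """
    if not 1 <= length <= 80:
        raise ValueError("length must be between 1 and 80")
    # token_hex(n) yields 2*n hex digits; trim to the requested length
    return secrets.token_hex((length + 1) // 2)[:length]
```

With 32 hex characters (128 random bits), an accidental name collision is practically impossible.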

The final part simply defines the Lambda handler, in which the gears3 object is created and its call_step_functions method is invoked.

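```python
import os


def lambda_handler(event, context):
    # gears3 is the cleaner class described in this article
    bucket = gears3('s3', event, context)
    # ARN injected by CloudFormation via the function's Environment section
    stepfunctions_arn = os.environ['StepFunctionsArn']
    bucket.call_step_functions(arn=stepfunctions_arn)
```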

… and one function to bind them

The second Lambda in the flow is built around a small class called Dynamo. Its main method, put_dynamo_items() shown below, stores items in a particular DynamoDB table; the class also has some additional helper methods for working with DynamoDB.

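```python
import datetime

from botocore.exceptions import ClientError


def put_dynamo_items(self, bucketlist, owner='chaosgears'):
    # Dynamo method; self.table is a boto3 DynamoDB Table resource
    response = None
    try:
        for bucket in bucketlist:
            response = self.table.put_item(
                Item={
                    'bucketname': bucket,
                    'date': datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S"),
                    'hash': generate_random_string(),
                    'owner': owner,
                }
            )
        # Return after the loop; returning inside it stored only the first bucket
        return response
    except ClientError as err:
        print(err.response['Error']['Code'] + ':', err.response['Error']['Message'])
```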
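The handler of this second Lambda isn’t shown. Because the DeletedBuckets Task state hands the cleaner’s output straight to the function as its event, a handler only needs to read event['DeletedBuckets']. The sketch below is hypothetical (build_items and store_deleted_buckets are illustrative names) and swaps in the Table resource’s batch_writer(), which groups the puts into batched writes and resends unprocessed items, as an alternative to one put_item request per bucket.

```python
import datetime
import secrets


def build_items(bucket_names, owner="chaosgears", now=None):
    """One DynamoDB item per deleted bucket, with the fields used by put_dynamo_items()."""
    now = now or datetime.datetime.now()
    stamp = now.strftime("%Y-%m-%d %H:%M:%S")
    return [
        {
            "bucketname": name,
            "date": stamp,
            "hash": secrets.token_hex(16),  # stand-in for generate_random_string()
            "owner": owner,
        }
        for name in bucket_names
    ]


def store_deleted_buckets(table, event, owner="chaosgears"):
    """Write every bucket listed in the Step Functions input to `table`.

    `table` is a boto3 DynamoDB Table resource, e.g.
    boto3.resource('dynamodb').Table('deleted-buckets') (hypothetical name).
    """
    items = build_items(event.get("DeletedBuckets", []), owner)
    # batch_writer buffers put_item calls into BatchWriteItem requests
    with table.batch_writer() as batch:
        for item in items:
            batch.put_item(Item=item)
    return {"StoredItems": len(items)}
```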

When the dust settles

This short story shows how a combination of serverless AWS services can ease the pain of repetitive tasks. Honestly, it’s a trivial case, and the benefits are quite obvious:

- We no longer have to keep an eye on the empty buckets which, after different kinds of tests, multiply like maggots and easily slip your memory. That small step allows us to spend our time on new challenges and automation tasks rather than repeatedly coming back to clean up unnecessary buckets;

- We’ve gained some knowledge about integrating Lambda functions with Step Functions and picked up new skills with boto3;

- We deployed the whole solution via CloudFormation, which showed us that the Serverless Framework saves much more time and makes it easier to keep everything in one place.