How to automate Docker image creation on AWS

A practical introduction to CI/CD tools in AWS' roster, focused on creating an automated pipeline for building Docker images.

We recently talked about automation in the context of cleaning up unneeded S3 buckets, but this time we would like to focus on native AWS CI/CD services (CodePipeline, CodeBuild, ECR) that can improve our everyday workflow.

Docker basics

The solution we are going to implement is deliberately simple, but it illustrates how AWS automation services work and what benefits they bring to the table. We will create the entire environment following the infrastructure as code principle, using HashiCorp Terraform, a tool often compared to AWS CloudFormation.

Terraform is one of the most popular open-source tools to define and manage infrastructure for many providers. Based on HashiCorp Configuration Language (HCL), it codifies APIs into declarative configuration files that can be shared amongst team members.
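Every snippet in this article is a declarative resource definition. Terraform needs a provider configuration to know which API and which region to talk to. Here is a minimal sketch, assuming a hypothetical `aws_region` input variable and an example default region. It uses the same `"${...}"` interpolation style as the rest of the article.

```hcl
# Hypothetical input variable; override it with -var 'aws_region=...' or TF_VAR_aws_region
variable "aws_region" {
  description = "AWS region for the pipeline, build project and registry"
  default     = "eu-west-1" # example value only
}

# Configures the AWS provider used by every resource in this article
provider "aws" {
  region = "${var.aws_region}"
}
```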

The guiding idea behind Docker images is a common, compatible environment for the whole team, with exactly the same package versions installed. In this scenario, we want an environment prepared for running Terraform. Yes, the very same images can then run the Terraform code that defines this pipeline and deploys it on AWS (inception, yeah!). However, before we start the game, we need to go shopping in AWS’ automation catalogue:

- AWS CodePipeline — allows you to automate the release process for your application or service

- AWS CodeBuild — helps to build and test code with continuous scaling

- Amazon ECR — private Docker registry to store your images

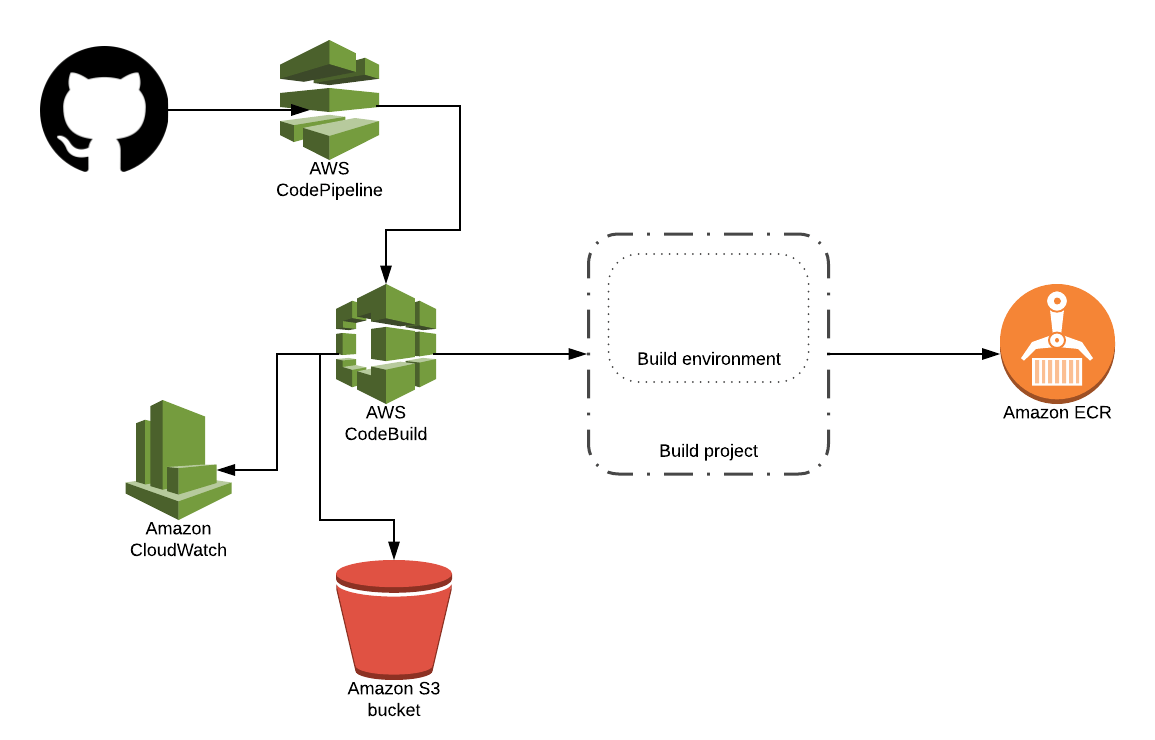

Every great thing grows from a small seed — and the architecture we are aiming for looks roughly as follows:

Docker image building pipeline

- The `Dockerfile` is stored in a VCS repository (Git on GitHub),

- AWS CodePipeline fetches the source code from said repository,

- AWS CodeBuild runs a build job specified in a `buildspec.yml` file included alongside the source code,

- The build project installs all required packages (builds the image) and pushes the resulting artifact (image) to Amazon ECR,

- AWS CodeBuild pushes its logs to Amazon CloudWatch.

Dockerfile

The form and content of your image depend on your needs. Remember, however, to keep images lean and to build large applications as separate images.

```dockerfile
FROM alpine:latest

ENV TERRAFORM_VERSION=0.11.10
ENV TERRAFORM_SHA256SUM=43543a0e56e31b0952ea3623521917e060f2718ab06fe2b2d506cfaa14d54527

# Install tooling, download Terraform, verify its checksum and unpack the binary into /bin.
# BusyBox "sha256sum -c" expects two spaces between the hash and the file name.
RUN apk add --no-cache git curl openssh bash && \
    curl https://releases.hashicorp.com/terraform/${TERRAFORM_VERSION}/terraform_${TERRAFORM_VERSION}_linux_amd64.zip > terraform_${TERRAFORM_VERSION}_linux_amd64.zip && \
    echo "${TERRAFORM_SHA256SUM}  terraform_${TERRAFORM_VERSION}_linux_amd64.zip" > terraform_${TERRAFORM_VERSION}_SHA256SUMS && \
    sha256sum -cs terraform_${TERRAFORM_VERSION}_SHA256SUMS && \
    unzip terraform_${TERRAFORM_VERSION}_linux_amd64.zip -d /bin && \
    rm -f terraform_${TERRAFORM_VERSION}_linux_amd64.zip
```

Buildspec.yml

When you have a long list of commands, or want to reuse this step in another stage, a YAML-formatted buildspec file is the recommended approach. You can keep multiple build specifications in the same repository, but each build project uses only one buildspec file.
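In Terraform, you select a project's buildspec with the `buildspec` argument of its `source` block. The argument accepts either a path relative to the source root or inline YAML. When it is omitted, CodeBuild looks for `buildspec.yml` in the root of the source. The fragment below is a sketch that points one project at a hypothetical `terraform/buildspec.yml`. A second project could point at another file in the same repository.

```hcl
  # Fragment of an aws_codebuild_project resource
  source {
    type      = "CODEPIPELINE"
    buildspec = "terraform/buildspec.yml" # hypothetical path, relative to the source root
  }
```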

In buildspec version 0.1, each command runs in isolation from all other commands (in a separate shell instance in the build environment). That is why version 0.2, which removes this limitation, is recommended. The `run-as` setting specifies which Linux user runs particular commands or all of them; root is the default. Environment variables can be defined in the buildspec or passed from the CodeBuild project. Keep in mind that plain-text environment variables can be displayed in the CodeBuild console and through the AWS CLI, so sensitive values belong in Systems Manager Parameter Store.
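A CodeBuild project can also pass a secret to the build by referencing an SSM parameter instead of a literal value. Version 0.2 buildspecs offer the same thing through their `env` / `parameter-store` section.

The fragment below is a sketch. It assumes an AWS provider version in which `environment_variable` supports the `type` argument. `GITHUB_TOKEN` is a hypothetical variable name, and `github_token` is the SSM parameter used later in this article. The CodeBuild service role would also need `ssm:GetParameters` on that parameter.

```hcl
    # Fragment of the environment block of an aws_codebuild_project resource
    environment_variable {
      name  = "GITHUB_TOKEN"    # hypothetical variable name seen by the build
      value = "github_token"    # name of the SSM parameter, not the secret itself
      type  = "PARAMETER_STORE" # CodeBuild resolves the value when the build starts
    }
```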

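```yaml
version: 0.2

phases:
  install:
    commands:
      - echo "install step..."
      - apt-get update -y
  pre_build:
    commands:
      - echo "logging in to AWS ECR..."
      # AWS CLI v1 syntax; with AWS CLI v2 use instead:
      # aws ecr get-login-password --region $AWS_REGION | docker login --username AWS --password-stdin $ECR_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com
      - $(aws ecr get-login --no-include-email --region $AWS_REGION)
  build:
    commands:
      - echo "build Docker image started on `date`"
      # Build context: the $IMAGE_NAME directory (assumed to hold the Dockerfile)
      - docker build -t $ECR_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/$IMAGE_REPO_NAME:$IMAGE_TAG $IMAGE_NAME/.
  post_build:
    commands:
      - echo "build Docker image complete `date`"
      - echo "push Docker image to ECR..."
      - docker push $ECR_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/$IMAGE_REPO_NAME:$IMAGE_TAG
```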

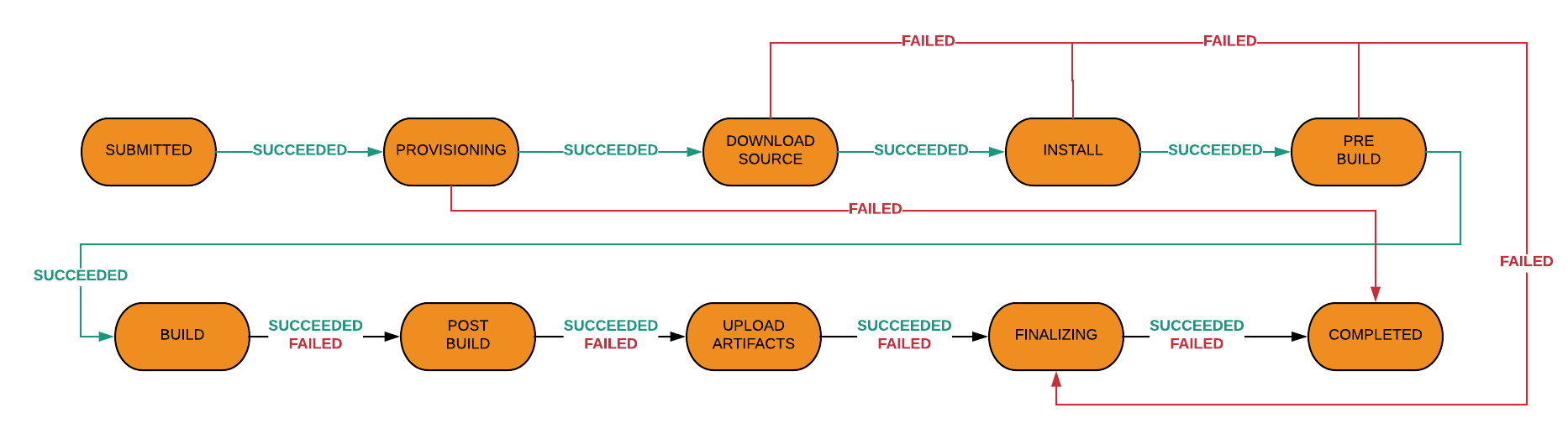

Builds in AWS CodeBuild proceed in a few phases:

- `install`: for installing packages

- `pre_build`: for installing dependencies or signing in to another tool

- `build`: for running build or testing tools

- `post_build`: for building artifacts into a JAR/WAR file, pushing a Docker image into Amazon ECR or sending a build notification through Amazon SNS

AWS CodeBuild project action phases

It is important to be aware of the transition rules between phases, especially that `post_build` runs even if the `build` phase fails. This allows you to recover partial artifacts for debugging build or test failures.

When you build and push Docker images to Amazon ECR, or run unit tests on your source code without actually building it, there is no need to define artifacts. In every other case, AWS CodeBuild can upload your artifacts to an Amazon S3 bucket of your choosing.
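A project driven by CodePipeline keeps its `artifacts` block set to `CODEPIPELINE`, as in the project definition later in this article. For a standalone project, the two cases might be sketched as below. A project has a single `artifacts` block, so these are alternatives. The `aws_s3_bucket.artifacts` bucket is hypothetical, and option 2 also requires an `artifacts` section in the buildspec listing the files to upload.

```hcl
# Option 1 (fragment): image-only builds, nothing to upload
artifacts {
  type = "NO_ARTIFACTS"
}

# Option 2 (fragment): upload the build output to S3 as a ZIP archive
artifacts {
  type      = "S3"
  location  = "${aws_s3_bucket.artifacts.bucket}" # hypothetical bucket
  name      = "build-output.zip"
  packaging = "ZIP"
}
```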

Amazon ECR

We run our private repository on AWS. There are some limits, but at the time of writing they are generous: up to 10,000 images per repository and up to 100,000 repositories, which is more than enough for most practical use cases. Plus, it’s trivial to create lifecycle policy rules that rotate out unnecessary images.
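Terraform attaches a lifecycle policy through a separate resource. The sketch below targets the `tf-images` repository defined in the next snippet. Its thresholds (14 days for untagged images, 30 images in total) are example values only. Rules are evaluated in ascending `rulePriority` order, and a rule whose `tagStatus` is `any` must carry the highest priority value.

```hcl
resource "aws_ecr_lifecycle_policy" "terraform" {
  repository = "${aws_ecr_repository.terraform.name}"

  policy = <<EOF
{
  "rules": [
    {
      "rulePriority": 1,
      "description": "Expire untagged images 14 days after push (example value)",
      "selection": {
        "tagStatus": "untagged",
        "countType": "sinceImagePushed",
        "countUnit": "days",
        "countNumber": 14
      },
      "action": { "type": "expire" }
    },
    {
      "rulePriority": 2,
      "description": "Keep only the 30 most recent images (example value)",
      "selection": {
        "tagStatus": "any",
        "countType": "imageCountMoreThan",
        "countNumber": 30
      },
      "action": { "type": "expire" }
    }
  ]
}
EOF
}
```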

To be able to host your own private Docker repository on AWS, all you have to do is to create it and attach an access policy to it. When defining permissions, it’s good practice to follow the principle of least privilege and restrict access only to the services which are actually used.

```hcl
resource "aws_ecr_repository" "terraform" {
  name = "tf-images"
}

# Lets the CodeBuild service principal pull from and push to this repository
resource "aws_ecr_repository_policy" "terraform_policy" {
  repository = "${aws_ecr_repository.terraform.name}"

  policy = <<EOF
{
  "Version": "2008-10-17",
  "Statement": [
    {
      "Sid": "new policy",
      "Effect": "Allow",
      "Principal": {
        "Service": "codebuild.amazonaws.com"
      },
      "Action": [
        "ecr:BatchCheckLayerAvailability",
        "ecr:BatchGetImage",
        "ecr:CompleteLayerUpload",
        "ecr:GetDownloadUrlForLayer",
        "ecr:InitiateLayerUpload",
        "ecr:PutImage",
        "ecr:UploadLayerPart"
      ]
    }
  ]
}
EOF
}
```
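It can be handy to expose the repository address so that other projects or team members can use it. Here is a small sketch with a hypothetical output name. The `repository_url` attribute has the form `<account_id>.dkr.ecr.<region>.amazonaws.com/tf-images`.

```hcl
# Hypothetical output name; prints the registry address of the tf-images repository
output "tf_images_repository_url" {
  value = "${aws_ecr_repository.terraform.repository_url}"
}
```

After `terraform apply`, running `terraform output tf_images_repository_url` shows the address. The pipeline's image can then be referenced as `<repository_url>:0.11.10`.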

AWS CodePipeline

Stage-based tools can build, test, and deploy source code every time there is a code change. They detect issues at any step and thereby prevent faulty changes from being deployed automatically. CodePipeline is a fully managed AWS service that provides continuous integration and delivery by orchestrating other services, depending on the action type. Supported action providers include, amongst others:

Source actions

- Amazon S3

- AWS CodeCommit

- GitHub

- Amazon ECR

Build actions

- AWS CodeBuild

- CloudBees

- Jenkins

- Solano CI

- TeamCity

Test actions

- AWS CodeBuild

- AWS Device Farm

- BlazeMeter

- Ghost Inspector

- HPE StormRunner Load

- Nouvola

- Runscope

Deploy actions

- AWS CloudFormation

- AWS CodeDeploy

- Amazon ECS

- AWS OpsWorks Stacks

- AWS Service Catalog

- XebiaLabs

Approval actions

- Amazon Simple Notification Service (SNS)

Invoke actions

- AWS Lambda

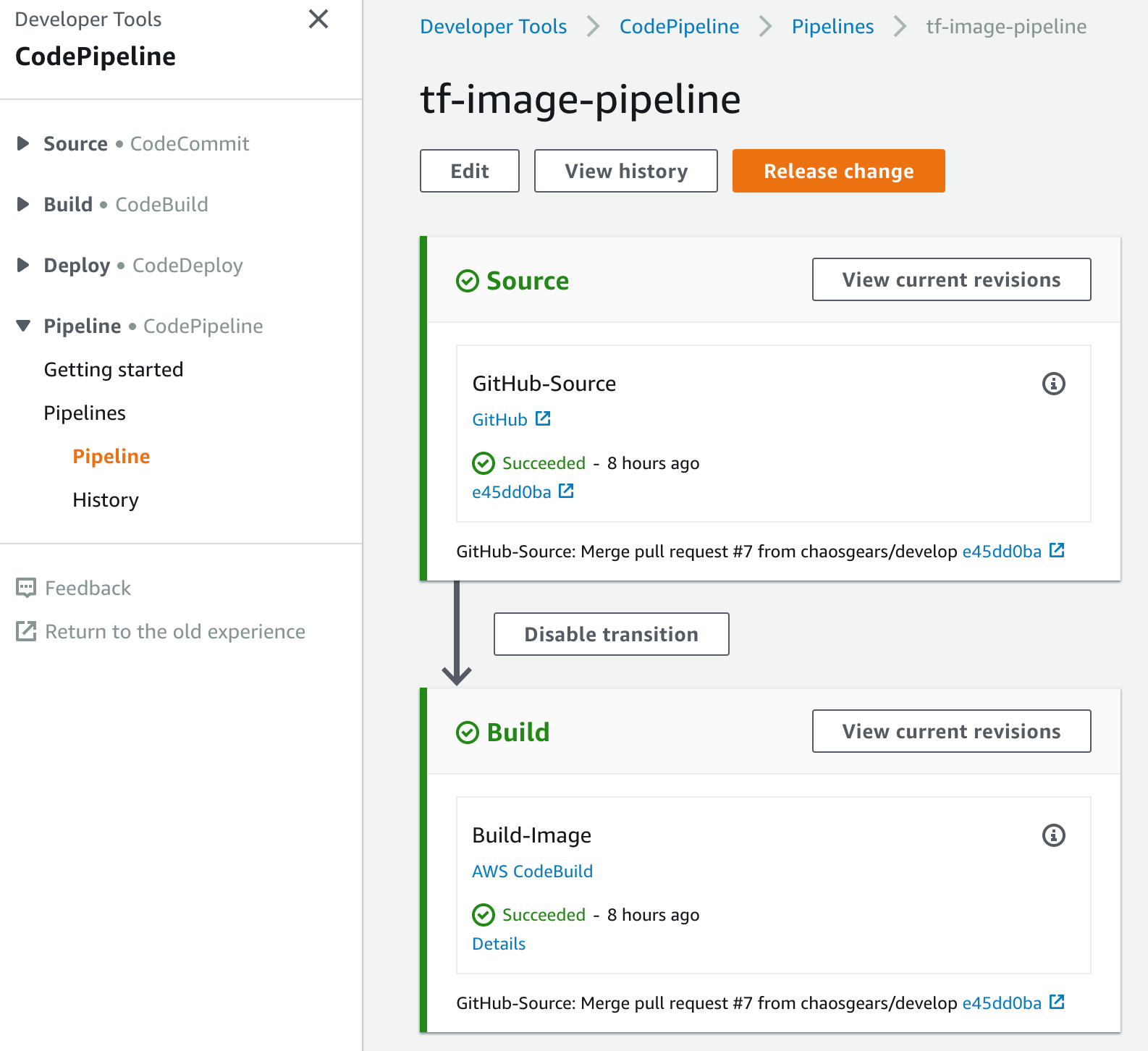

AWS CodePipeline basic stages for building Docker images

Once you add a GitHub source repository, you can use dedicated webhooks that trigger your pipeline whenever changes in your repository occur.
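For the GitHub (version 1) source action used here, Terraform can declare the webhook with `aws_codepipeline_webhook`. The sketch below assumes a hypothetical `webhook_secret` variable. Note these points:

- `target_action` must match the source action name.
- The filter restricts triggers to pushes on the branch configured in that action.
- The matching webhook on the GitHub side still has to be registered, by hand or with the GitHub Terraform provider, using the `url` this resource exports.
- Setting `PollForSourceChanges = "false"` in the source action's configuration stops the pipeline from also polling for changes.

```hcl
# Hypothetical shared secret, also configured on the GitHub webhook
variable "webhook_secret" {
  description = "Secret used to sign GitHub webhook payloads (HMAC)"
}

resource "aws_codepipeline_webhook" "github" {
  name            = "tf-image-pipeline-github"
  authentication  = "GITHUB_HMAC"
  target_action   = "GitHub-Source"
  target_pipeline = "${aws_codepipeline.tf_image_pipeline.name}"

  authentication_configuration {
    secret_token = "${var.webhook_secret}"
  }

  # Only react to pushes on the branch configured in the source action
  filter {
    json_path    = "$.ref"
    match_equals = "refs/heads/{Branch}"
  }
}
```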

Currently, granting AWS CodePipeline access implicitly grants it permissions to all repositories your GitHub account has access to. If you want to limit CodePipeline’s access to a specific set of repositories, create a dedicated GitHub account and grant it access only to the repositories you want to integrate with your pipeline.

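```hcl
resource "aws_codepipeline" "tf_image_pipeline" {
  name     = "tf-image-pipeline"
  role_arn = "${aws_iam_role.codepipeline_role.arn}"

  # Required: S3 bucket where CodePipeline stores artifacts passed between stages.
  # The CodeBuild cache bucket is reused here for brevity; a dedicated bucket is cleaner.
  artifact_store {
    location = "${aws_s3_bucket.codebuild.bucket}"
    type     = "S3"
  }

  stage {
    name = "Source"

    action {
      name             = "GitHub-Source"
      category         = "Source"
      owner            = "ThirdParty"
      provider         = "GitHub"
      version          = "1"
      output_artifacts = ["terraform-image"]

      configuration = {
        Owner      = "chaosgears"
        Repo       = "common-docker-images"
        Branch     = "master"
        OAuthToken = "${data.aws_ssm_parameter.github_token.value}"
      }
    }
  }

  stage {
    name = "Build"

    action {
      name            = "Build-Image"
      category        = "Build"
      owner           = "AWS"
      provider        = "CodeBuild"
      input_artifacts = ["terraform-image"]
      version         = "1"

      configuration = {
        # CodePipeline expects the project name (the resource id may be the project ARN)
        ProjectName = "${aws_codebuild_project.tf_image_build.name}"
      }
    }
  }
}
```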

If you have data that you don’t want users to alter or reference in clear text, such as tokens, passwords, or license keys, create those parameters with the SecureString data type in Parameter Store, a capability of AWS Systems Manager (SSM).
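You can create the parameter once by hand, or manage it with Terraform. The latter is best done from a separate, bootstrap configuration applied before the pipeline configuration reads it. The sketch below assumes a hypothetical `github_token` input variable. Keep in mind that Terraform writes the value to its state in plain text, both here and when reading it through a data source, so the state backend itself must be protected.

```hcl
# Hypothetical input; supply it via TF_VAR_github_token rather than a .tfvars file committed to Git
variable "github_token" {
  description = "GitHub OAuth token used by CodePipeline"
}

resource "aws_ssm_parameter" "github_token" {
  name  = "github_token"
  type  = "SecureString" # encrypted with the default aws/ssm KMS key unless key_id is set
  value = "${var.github_token}"
}
```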

```hcl
# Reads the SecureString parameter (decrypted by default) at plan time
data "aws_ssm_parameter" "github_token" {
  name = "github_token"
}
```

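The pipeline’s service role below does three things:

- It allows `ssm:GetParameters` on the token parameter.
- It lets the pipeline start the CodeBuild project and check its builds.
- Its trust policy allows the CodePipeline service to assume the role through AWS Security Token Service (STS), which issues the temporary credentials for the pipeline’s API calls.

Note that in this setup Terraform itself reads the token through the data source while planning, using your own credentials, so the pipeline role does not strictly need the SSM permission. That permission is, however, required by any role that resolves SSM parameters at run time, such as a CodeBuild role using Parameter Store environment variables.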

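```hcl
resource "aws_iam_role" "codepipeline_role" {
  name = "codepipeline-terraform-images"

  # Allows the CodePipeline service to assume this role via STS
  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "codepipeline.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}

resource "aws_iam_role_policy" "codepipeline_policy" {
  name = "codepipeline-terraform-policy"
  role = "${aws_iam_role.codepipeline_role.id}"

  # The S3 statement covers the bucket the pipeline uses as its artifact store
  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "codebuild:BatchGetBuilds",
        "codebuild:StartBuild"
      ],
      "Resource": "${aws_codebuild_project.tf_image_build.arn}",
      "Effect": "Allow"
    },
    {
      "Action": [
        "ssm:GetParameters"
      ],
      "Resource": "${data.aws_ssm_parameter.github_token.arn}",
      "Effect": "Allow"
    },
    {
      "Action": [
        "s3:GetObject",
        "s3:GetObjectVersion",
        "s3:GetBucketVersioning",
        "s3:PutObject"
      ],
      "Resource": [
        "${aws_s3_bucket.codebuild.arn}",
        "${aws_s3_bucket.codebuild.arn}/*"
      ],
      "Effect": "Allow"
    }
  ]
}
EOF
}
```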

It is worth mentioning that a multi-stage pipeline takes somewhat longer to complete than the sum of its individual stages, because of the transitions between them. One reported workaround is to keep the pipeline ‘warm’ by pushing dummy commits to a dedicated branch, e.g. every 15 seconds. Moreover, if a pipeline contains multiple source actions, all of them run again even if a change is detected for only one of them.

AWS CodeBuild

With CodeBuild, you are charged for compute resources based on how long your builds take to execute, at a per-minute rate that depends on the selected compute type. This can turn out more expensive than a self-hosted server if you have long-running builds or many builds per month. On the other hand, a self-hosted build server can become a single point of failure, with builds taking too long and everyone complaining about delays. Once that happens, a hosted solution that frees you from maintaining a build server becomes attractive.

```hcl
resource "aws_codebuild_project" "tf_image_build" {
  name         = "tf-image-build"
  service_role = "${aws_iam_role.codebuild_role.arn}"

  artifacts {
    type = "CODEPIPELINE"
  }

  # Build cache kept in the S3 bucket defined for CodeBuild
  cache {
    type     = "S3"
    location = "${aws_s3_bucket.codebuild.bucket}"
  }

  environment {
    compute_type = "BUILD_GENERAL1_SMALL"
    # AWS-managed image with Docker; newer setups typically use an aws/codebuild/standard image
    image = "aws/codebuild/docker:17.09.0"
    type  = "LINUX_CONTAINER"
    # Required to run the Docker daemon inside the build container
    privileged_mode = true

    environment_variable {
      name  = "AWS_REGION"
      value = "${data.aws_region.current.name}"
    }

    environment_variable {
      name  = "ECR_ACCOUNT_ID"
      value = "${data.aws_caller_identity.current.account_id}"
    }

    environment_variable {
      name  = "IMAGE_NAME"
      value = "terraform"
    }

    environment_variable {
      name  = "IMAGE_REPO_NAME"
      value = "${aws_ecr_repository.terraform.name}"
    }

    environment_variable {
      name  = "IMAGE_TAG"
      value = "0.11.10"
    }
  }

  source {
    type = "CODEPIPELINE"
  }
}
```

Build environment

A build environment includes an operating system, a programming language runtime and the tools required to run a build. For non-enterprise or test workloads, you can simply use the ready-to-use images provided and managed by AWS, which cover runtimes such as Android, Java, Python, Ruby, Go, Node.js and Docker. At the time of writing, the Windows platform is only supported with a Windows Server Core 2016 base image.

Otherwise, consider a custom environment image from Amazon ECR or another external registry. Such images can be up to 20 GB uncompressed on Linux and 50 GB uncompressed on Windows, regardless of the compute type. Three compute types are available, ranging from 3 GB memory, 2 vCPUs and 64 GB disk (`BUILD_GENERAL1_SMALL`) up to 15 GB memory, 8 vCPUs and 128 GB disk (`BUILD_GENERAL1_LARGE`).
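To illustrate a custom environment image (and the inception mentioned earlier), a second project could run its builds inside the Terraform image this pipeline pushes to ECR. This sketch makes three assumptions: the project name, the `plan.yml` buildspec, and the `0.11.10` tag. With the default pull credentials, CodeBuild pulls the image using its service principal, which the repository policy shown earlier allows. The CodeBuild role's CloudWatch Logs statement later in this article is scoped to `tf-image-build`, so it would need a matching entry for this project.

```hcl
# Hypothetical project that uses the freshly built Terraform image as its build environment
resource "aws_codebuild_project" "tf_plan" {
  name         = "tf-plan"
  service_role = "${aws_iam_role.codebuild_role.arn}"

  artifacts {
    type = "CODEPIPELINE"
  }

  environment {
    compute_type = "BUILD_GENERAL1_SMALL"
    # Custom image from the tf-images ECR repository (tag assumed)
    image = "${aws_ecr_repository.terraform.repository_url}:0.11.10"
    type  = "LINUX_CONTAINER"
  }

  source {
    type      = "CODEPIPELINE"
    buildspec = "plan.yml" # hypothetical buildspec in the source repository
  }
}
```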

Values that are already established, such as the region, the account ID or the identity Terraform is authorized as, are best retrieved dynamically. Terraform data sources do exactly that.

```hcl
data "aws_caller_identity" "current" {}

data "aws_region" "current" {}
```
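These two data sources are enough to assemble the ECR registry host that the buildspec builds from `ECR_ACCOUNT_ID` and `AWS_REGION`. Here is a sketch with hypothetical local value names. The `amazonaws.com` suffix holds for the standard AWS partition; China regions use a different domain.

```hcl
locals {
  # Registry host, e.g. 123456789012.dkr.ecr.eu-west-1.amazonaws.com
  ecr_registry = "${data.aws_caller_identity.current.account_id}.dkr.ecr.${data.aws_region.current.name}.amazonaws.com"

  # Full image reference, the same value the buildspec tags and pushes
  tf_image = "${local.ecr_registry}/${aws_ecr_repository.terraform.name}:0.11.10"
}
```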

Enabling the cache for an AWS CodeBuild project can save notable build time. It also improves resiliency by avoiding external network connections to an artifact repository. Cached data is stored in an S3 bucket and can include all files or only those that do not change frequently between builds.
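For Docker builds specifically, CodeBuild also offers a local cache kept on the build host, including a Docker layer cache. Below is a sketch of that alternative `cache` block, assuming an AWS provider version that supports the `modes` argument. Local caching is best effort: it only helps when consecutive builds land on the same host. The Docker layer mode requires privileged mode, which this project already enables. A project uses one cache type at a time, so this would replace the S3 cache rather than complement it.

```hcl
  # Fragment of an aws_codebuild_project resource (alternative to the S3 cache block)
  cache {
    type  = "LOCAL"
    modes = ["LOCAL_DOCKER_LAYER_CACHE", "LOCAL_SOURCE_CACHE"]
  }
```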

```hcl
# Bucket for the CodeBuild cache, created in the AWS provider's region
resource "aws_s3_bucket" "codebuild" {
  bucket = "codebuild-terraform-images"
  acl    = "private"

  versioning {
    enabled = true
  }

  tags = {
    Service = "CodeBuild"
    Usecase = "Terraform docker images"
    Owner   = "ChaosGears"
  }
}
```

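AWS CodeBuild requires an IAM service role with access to three resources: the ECR repository created earlier, the S3 bucket for cached data, and the project’s log group in CloudWatch Logs. The role also needs a trust policy that lets CodeBuild assume it through AWS Security Token Service (STS).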

```hcl
resource "aws_iam_role_policy" "codebuild_policy" {
  name = "codebuild-terraform-policy"
  role = "${aws_iam_role.codebuild_role.id}"

  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ecr:BatchCheckLayerAvailability",
        "ecr:CompleteLayerUpload",
        "ecr:InitiateLayerUpload",
        "ecr:PutImage",
        "ecr:UploadLayerPart"
      ],
      "Resource": "${aws_ecr_repository.terraform.arn}"
    },
    {
      "Effect": "Allow",
      "Resource": [
        "arn:aws:logs:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:log-group:/aws/codebuild/${aws_codebuild_project.tf_image_build.name}",
        "arn:aws:logs:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:log-group:/aws/codebuild/${aws_codebuild_project.tf_image_build.name}:*"
      ],
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "ecr:GetAuthorizationToken"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:*"
      ],
      "Resource": [
        "${aws_s3_bucket.codebuild.arn}",
        "${aws_s3_bucket.codebuild.arn}/*"
      ]
    }
  ]
}
EOF
}

# Allows the CodeBuild service to assume this role via STS
resource "aws_iam_role" "codebuild_role" {
  name = "codebuild-terraform-images"

  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "codebuild.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}
```

How can this help you?

AWS Code* automation services have been available since ~2015, but they keep being underestimated. Even in AWS cloud environments, we more often encounter Jenkins in practice, despite all its shortcomings. Of course, any technology choice regarding CI/CD tools should be made based on actual requirements. However, sometimes that decision is rooted in insufficient knowledge of what a tool can actually do.

What we have shown today is just a drop in the ocean of native AWS services. In the near future, we aim to publish deeper dives into AWS’ native Code* automation tools.

Thank you for reading — and stay tuned for more!