How to test your Python-based AWS Lambda in production

Step into the world of testing Step Functions, Python wrappers and Lambda function aliases and versions.


Long time no see, guys! This time I am coming back with the third part of our series about one of the many fully serverless projects we delivered at the beginning of this year. Get ready, as I’m about to take you into the world of Step Functions, Python wrappers and Lambda function aliases and versions. Yes, we will need all of that to prove that we can test our Lambda in the production environment. Curious? I bet you are. So, let’s not waste any more time and jump straight to the solution.

Feed the machine

The customer’s top priority was to abstract the AWS ecosystem away from the development team. The developers’ only responsibility would be to code the new algorithms needed for the cross-platform configuration translations. Each algorithm had to be implemented as a Lambda function (for now, only Python is supported) together with all the necessary external services, such as:

- message brokers

- S3 buckets

- Step Functions workflows for algorithm testing

- IAM roles

The only interaction with the application was via a GUI that allowed uploading a zip file containing the new algorithm’s code, dependencies and simple tests:

    handler.py
    metadata.json
    tests/
    packages/

Basically, the first part (uploading the code) was a pretty easy step to make. We leveraged an S3 bucket with API Gateway and a Lambda function to generate pre-signed POSTs and seamlessly upload the package to S3. To extract the metadata, we used S3 events that notified an SQS queue (with a dead letter queue configured). The message was then polled by another Lambda function, and with a little piece of code we were able to save the data we needed to a DynamoDB table.
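To make the S3 to SQS to Lambda hop more concrete, here is a minimal sketch of how the polling function could pull the bucket and object key out of an SQS batch. The function name `extract_uploads` is hypothetical and not taken from our code base; the event shape follows the standard S3 notification format, and the DynamoDB write is left out.

```python
import json
from urllib.parse import unquote_plus


def extract_uploads(sqs_event):
    """Return (bucket, key) pairs for every S3 object referenced in an SQS batch."""
    uploads = []
    for message in sqs_event.get("Records", []):
        # Each SQS message body is the JSON text of one S3 event notification.
        s3_event = json.loads(message["body"])
        # S3 test events ("s3:TestEvent") carry no "Records" key, so default to [].
        for record in s3_event.get("Records", []):
            bucket = record["s3"]["bucket"]["name"]
            # Object keys arrive URL-encoded (for example, a space becomes "+").
            key = unquote_plus(record["s3"]["object"]["key"])
            uploads.append((bucket, key))
    return uploads
```

The returned pairs are what a follow-up step would need in order to download the zip file and store its metadata.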

Saving new package metadata in DynamoDB allowed us to trigger the creation of all aforementioned external services with a combination of CloudFormation and AWS Lambda usage.

Up to this point, we’ve managed to implement the following points: ZIP file in S3, metadata about package in DynamoDB and CloudFormation stack with Lambda function and all mandatory external services required per each algorithm.

Roll up the sleeves and test

We’d been looking for an easy-to-implement and flexible solution for testing the algorithms’ functions and finally came across this repo: https://github.com/alexcasalboni/aws-lambda-power-tuning. Alex did a great job, so we decided to rely on his idea to avoid reinventing the wheel.

Basically, we used the same pattern with some additional notifications and status updates tailored to our needs. For the sake of initial simplicity, we started with 3 memory values (128, 256, 512) set in a metadata.json file included in the algorithm’s ZIP archive:

    {
      "className": "YOUR_ALGORITHM_CLASS",
      "parallelInvocation": false,
      "strategy": "cost",
      "num": 3,
      "powerValues": [128, 256, 512]
    }

- num: this variable defines how many times the test should be executed per algorithm memory value.

- strategy (cost or duration): from the customer’s point of view, newly developed algorithms have to be cost and duration effective. The strategy parameter allowed us to do those analyses.
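A file like this is easy to get wrong, so it may be worth validating it before any test starts. The sketch below is an illustration only: `load_metadata` is a hypothetical helper, and the 128 to 10240 MB range is my assumption based on the current Lambda memory limits, not a rule from our project.

```python
import json

ALLOWED_STRATEGIES = {"cost", "duration"}


def _is_int(value):
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, int) and not isinstance(value, bool)


def load_metadata(raw):
    """Parse metadata.json text and check the fields the test flow relies on."""
    meta = json.loads(raw)
    if meta.get("strategy") not in ALLOWED_STRATEGIES:
        raise ValueError("strategy must be 'cost' or 'duration'")
    if not _is_int(meta.get("num")) or meta["num"] < 1:
        raise ValueError("num must be a positive integer")
    power_values = meta.get("powerValues")
    if not isinstance(power_values, list) or not power_values:
        raise ValueError("powerValues must be a non-empty list")
    for value in power_values:
        # Assumed bounds: Lambda memory currently ranges from 128 to 10240 MB.
        if not _is_int(value) or not 128 <= value <= 10240:
            raise ValueError("invalid memory value: {0!r}".format(value))
    return meta
```

Failing early here keeps a broken upload from ever reaching the Step Functions flow.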

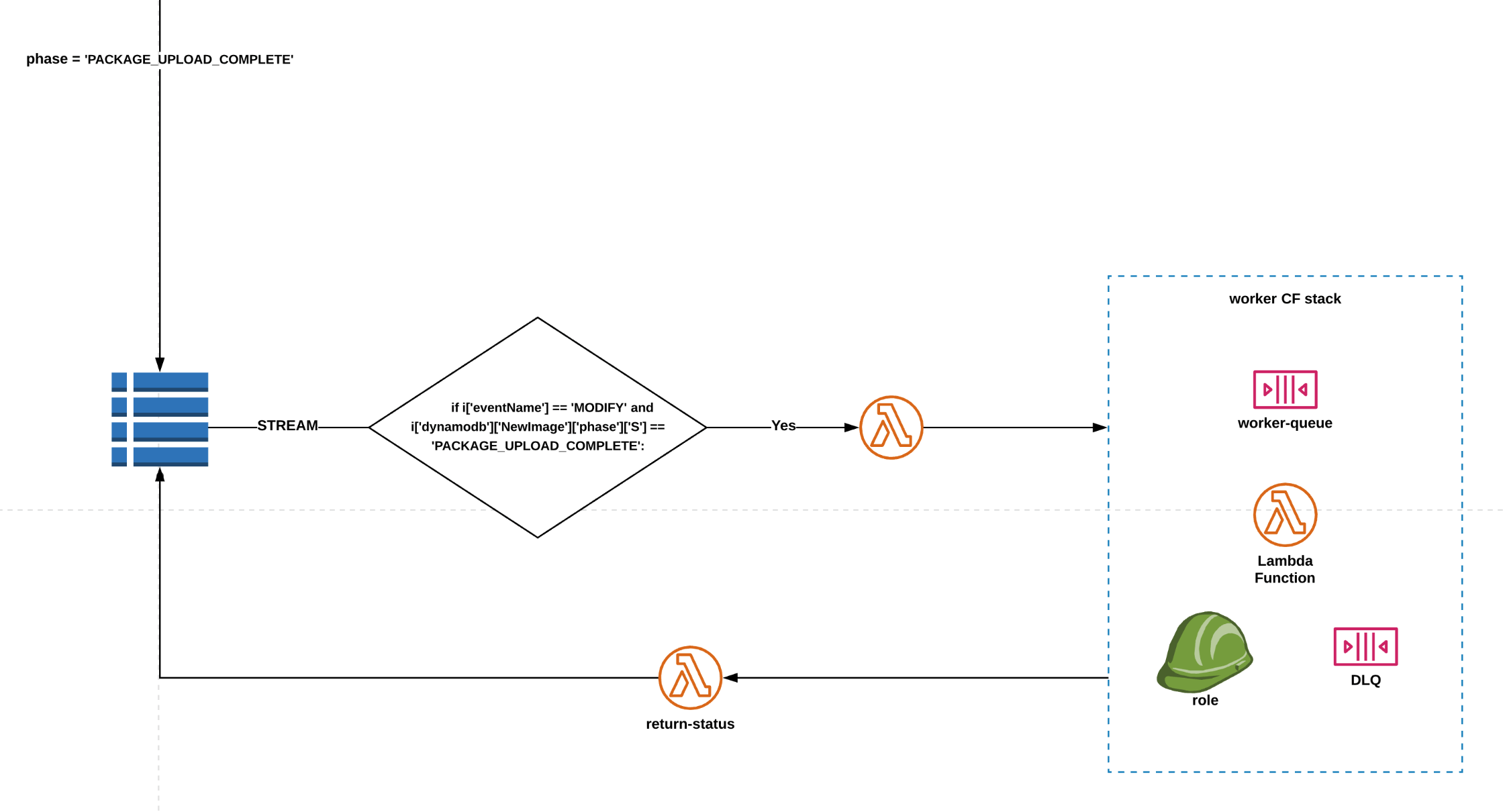

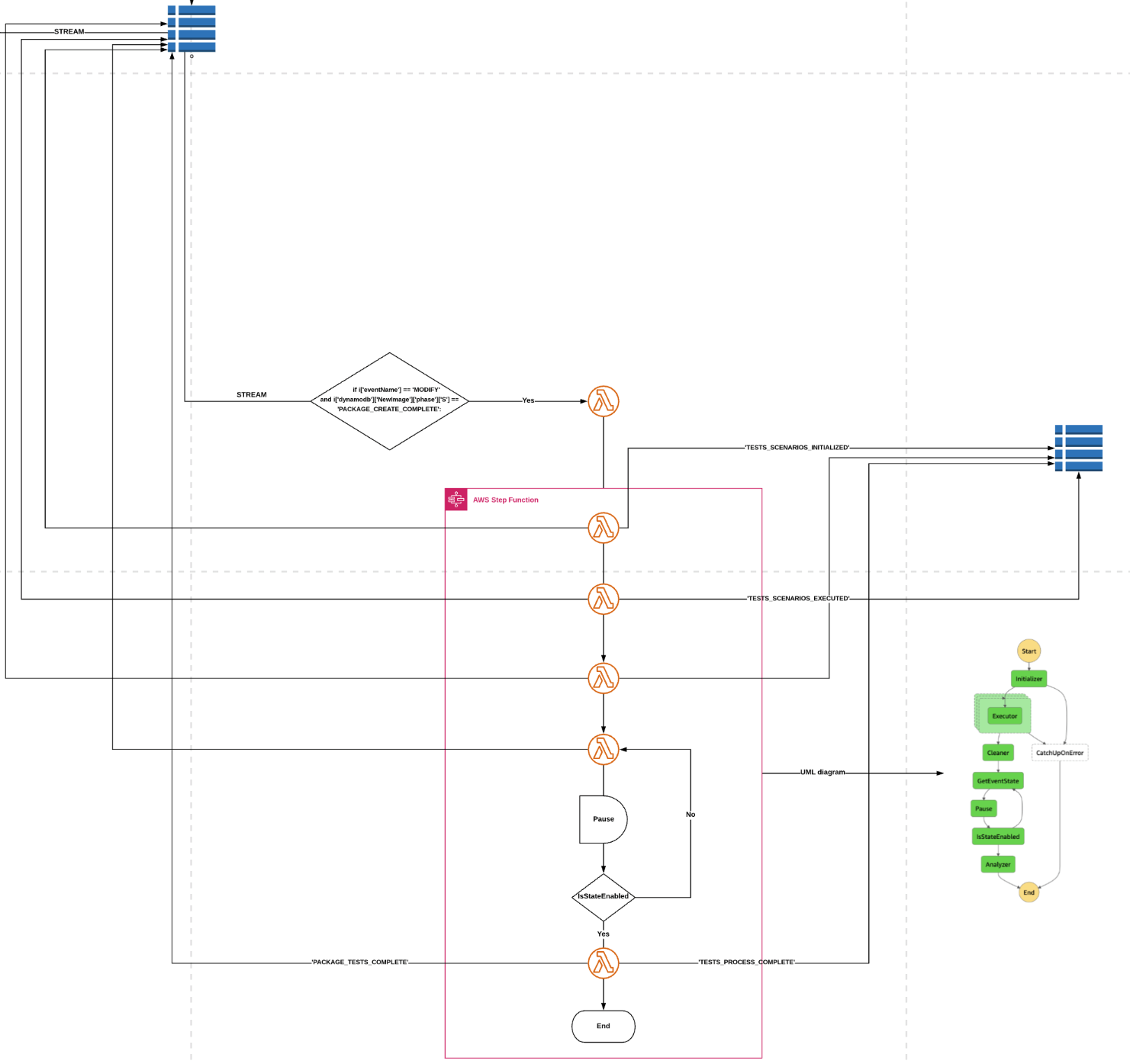

The return status Lambda from the last diagram shown in the previous part of this article is changing the status Attribute in DynamoDB which then, via DynamoDB Streams, calls another Lambda function, launching the Step Functions flow (see picture below).

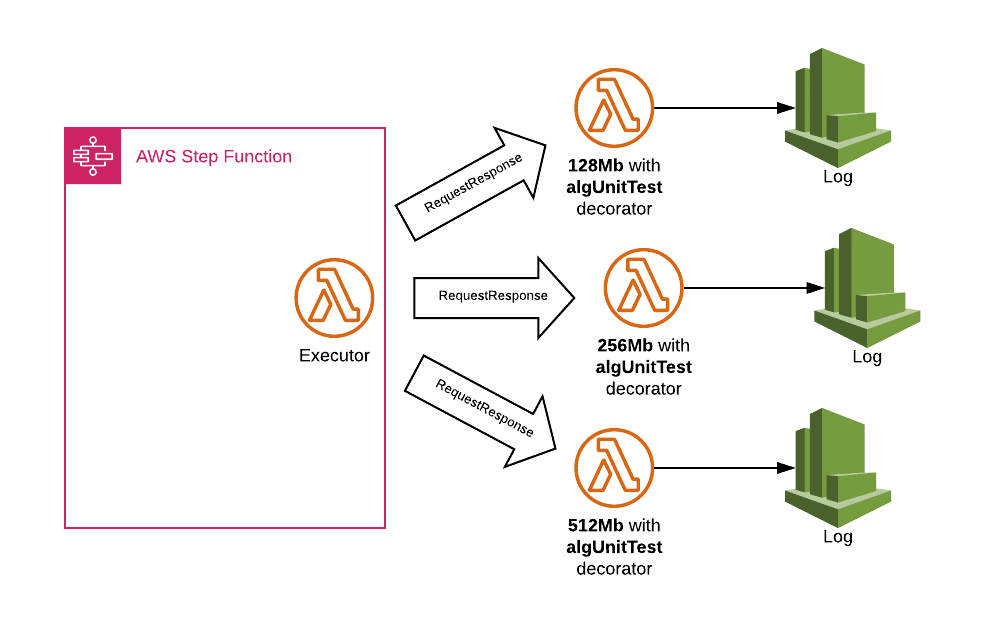
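The stream-triggered function can be quite small. Below is a rough sketch of the idea, not our actual code: the trigger value `READY_FOR_TEST`, the `uploadHash` attribute on the table item and the function name `start_tests` are all assumptions made for illustration. The client is passed in (in Lambda it would be `boto3.client("stepfunctions")`), and the stream is assumed to include new images.

```python
import json


def start_tests(stream_event, sfn_client, state_machine_arn,
                trigger_status="READY_FOR_TEST"):
    """Start one Step Functions execution per stream record with a matching status."""
    started = []
    for record in stream_event.get("Records", []):
        if record.get("eventName") not in ("INSERT", "MODIFY"):
            continue  # REMOVE events carry no NewImage
        image = record["dynamodb"].get("NewImage", {})
        # Stream images use DynamoDB's typed JSON, e.g. {"S": "value"} for strings.
        status = image.get("status", {}).get("S")
        if status != trigger_status:
            continue
        upload_hash = image["uploadHash"]["S"]  # hypothetical attribute name
        response = sfn_client.start_execution(
            stateMachineArn=state_machine_arn,
            input=json.dumps({"uploadHash": upload_hash}),
        )
        started.append(response["executionArn"])
    return started
```

Filtering on the status value matters, because every other update to the same item also shows up in the stream.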

The core of Step Functions flow is the “Executor”, that executes the given Lambda function num times, extracts execution time from logs, and computes average cost per invocation. However, in order to invoke those Lambda functions the “Initializer” has to create N Lambda versions and aliases corresponding to the power values provided as input in metadata.json file beforehand.
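To illustrate the Executor’s arithmetic, here is a hedged sketch of extracting the billed duration from an invocation’s log tail and averaging the cost. It assumes the function was invoked with `LogType="Tail"`, so that the response carries a base64-encoded `LogResult` with the usual REPORT line. The price constant is an assumption (check the current Lambda pricing for your region and architecture), and the per-request charge is ignored.

```python
import base64
import re

# Assumed price per GB-second; verify against current AWS Lambda pricing.
PRICE_PER_GB_SECOND = 0.0000166667

BILLED_RE = re.compile(r"Billed Duration: (\d+(?:\.\d+)?) ms")


def billed_duration_ms(log_result_b64):
    """Extract the billed duration from the base64 log tail of one invocation."""
    log_text = base64.b64decode(log_result_b64).decode("utf-8")
    match = BILLED_RE.search(log_text)
    if match is None:
        raise ValueError("no REPORT line with a billed duration found")
    return float(match.group(1))


def average_cost(billed_durations_ms, memory_mb):
    """Average compute cost in USD per invocation at the given memory size."""
    avg_seconds = sum(billed_durations_ms) / len(billed_durations_ms) / 1000
    return avg_seconds * (memory_mb / 1024) * PRICE_PER_GB_SECOND
```

Running `billed_duration_ms` over the num invocations of one alias and passing the results to `average_cost` gives one data point per memory value.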

From the Step Functions definition you have to configure Type: Map and…

    {
      "ProcessAllTests": {
        "Type": "Map",
        "Next": "Cleaner",
        "ItemsPath": "$.initial.powerValues",
        "ResultPath": "$.stats",
        "Parameters": {
          "value.$": "$$.Map.Item.Value",
          "functionArn.$": "$.functionArn",
          "className.$": "$.className",
          "internalBucket.$": "$.internalBucket",
          "uploadHash.$": "$.uploadHash",
          "num.$": "$.num",
          "testId.$": "$.testId",
          "parallelInvocation.$": "$.parallelInvocation"
        },
        "MaxConcurrency": 0,
        "Catch": [
          {
            "ErrorEquals": ["States.ALL"],
            "Next": "CatchUpOnError",
            "ResultPath": "$.error"
          }
        ],
        "Iterator": {
          "StartAt": "Executor",
          "States": {
            "Executor": {
              "Type": "Task",
              "Resource": "${executorArn}",
              "Retry": [
                {
                  "ErrorEquals": ["TimeOut"],
                  "IntervalSeconds": 3,
                  "BackoffRate": 2,
                  "MaxAttempts": 3
                }
              ],
              "End": true
            }
          }
        }
      }
    }
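The Map state collects one Executor result per memory value under `$.stats`. How a later step might use the strategy parameter on that list can be sketched as follows. The shape of each entry (`value`, `averageCost`, `averageDuration`) is an assumption for illustration, since the Executor’s real output format isn’t shown here, and `pick_power_value` is a hypothetical name.

```python
def pick_power_value(stats, strategy):
    """Return the memory value with the lowest average cost or duration."""
    metric = {"cost": "averageCost", "duration": "averageDuration"}.get(strategy)
    if metric is None:
        raise ValueError("unknown strategy: {0}".format(strategy))
    if not stats:
        raise ValueError("no test results to compare")
    # min() keeps the first entry on ties, so order the input deliberately.
    best = min(stats, key=lambda entry: entry[metric])
    return best["value"]
```

With the cost strategy the smallest memory value often wins, while the duration strategy tends to favor larger ones, which is why both are worth having.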

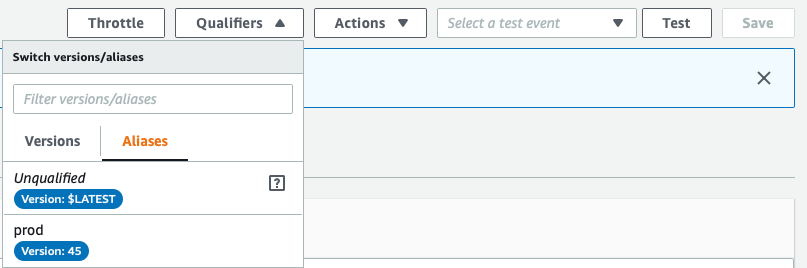

At this point, I have to emphasize one important thing. The production version of particular algorithms is not being impacted during the tests because of ALIAS Lambda feature usage. You might have noticed in the picture shown below that prod is aliased and changed only if Step Functions flow succeeds. That allows us to test Lambda functions in the backstage and do the “main show” on the production stage at the same time.

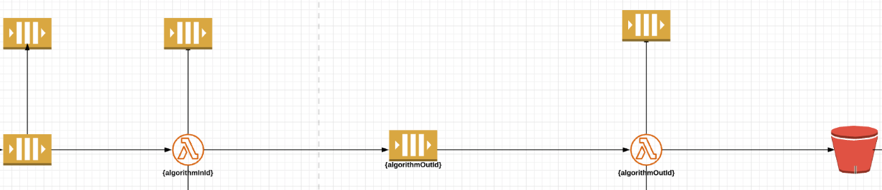

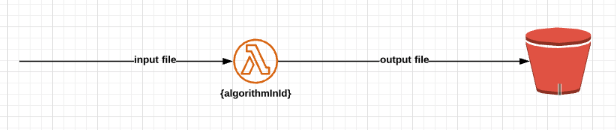
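In terms of API calls, the alias trick could look roughly like the sketch below. It is an illustration rather than our Initializer: the `RAM<value>` alias naming and both function names are hypothetical, and the client is passed in (in Lambda it would be `boto3.client("lambda")`). The calls used are the standard `update_function_configuration`, `publish_version`, `create_alias` and `update_alias` operations.

```python
def create_test_aliases(lambda_client, function_name, power_values):
    """Publish one version per memory value and point a test alias at it."""
    aliases = {}
    waiter = lambda_client.get_waiter("function_updated")
    for value in power_values:
        # This changes $LATEST only; published versions such as prod stay intact.
        lambda_client.update_function_configuration(
            FunctionName=function_name, MemorySize=value
        )
        # Configuration updates are asynchronous, so wait before publishing.
        waiter.wait(FunctionName=function_name)
        version = lambda_client.publish_version(
            FunctionName=function_name
        )["Version"]
        alias = "RAM{0}".format(value)  # hypothetical naming scheme
        lambda_client.create_alias(
            FunctionName=function_name, Name=alias, FunctionVersion=version
        )
        aliases[value] = alias
    return aliases


def promote_to_prod(lambda_client, function_name, version):
    """Repoint the prod alias; meant to be called only after the flow succeeds."""
    lambda_client.update_alias(
        FunctionName=function_name, Name="prod", FunctionVersion=version
    )
```

Because callers only ever invoke the prod alias, nothing they see changes until `promote_to_prod` runs.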

OK, what if I wanted to test my function that was already launched with external services, but also change the type of those services for the sake of tests? In short, in our base scenario each “in” or “out” type of algorithm function takes the data from the message broker and passes it forward to either the next SQS queue or the next S3 bucket (see picture below).

Looks easy, but during the Step Functions test I want it to run (for both “in” and “out” types of functions) without any disruption to the existing base CloudFormation stack. To achieve that, recall the content of the algorithm’s zip file that the dev team can upload:

    handler.py
    metadata.json
    tests/
    packages/

In the tests directory, dev team members can add the files they want to test their algorithm against. When the “Executor” function invokes a particular “test alias”, separately for each memory value, the function thinks that the environment looks like the one shown below. How come, you may ask? I’ll explain that in the next section.

Wrapping Python tests

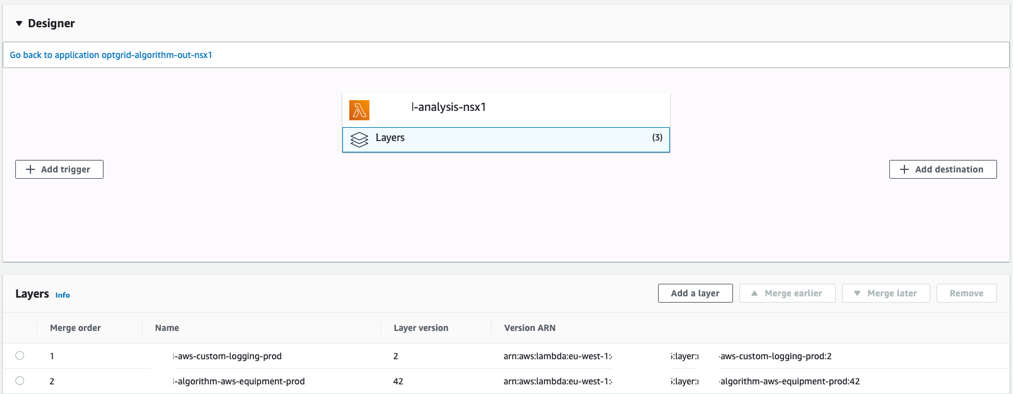

First of all, we needed to pack the “code for interaction” with external services and make it available for both the “in” and “out” types of algorithm. AWS Lambda Layers offered that flexibility. Below, you can see the Lambda function for the “in” algorithm, NSX, with a layer, aws-equipement-prod, containing all the necessary code.

From the developers’ point of view, the only thing missing was the aws_equipment dependency, used for the base scenario (AlgorithmWorker) and for tests (algUnitTest). They didn’t need any additional knowledge about the AWS services used, apart from the Lambda code, the Lambda function’s placement in a given environment, and, last but not least, a way of communicating with those services. Basically, all they wanted was to write the translation code and import code from the layer.

    import json
    import requests
    import sys
    from aws_equipment import AlgorithmWorker, algUnitTest
    from protocols.protocols import get_protocol_number

To make it happen, we had to take one additional step. Namely, we needed to create an object of the class and invoke the method for the particular algorithm type: runIn or runOut. After that, everything took place hidden from our eyes.

    def lambda_handler(event, context):
        application = AlgorithmWorker(NSXParser, event, context)
        application.runOut()

AlgorithmWorker takes 3 arguments: your translating class, plus the event and context that the Lambda handler receives. That is enough for our base scenario, but what about testing?

The idea we decided to follow was to add a wrapper with a “new” functionality for each “memory alias” Lambda. In this example, it was the test scenario. Having already imported the dependency (from aws_equipment import AlgorithmWorker, algUnitTest), we had all the code we needed to invoke it via the “Executor” function.

    @algUnitTest(NSXParser)
    def lambda_handler(event, context):
        application = AlgorithmWorker(NSXParser, event, context)
        application.runOut()

Behind the algUnitTest import we’ve hidden a piece of code that does the things we mentioned before and is launched in the Step Functions flow. With this trick we’re simply validating (just for now, improvements will come) the code written by devs inside the AWS ecosystem. Generally, it’s an entry point for further improvements in the tests area.

    import logging
    import os
    from functools import wraps

    # S3 and get_file are helpers shipped in the aws_equipment layer;
    # their code is not shown in this article.


    def algUnitTest(className):
        def decorator(lambda_handler):
            @wraps(lambda_handler)
            def wrapper(event, context):
                tmp_path = '/tmp/'
                result_path = 'tests/results/'
                results = []
                errors = []
                counter = 0
                try:
                    logging.info("Event: {0}".format(event))
                    s3 = S3(event['internalBucket'])
                    # Test files are bundled with the function code.
                    tests_path = os.environ['LAMBDA_TASK_ROOT'] + "/tests/"
                    files = get_file(pattern='test', path=tests_path)
                    if files != 0:
                        # The test-running code is omitted in this listing.
                        pass
                    else:
                        logging.info("-----No test files found in: {0}".format(tests_path))
                        return {"statusCode": 500, "body": "Missing tests files", "files": files}
                except KeyError as e:
                    logging.critical("-----Key error: {0}".format(e))
                    return {"statusCode": 500, "body": "-----Key error: {0}".format(e)}
                except ImportError as error:
                    logging.critical("-----Import error: {0}".format(error))
                    return {"statusCode": 500, "body": "-----Error path: {0}".format(error)}
                except Exception as exception:
                    logging.critical("-----Error: {0}".format(exception))
                    return {"statusCode": 500, "body": "-----Error: {0}".format(exception)}
                # No early return above, so hand over to the wrapped handler.
                return lambda_handler(event, context)
            return wrapper
        return decorator

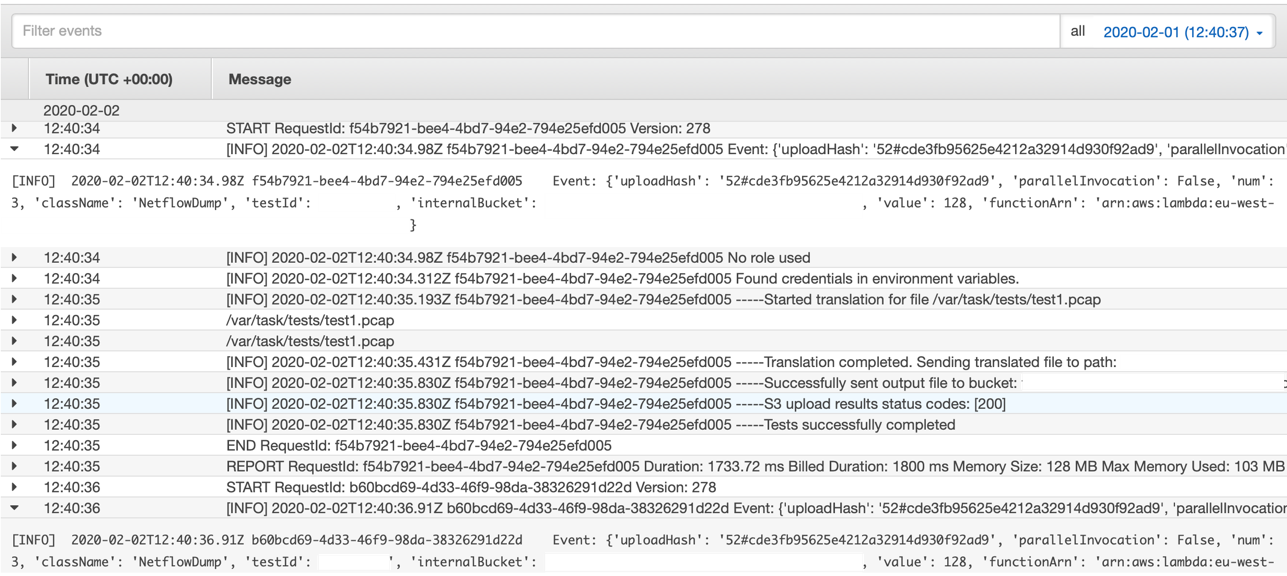

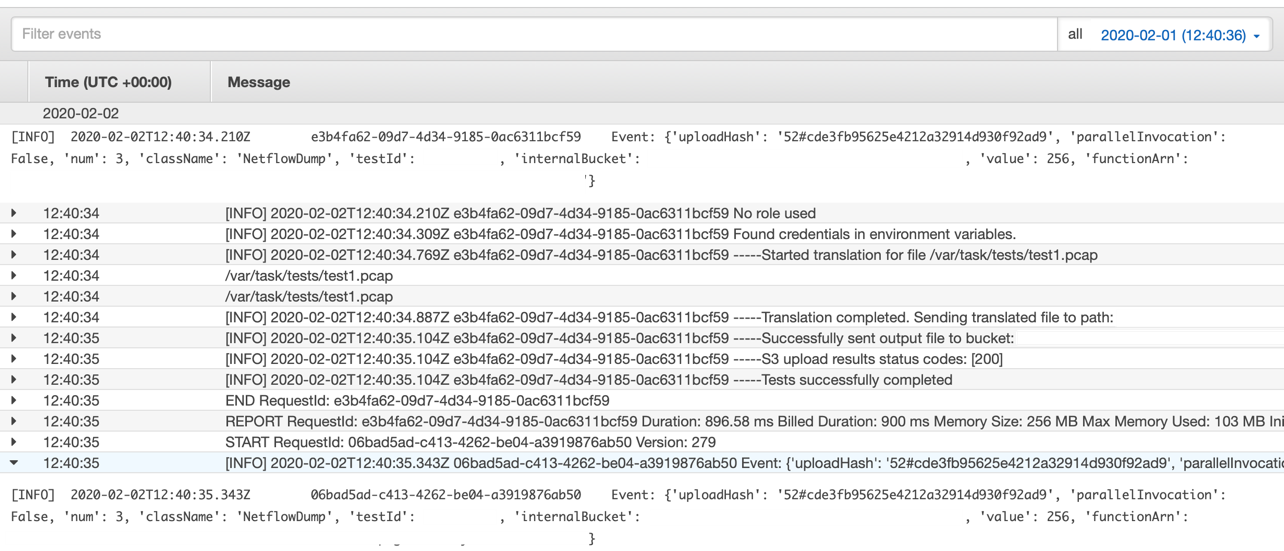

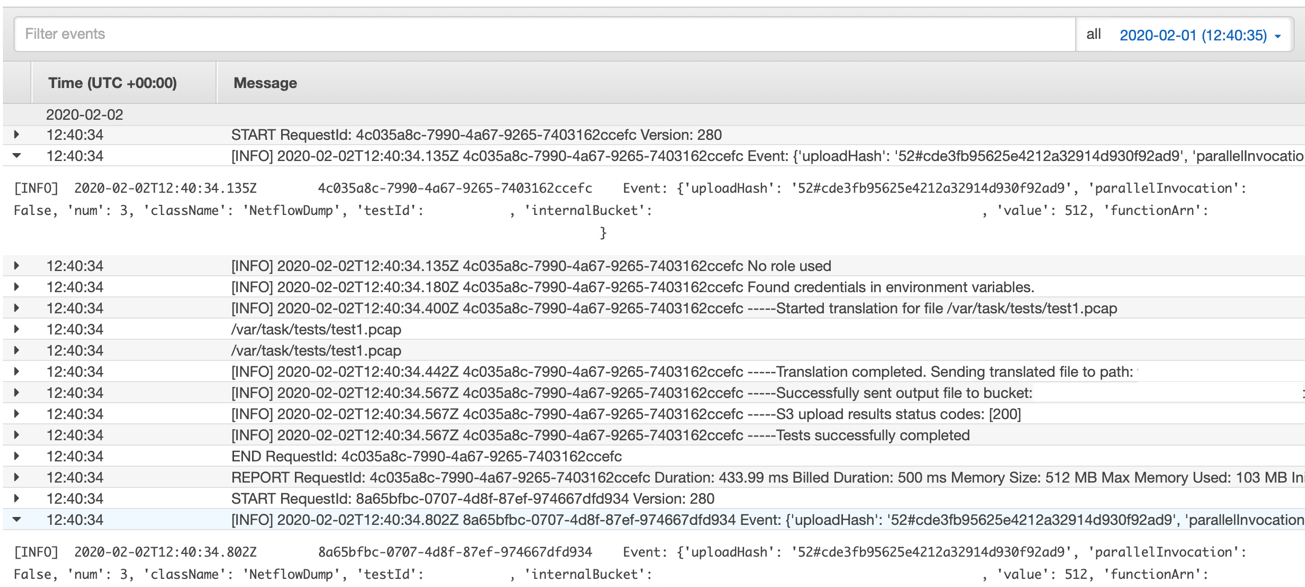

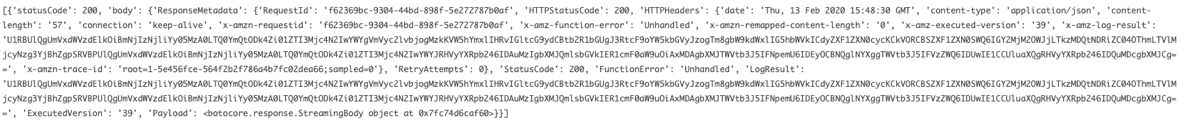

Below are the examples of the particular AWS Lambdas after the Executor’s invocation:

For 128MB of memory

For 256MB of memory

For 512MB of memory

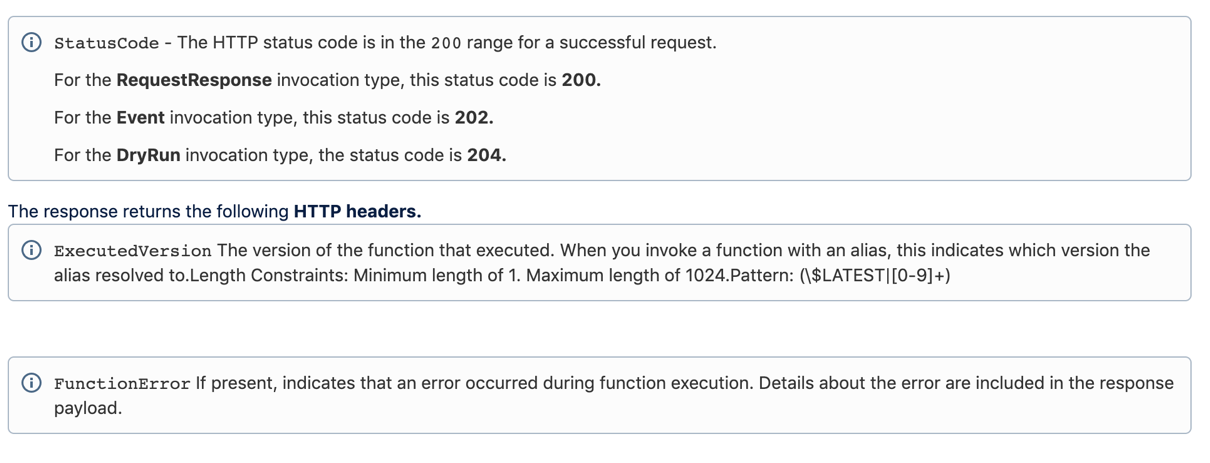

According to the AWS documentation, a successful request with the RequestResponse invocation type returns status code 200.

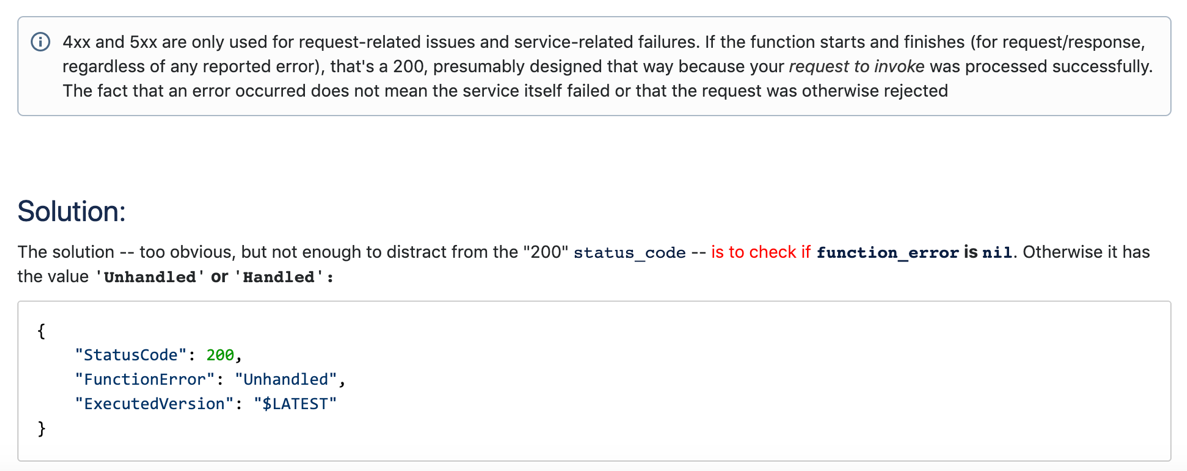

It’s worth mentioning that, in our scenario, receiving a 200 response didn’t mean that the “test” code ended successfully. To make sure your function’s code ran properly, you have to check whether the response contains a FunctionError field (it is absent, i.e. null, on success). If it is present, then, unfortunately, your code failed, and you have to check whether the error is Unhandled or Handled.
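A check along those lines can be sketched in a few lines. `check_invocation` is a hypothetical helper, not part of our code; it takes the dictionary returned by the Lambda client’s `invoke` call, whose `Payload` is a stream that has to be read.

```python
import json


def check_invocation(response):
    """Classify a RequestResponse invoke result as success or failure."""
    # Payload is a stream; an empty body is treated as JSON null.
    payload = json.loads(response["Payload"].read() or b"null")
    error_kind = response.get("FunctionError")  # absent when the code succeeded
    if error_kind is None:
        return {"ok": True, "result": payload}
    # On failure, the payload carries the error details.
    return {"ok": False, "kind": error_kind, "details": payload}
```

Note that the wrapper shown earlier reports some failures by returning a payload with statusCode 500 rather than raising, so a caller may also want to inspect the payload itself even when FunctionError is absent.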

Look at the logs. This one contains a FunctionError: Unhandled line that tells you: Sorry, man, not this time.

And this is what successfully finished code, and its response, should look like:

Before the curtain falls

At this point, I want to emphasize one important thing: The whole example presented above doesn’t prove that production is a good place for testing flows, because it’s not. Thankfully, AWS Lambda allows us to do that with a little help from aliases and wrappers. Just remember that it comes with a much higher risk.

Therefore, whenever you want to do more complex tests, always follow the AWS multi-account scenario for different application environments. In the next part, we’ll talk about the automation of frontend rebuilds and learn how to prepare a flow, using AWS CI/CD services and some custom Lambdas.