Using the saga pattern with AWS Step Functions

Let's explore how we can use AWS Step Functions with the saga pattern to simplify failure handling across distributed transactions.


Everything fails, sooner or later. No matter what type of failure we have to deal with, its aftermath is generally a pain in the ass. This is especially true within distributed microservice systems, when a typical request has to cross multiple bounded contexts (microservices with their independent databases).

It quickly becomes even more complex with serverless architectures. In the vast ocean filled with tiny Lambda functions, it’s pretty easy to come across failures. Moreover, problems may appear in connections between Lambda functions and other AWS services — the network is not reliable, after all.

For example, DynamoDB sets limits on read and write operations. When such a limit is exceeded, there is a penalty, either in the form of a higher monthly AWS bill or rejected requests. In the latter case, we need to deal with that failure scenario, and we might end up with data consistency issues if other functions have mutated the data in the meantime.

And this is precisely the example problem that we’re going to tackle today, by applying the saga pattern to workflows built in AWS Step Functions.

Distributed failures in AWS Step Functions workflows

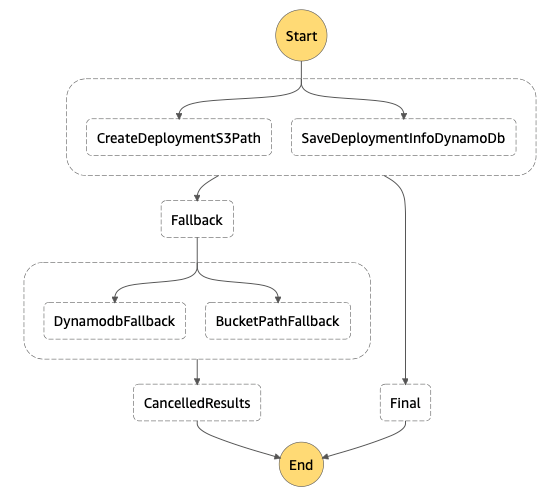

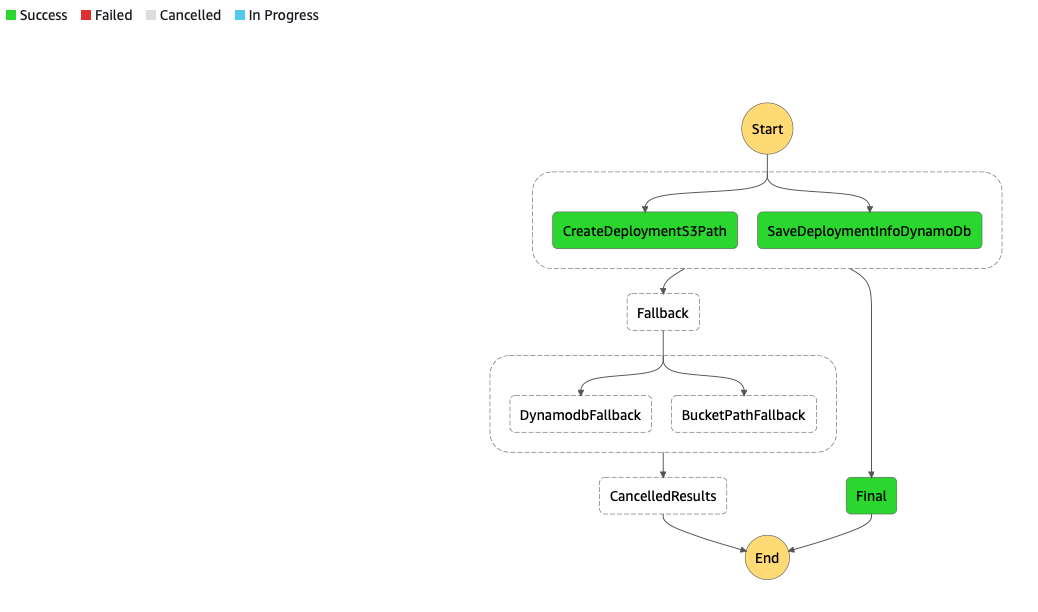

AWS Step Functions allow us to define a state machine using the Amazon States Language (ASL), a JSON-based language that describes the available states of the state machine as well as the transitions between them. AWS generates a nice-looking flowchart from our ASL code, which makes the machine easier to visualize, as seen here. We are going to use it as an example in this article:

AWS Step Functions — example flow

AWS Step Functions give us the ability to build activity flows (not to be confused with state machines) that are really helpful when we have to deal with transaction-like requests. What I have in mind is best explained by Wikipedia’s definition of an “atomic transaction”:

An atomic transaction is an indivisible and irreducible series of database operations such that either all occur, or nothing occurs. A guarantee of atomicity prevents updates to the database occurring only partially, which can cause greater problems than rejecting the whole series outright.

These activity flows consist of small steps (Lambda functions, Wait states, Choice states, etc.) which together make up a single logical flow. If such a flow puts something into S3 and simultaneously saves some metadata (as in our example), then we expect either both operations to complete successfully or neither of them. Realistically, however, one can fail while the other succeeds. What state does that leave our data in?
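To make the problem concrete, here is a minimal sketch of such a partial failure. It assumes two in-memory containers standing in for the S3 bucket and the metadata table; the function names and the `demo-1` identifier are hypothetical and not taken from the real workflow.

```python
# Hypothetical in-memory stand-ins for the S3 bucket and the metadata table.
bucket = {}
table = {}


def create_deployment_path(deployment_id):
    """Stand-in for the step that writes to S3."""
    bucket[f"deployments/{deployment_id}/"] = b""


def save_deployment_info(deployment_id, fail=False):
    """Stand-in for the step that saves metadata; can be forced to fail."""
    if fail:
        raise RuntimeError("write rejected (simulated throttling)")
    table[deployment_id] = {"status": "created"}


def run_without_compensation(deployment_id):
    """Run both steps with no rollback; return True only if both succeed."""
    try:
        create_deployment_path(deployment_id)
        save_deployment_info(deployment_id, fail=True)
        return True
    except RuntimeError:
        return False


ok = run_without_compensation("demo-1")

# Paths in the bucket stand-in that have no matching metadata record.
orphaned = [key for key in bucket if key.split("/")[1] not in table]
print(ok, orphaned)
```

The flow reports a failure, yet the first write is still there: the path exists without its metadata, which is exactly the inconsistent state described above.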

Given that any loss of consistency is completely unacceptable in most use cases, this is precisely the gap where the saga pattern comes in handy.

What’s the saga pattern anyway?

A saga (in distributed computing) could be defined as follows:

A saga is a sequence of local transactions where each transaction updates data within a single service. The first transaction is initiated by an external request corresponding to the system operation, and then each subsequent step is triggered by the completion of the previous one.

In essence, the saga is a failure-handling pattern: when any failure occurs during one of those long-lived flows, we apply the corresponding compensating actions to return to the initial state (the exact state when the saga began).
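The idea can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how Step Functions implement it: `run_saga`, the step names, and the `store` dictionary are all hypothetical. Each step pairs an action with its compensating action, and on failure the completed steps are undone in reverse order.

```python
from typing import Callable, List, Tuple

# (name, action, compensating action)
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step]) -> List[str]:
    """Run steps in order; on failure, compensate completed steps in reverse.

    Returns a log of what happened, purely for illustration.
    """
    log: List[str] = []
    completed: List[Step] = []
    for step in steps:
        name, action, _ = step
        try:
            action()
            log.append(f"done:{name}")
            completed.append(step)
        except Exception:
            log.append(f"failed:{name}")
            for done_name, _, compensate in reversed(completed):
                compensate()
                log.append(f"undone:{done_name}")
            break
    return log


# Hypothetical state touched by the saga.
store = {"s3_path": False, "metadata": False}


def create_path() -> None:
    store["s3_path"] = True


def delete_path() -> None:
    store["s3_path"] = False


def save_metadata() -> None:
    raise RuntimeError("simulated write rejection")


def delete_metadata() -> None:
    store["metadata"] = False


log = run_saga([
    ("create_path", create_path, delete_path),
    ("save_metadata", save_metadata, delete_metadata),
])
print(log)
print(store)
```

After the second step fails, the first one is compensated and `store` is back to its initial values.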

How does the saga pattern help with distributed transactions?

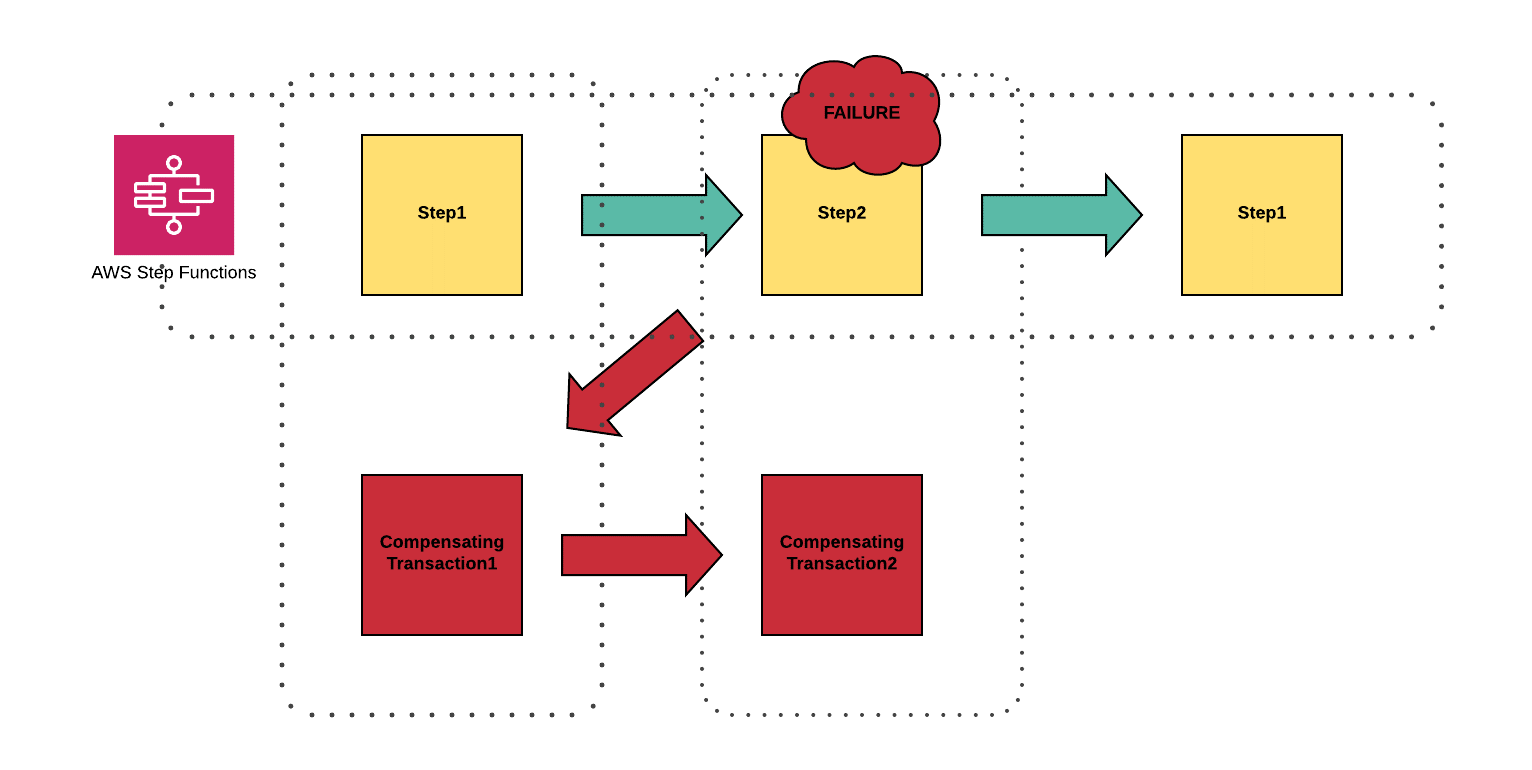

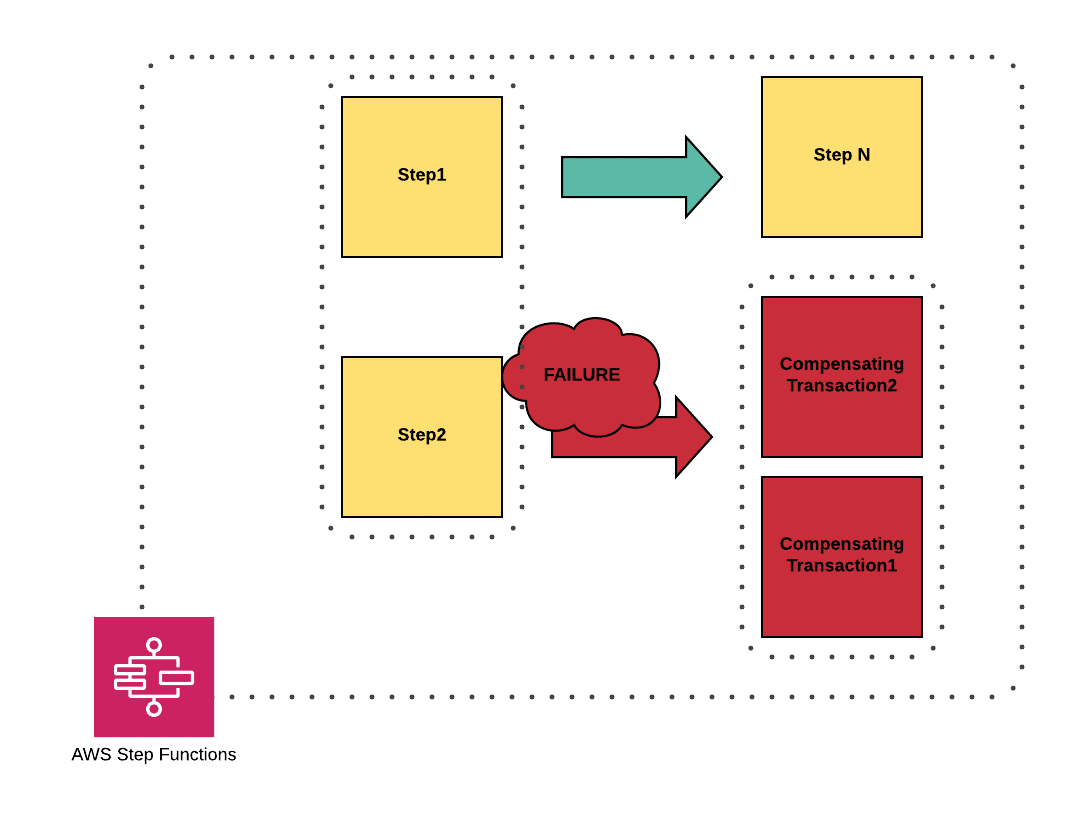

A saga in distributed computing has several steps — not unlike its namesake, which would likely have many chapters. And the key here is that at any given time, during any given chapter (step), we need to be able to simply turn back time — and undo all consequences of prior actions, to return to a pristine state.

At Chaos Gears we tend to use two scenarios: a sequential one, and a parallel one, depending on needs.

Saga pattern — sequential scenario

Saga pattern — parallel step scenario

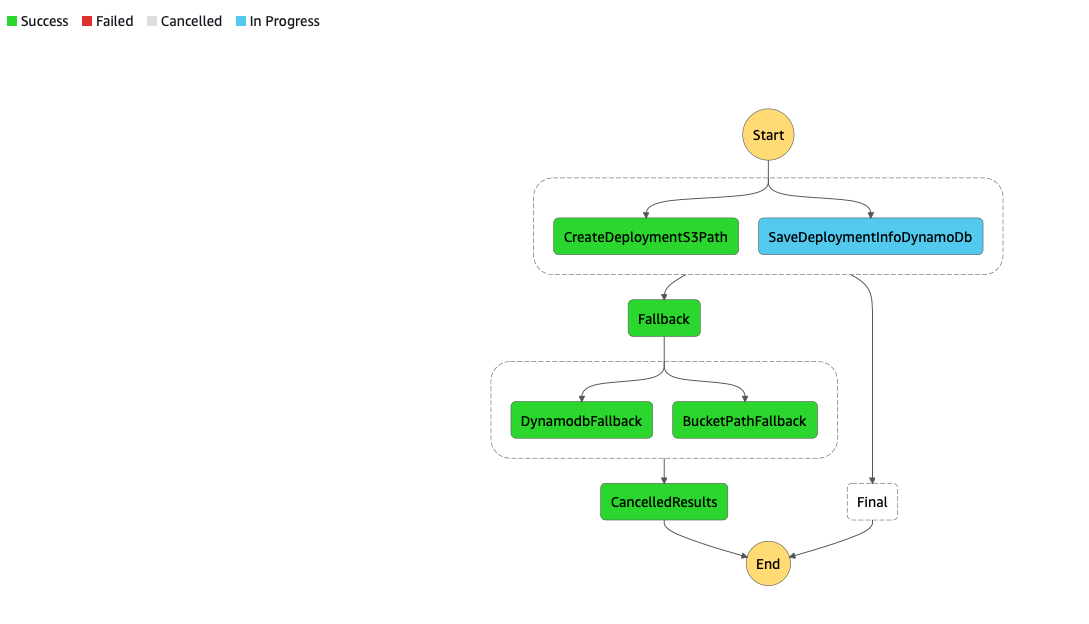

The diagram at the beginning of this article covers the parallel scenario. It contains a step with two Lambda functions, DynamodbFallback and BucketPathFallback, which are the compensating mirror images of SaveDeploymentInfoDynamoDb and CreateDeploymentS3Path. Never mind the names, which were changed for the sake of this article; I hope the point is clear. Whenever you write a Lambda function that changes state as part of a workflow, always think about how to keep the data consistent if it fails. It goes without saying that the compensating functions must be idempotent, since they may be retried or run against a step that never completed.
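A rough local simulation of the parallel scenario might look like the sketch below. It assumes in-memory stand-ins for S3 and DynamoDB, and every function name is hypothetical, loosely modeled on the steps in the diagram. One simplification to note: the sketch waits for both branches to finish before compensating, which is not necessarily how Step Functions treat the remaining branches of a failed parallel step.

```python
from concurrent.futures import ThreadPoolExecutor

bucket = set()  # hypothetical stand-in for S3 key prefixes
table = {}      # hypothetical stand-in for the DynamoDB table


def create_path(dep_id):
    bucket.add(dep_id)


def save_info(dep_id):
    raise RuntimeError("simulated write rejection")


def bucket_path_fallback(dep_id):
    # discard() does nothing when the key is absent, so reruns are safe.
    bucket.discard(dep_id)


def dynamodb_fallback(dep_id):
    # pop() with a default never raises for a missing record.
    table.pop(dep_id, None)


def run_parallel_saga(dep_id):
    """Run both branches concurrently; if either fails, run every compensation."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        futures = [pool.submit(step, dep_id) for step in (create_path, save_info)]
        errors = [f.exception() for f in futures if f.exception() is not None]
    if not errors:
        return "succeeded"
    # We do not track how far each branch got, so we compensate both.
    with ThreadPoolExecutor(max_workers=2) as pool:
        undo = [pool.submit(c, dep_id) for c in (bucket_path_fallback, dynamodb_fallback)]
        for f in undo:
            f.result()  # surface any error raised by a compensation
    return "compensated"


result = run_parallel_saga("demo-1")

# Idempotence check: running the compensations again changes nothing.
bucket_path_fallback("demo-1")
dynamodb_fallback("demo-1")
print(result, bucket, table)
```

Because both compensations tolerate a missing item, it does not matter which branch actually failed: running them against a step that never completed is harmless.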

In case of failure

In case of success

At Chaos Gears we rely on the Serverless Framework for deployments of serverless environments. For us, the biggest benefit of the framework lies in the plugins available in its ecosystem. Basically, you don’t have to code everything from scratch. Just remember that the devil is in the details, so not every plugin will fit your use case exactly.

One of the available plugins handles the configuration of AWS Step Functions. It eases the pain points of building complex flows. Also, I prefer YAML over JSON (I find it easier to read).

Implementation

Below, you’ll see an example flow that matches the diagram shown at the beginning of this article. I want to draw your attention to the states of type “Parallel”, which allow you to invoke several Lambda functions simultaneously. Whenever one of the branches fails, the whole Parallel state is considered failed, and its Catch clause launches the Fallback procedures (compensating transactions).

```yaml
stepFunctions:
  stateMachines:
    Add:
      name: ${self:custom.app}-${self:custom.service_acronym}-add-${self:custom.stage}
      metrics:
        - executionsTimeOut
        - executionsFailed
        - executionsAborted
        - executionThrottled
      events:
        - http:
            path: ${self:custom.api_ver}/path
            method: post
            private: true
            cors:
              origin: "*"
              headers: ${self:custom.allowed-headers}
              origins:
                - "*"
            response:
              statusCodes:
                400:
                  pattern: '.*"statusCode":400,.*' # JSON response
                  template:
                    application/json: $input.path("$.errorMessage")
                200:
                  pattern: '' # Default response method
                  template:
                    application/json: |
                      {
                        "request_id": '"$input.json('$.executionArn').split(':')[7].replace('"', "")"',
                        "output": "$input.json('$.output').replace('"', "")",
                        "status": "$input.json('$.status').replace('"', "")"
                      }
            request:
              template:
                application/json: |
                  {
                    "input" : "{ \"body\": $util.escapeJavaScript($input.json('$')), \"contextid\": \"$context.requestId\", \"contextTime\": \"$context.requestTime\"}",
                    "stateMachineArn": "arn:aws:states:#{AWS::Region}:#{AWS::AccountId}:stateMachine:${self:custom.app}-${self:custom.service_acronym}-add-${self:custom.stage}"
                  }
      definition:
        StartAt: CreateCustomerDeployment
        States:
          CreateCustomerDeployment:
            Type: Parallel
            Next: Final
            OutputPath: '$'
            Catch:
              - ErrorEquals:
                  - States.ALL
                Next: Fallback
                ResultPath: '$.error'
            Branches:
              - StartAt: CreateDeploymentS3Path
                States:
                  CreateDeploymentS3Path:
                    Type: Task
                    Resource: arn:aws:lambda:#{AWS::Region}:#{AWS::AccountId}:function:${self:custom.app}-${self:custom.service_acronym}-function-a
                    TimeoutSeconds: 5
                    End: True
                    Retry:
                      - ErrorEquals:
                          - HandledError
                        IntervalSeconds: 1
                        MaxAttempts: 2
                        BackoffRate: 1
                      - ErrorEquals:
                          - States.TaskFailed
                        IntervalSeconds: 2
                        MaxAttempts: 2
                        BackoffRate: 1
                      - ErrorEquals:
                          - States.ALL
                        IntervalSeconds: 2
                        MaxAttempts: 1
              - StartAt: SaveDeploymentInfoDynamoDb
                States:
                  SaveDeploymentInfoDynamoDb:
                    Type: Task
                    Resource: arn:aws:lambda:#{AWS::Region}:#{AWS::AccountId}:function:${self:custom.app}-${self:custom.service_acronym}-function-b
                    TimeoutSeconds: 5
                    End: True
                    Retry:
                      - ErrorEquals:
                          - HandledError
                        IntervalSeconds: 1
                        MaxAttempts: 2
                        BackoffRate: 1
                      - ErrorEquals:
                          - States.TaskFailed
                        IntervalSeconds: 2
                        MaxAttempts: 2
                        BackoffRate: 1
                      - ErrorEquals:
                          - States.ALL
                        IntervalSeconds: 1
                        MaxAttempts: 2
                        BackoffRate: 1
          Fallback:
            Type: Pass
            InputPath: '$'
            Next: CancelData
          CancelData:
            Type: Parallel
            InputPath: '$'
            Next: CancelledResults
            Branches:
              - StartAt: DynamodbFallback
                States:
                  DynamodbFallback:
                    Type: Task
                    Resource: arn:aws:lambda:#{AWS::Region}:#{AWS::AccountId}:function:${self:custom.app}-${self:custom.service_acronym}-function-b-compensating
                    TimeoutSeconds: 5
                    End: True
                    Retry:
                      - ErrorEquals:
                          - HandledError
                        IntervalSeconds: 1
                        MaxAttempts: 2
                        BackoffRate: 1
                      - ErrorEquals:
                          - States.TaskFailed
                        IntervalSeconds: 2
                        MaxAttempts: 2
                        BackoffRate: 1
                      - ErrorEquals:
                          - States.ALL
                        IntervalSeconds: 1
                        MaxAttempts: 2
                        BackoffRate: 1
              - StartAt: BucketPathFallback
                States:
                  BucketPathFallback:
                    Type: Task
                    Resource: arn:aws:lambda:#{AWS::Region}:#{AWS::AccountId}:function:${self:custom.app}-${self:custom.service_acronym}-function-a-compensating
                    TimeoutSeconds: 5
                    End: True
                    Retry:
                      - ErrorEquals:
                          - HandledError
                        IntervalSeconds: 1
                        MaxAttempts: 2
                        BackoffRate: 1
                      - ErrorEquals:
                          - States.TaskFailed
                        IntervalSeconds: 2
                        MaxAttempts: 2
                        BackoffRate: 1
                      - ErrorEquals:
                          - States.ALL
                        IntervalSeconds: 1
                        MaxAttempts: 2
                        BackoffRate: 1
          CancelledResults:
            Type: Succeed
          Final:
            Type: Pass
            End: True
```
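Each Retry entry in the definition above combines IntervalSeconds, MaxAttempts and BackoffRate. As I read the Amazon States Language specification, the wait before the first retry is IntervalSeconds, every later wait is multiplied by BackoffRate, MaxAttempts caps the number of retries, and a missing BackoffRate defaults to 2.0. The helper below is a hypothetical sketch for reasoning about the resulting schedule; `retry_delays` is not part of any AWS SDK.

```python
from typing import List


def retry_delays(interval_seconds: float = 1,
                 max_attempts: int = 3,
                 backoff_rate: float = 2.0) -> List[float]:
    """Return the wait (in seconds) before each retry of a single retrier."""
    if backoff_rate < 1:
        raise ValueError("BackoffRate must be at least 1.0")
    return [float(interval_seconds * backoff_rate ** attempt)
            for attempt in range(max_attempts)]


# The HandledError retrier used throughout the example flow:
handled = retry_delays(interval_seconds=1, max_attempts=2, backoff_rate=1)

# The States.ALL retrier of CreateDeploymentS3Path, which sets no BackoffRate:
catch_all = retry_delays(interval_seconds=2, max_attempts=1)

print(handled, sum(handled))
print(catch_all, sum(catch_all))
```

With a BackoffRate of 1 the interval stays constant, so a failing step adds only a couple of seconds of waiting before the Catch clause takes over. That seems a reasonable fit for an API-triggered flow, although the right values depend on your own latency budget.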

Summary

Establishing consistency and maintaining it across your distributed system, serverless or not, is the main challenge you face when designing and developing a distributed architecture. It’s incredibly difficult, if not outright impossible most of the time, to handle that task without the saga pattern.

Bear in mind AWS Step Functions won’t solve all your problems, and won’t fit every serverless scenario. However, they do offer a comparatively straightforward way to simplify the complexities (and headaches) of distributed transactions — one we certainly consider a must-have in our AWS toolbox.