Building a serverless app — key technical and business decisions

After nine months of development, it's time to re-evaluate some of the technical and organizational decisions behind our serverless application.

Recently, my teammate and I gave a presentation at the AWS User Group in Cracow about the serverless world, which is not always as “easy and perfect” as it is sometimes presented. Don’t get me wrong, I am not against the “serverless” buzzword, but as with every new technological strategy, it comes with high expectations of quicker solutions to our never-ending problems. Business today has set itself a new goal: time. Everyone therefore looks for every possible way to save as much of it as possible in order to compete with other innovative companies. We’ve been flooded by two game changers: containers and serverless. Today, dear reader, let me focus on the latter, based on the nine months we have spent developing our app, Gearbox.

The source of changes

As most of you probably know, our company, Chaos Gears, was set up by three guys born in the on-premises world. The reason was simple: there we had little chance to drive innovation with technology. Almost three years ago, I started shifting my mindset to a fully cloud-native one. Not because it was something new, and not because everyone was talking about it. You might be surprised by the reason, but for the first time in my life I realized that with the AWS cloud I would be able to solve people’s problems much faster than ever before, paying more attention to the problem itself than to the name of the technology.

At Chaos Gears, we’ve got two areas of continuous improvement. The first is solving companies’ problems with AWS cloud services and third-party tools. The second builds on the experience gathered from our clients: the development of our fully serverless app, Gearbox.

Business goals, not tools

We came up with the idea for Gearbox about a year ago. It was one of those days when you wake up and a well-known truth hits you like a speeding train. That day I realized that the benefits of the cloud are for everyone, but small innovative companies in particular should have a “mediator” that lets them shorten their time to value and bring their brilliant ideas to a successful “global introduction phase.” The critical point of the story is that those companies don’t have to know about all the technological issues and can instead focus entirely on their business goals.

“Keep it simple, stupid” — initial decision

When deciding whether Gearbox should live in the containerized world or the serverless one, we started by setting some critical goals:

- Fully cloud-native project based on AWS and microservices to make it easier to maintain the logic and context of different parts,

- Bounded context, loosely coupled — allowing for automation/development per microservice,

- Perform repetitive, day-to-day duties more efficiently — some automation needed,

- Technology that directly drives business value — we don’t have much time nor many people, so do it efficiently.

We knew it was going to be cloud-native, and we wanted to put all our effort into the product, so that it could have a positive impact on its users in the future. Serverless was what we needed. At that time we weren’t aware of the challenges that were coming.

Why not containers?

I think we decided against container platforms such as Kubernetes, ECS, or Fargate for the following reasons:

- Administrative tasks like security fixes for containers;

- Higher entry level;

- More manual intervention;

- There are always runtime costs;

To make it clear: we would be able to manage a containerized app, but it would probably divert our attention to infrastructure work that we don’t have time for. If you have enough resources and the points above are not a problem for you, go ahead and use containers; use anything that lets you reach your final goal, a product.

It’s all about events

When we started our journey with serverless, all we knew was “no servers” and events. Let’s take a look at the latter first. Dear reader, after these nine months I can say that the hardest part is understanding and connecting the events. Your mindset has to switch entirely to an event-driven model in how you design, implement, and think about your app. Picture a vast ocean of small events that are not always correlated with one another, which makes the whole story even harder. That’s why order matters, along with the ability to combine small functions into a working ecosystem of context-based services. Do not be taken in by the stories about “no servers,” less maintenance, and all those tremendous benefits, because serverless is not a remedy for every problem. Think of it as a powerful weapon that can ease much pain and shorten your release time, but you have to understand why you have chosen this particular approach. What I am trying to say is: do not use serverless because it’s fancy, but for the privilege of focusing much more on the goal and less on infrastructure, especially in the first phase of development.

Avoid waiting and handle failures

Nothing is free, and nothing can be taken for granted. If a technology takes some responsibilities away from you, expect that something else will demand your attention instead. With serverless, time is money. After spending some time with AWS Lambda, we’re sure that functions have to be treated, and coded, as ephemeral and short-lived. Never make the mistake of forcing a function to wait for another invoked function to finish: you pay for the time spent waiting, and seriously, nobody likes wasting money. Asynchronicity is the key. If you need to call another function in the “chain,” invoke it asynchronously without expecting a response, and keep the state information somewhere else, for example in DynamoDB. When the whole process is finished, update the state in DynamoDB. It is also worth keeping in mind that a smart pattern is to invoke functions through SNS (a pub/sub service) or a message queue such as SQS to gain more confidence about data integrity, because everything fails.
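A minimal sketch of the fire-and-forget pattern described above, under a few assumptions: `lambda_client` is expected to behave like a boto3 Lambda client and `state_table` like a boto3 DynamoDB `Table` resource, while the function names, the `job_id` key, and the status values are hypothetical and not taken from Gearbox.

```python
import json
import uuid


def start_next_step(lambda_client, state_table, function_name, payload):
    """Invoke the next function in the chain without waiting for its result."""
    job_id = str(uuid.uuid4())
    # Store the state first, so the invoked function can update it later.
    state_table.put_item(Item={"job_id": job_id, "status": "IN_PROGRESS"})
    lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="Event",  # asynchronous: the call returns immediately
        Payload=json.dumps({"job_id": job_id, **payload}).encode("utf-8"),
    )
    return job_id


def mark_finished(state_table, job_id):
    """Called by the last function in the chain once the work is done."""
    state_table.update_item(
        Key={"job_id": job_id},
        # "status" is a DynamoDB reserved word, hence the #s placeholder.
        UpdateExpression="SET #s = :done",
        ExpressionAttributeNames={"#s": "status"},
        ExpressionAttributeValues={":done": "FINISHED"},
    )
```

The caller is billed only for the time it takes to write the state and hand over the event; it never sits idle waiting for the next function to finish.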

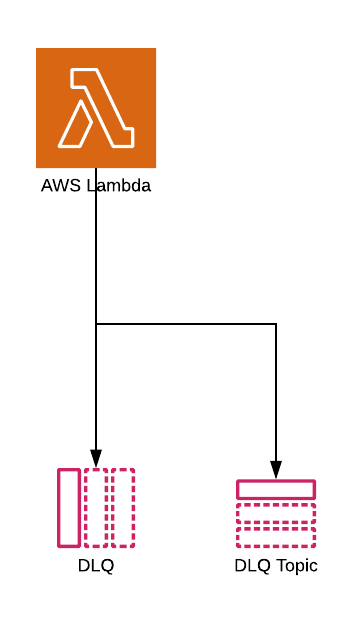

Services like SNS or SQS may save your life thanks to retries and dead-letter queues (DLQs), which serve as a source for post-error analysis.

Below is an example showing part of one of our microservices. Based on the events from S3, we put object metadata in the bucket, then extract the data from SQS via a Lambda function, and finally put the metadata into the proper DynamoDB table. If the Lambda fails while extracting the data, we rely on the DLQ to invoke another function that retries the process or gathers more details about the failure.

Of course, you could use a DLQ directly with Lambda and send the event payload directly to it after exceeding a given number of allowed retries.
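The S3 to SQS to Lambda to DynamoDB flow can be sketched roughly as follows. This is not our production code: it assumes that S3 notifications are delivered straight to the queue (so each SQS message body is an S3 event in JSON), that `table` behaves like a boto3 DynamoDB `Table` resource, and that the attribute names `bucket`, `key`, and `size` are just illustrative.

```python
import json
from urllib.parse import unquote_plus


def extract_metadata(sqs_event):
    """Turn an SQS batch of S3 notifications into flat metadata items."""
    items = []
    for record in sqs_event.get("Records", []):
        s3_event = json.loads(record["body"])
        for s3_record in s3_event.get("Records", []):
            obj = s3_record["s3"]["object"]
            items.append({
                "bucket": s3_record["s3"]["bucket"]["name"],
                # Object keys arrive URL-encoded in S3 notifications.
                "key": unquote_plus(obj["key"]),
                "size": obj.get("size", 0),
            })
    return items


def store_metadata(table, sqs_event):
    """Write every extracted item to the table and return the item count.

    Any exception is deliberately left unhandled: the batch then fails,
    the messages return to the queue, and after the configured number of
    receives they end up in the DLQ.
    """
    items = extract_metadata(sqs_event)
    for item in items:
        table.put_item(Item=item)
    return len(items)
```

Keeping the parsing in a pure function such as `extract_metadata` makes it easy to test without touching AWS at all.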

Cold starts

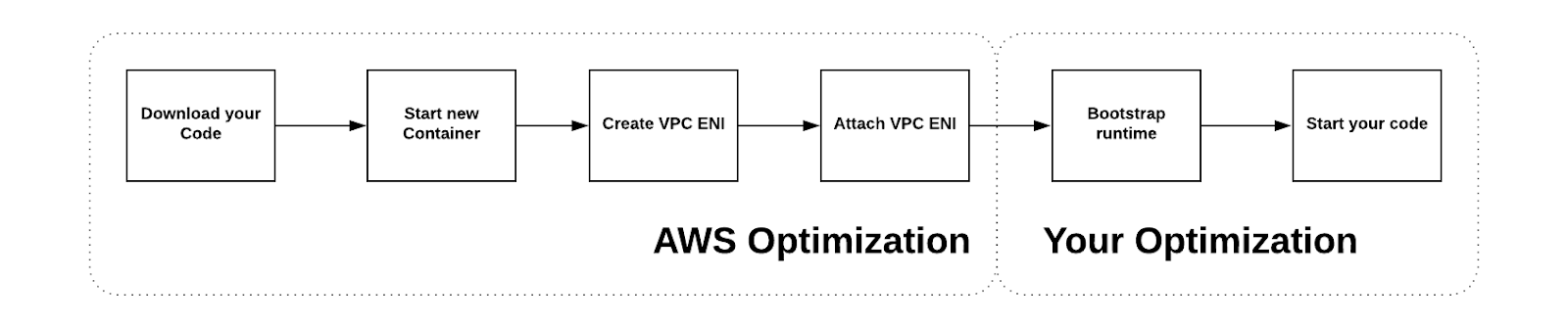

Cold starts might be your enemy in terms of cost. They happen when the first request comes in after deployment. By deployment, I mean the phase when the code and the configuration needed to execute a Lambda are already stored in S3. When the first request comes in, a container is started with the previously defined configuration, and the code to be executed is loaded into memory. After the request is processed, the container stays alive to be reused for subsequent requests.

Generally, the optimization work is split between you and AWS; however, you also have an influence on the AWS part. We know that a Lambda deployment package is limited to 50 MB (zipped), but the smaller the better, because less time is needed to download your code from S3. We use Serverless Framework for all our serverless projects, and it has an option to exclude unnecessary files from the package in the serverless.yml file (just an example):

    package:
      exclude:
        - node_modules/**
        - requirements.txt
        - resources/**

As a result, the size of the package can drop, for example, from megabytes to kilobytes.

Secondly, if you don’t have a reason to put your Lambda in a VPC, then don’t, because you save the time required to create and attach an ENI. Reasons to put your Lambda in a VPC include access to a database located in a private subnet, or internal security policies that disallow launching Lambdas outside a VPC.

When it comes to the runtime bootstrap, you can optimize it by taking care of your code and the size of the event payload, and by moving logic outside the handler (the function executed upon invocation).
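To illustrate the last point, here is a small sketch in which the expensive setup runs at module import time, once per container, instead of on every invocation. The `_load_config` helper, the `INIT_CALLS` counter, and the table name are made up for this example; in a real function this would be the place to create SDK clients or read configuration.

```python
INIT_CALLS = 0


def _load_config():
    """Stand-in for expensive setup such as creating clients or loading files."""
    global INIT_CALLS
    INIT_CALLS += 1
    return {"table": "example-table"}


# Runs once, when the container loads the module (the cold start).
CONFIG = _load_config()


def handler(event, context):
    """Warm invocations reuse CONFIG instead of rebuilding it."""
    return {
        "table": CONFIG["table"],
        "records": len(event.get("Records", [])),
    }
```

Calling `handler` any number of times within the same container leaves `INIT_CALLS` at 1, which is exactly the saving we are after.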

Minimality — a lesson learned from Lambdas

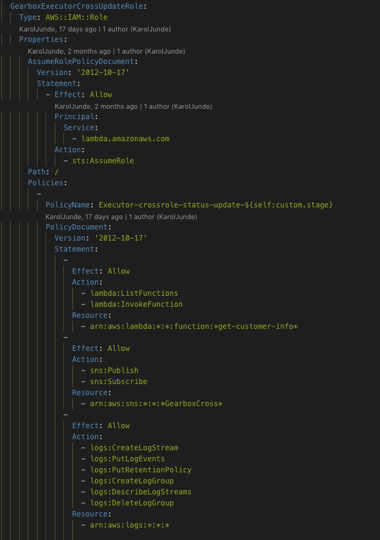

An important lesson we’ve learned during development was to minimise our code and keep a one-to-one relation between Lambdas and IAM roles: 1 Lambda = 1 IAM role. Some of you might consider it an anti-pattern, and that’s OK, but for us it saves a lot of time in maintenance, debugging, and changes such as making IAM roles more “least-privilege.” Generally, it’s much easier to work with a particular Lambda and limit its access to other AWS services than to share one IAM role among multiple Lambdas.

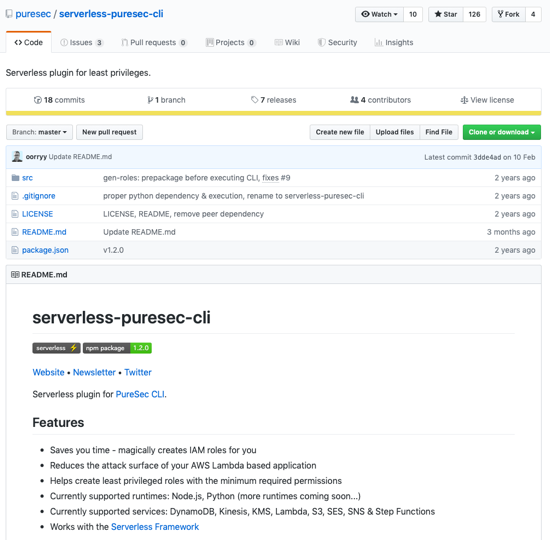

There are plugins such as “serverless-puresec-cli” that generate IAM roles for you. We haven’t tested it yet, but it’s nice to know that such a tool exists.

Source: GitHub

As I mentioned before, we use Serverless Framework and create IAM roles as external resources attached to Lambdas. Do not kill me for the “*”; I know it looks awful, but it is a nice starting point for limiting them in the future.
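As a rough idea of where that limiting could lead, the sketch below builds a policy document for a single Lambda that reads from one SQS queue and writes to one DynamoDB table, plus a tiny check that flags any remaining “*”. Both helper names and the ARNs in the usage note are hypothetical; this is one possible shape of a least-privilege policy, not the roles we actually deploy.

```python
def build_policy(table_arn, queue_arn):
    """Policy for one Lambda: consume one queue, write to one table."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["dynamodb:PutItem"],
                "Resource": table_arn,
            },
            {
                "Effect": "Allow",
                # Permissions an SQS-triggered Lambda needs on its queue.
                "Action": [
                    "sqs:ReceiveMessage",
                    "sqs:DeleteMessage",
                    "sqs:GetQueueAttributes",
                ],
                "Resource": queue_arn,
            },
        ],
    }


def find_wildcards(policy):
    """Return (statement index, field) pairs that still contain a '*'."""
    hits = []
    for index, statement in enumerate(policy.get("Statement", [])):
        for field in ("Action", "Resource"):
            value = statement.get(field, [])
            values = [value] if isinstance(value, str) else value
            if any("*" in entry for entry in values):
                hits.append((index, field))
    return hits
```

For example, `find_wildcards(build_policy("arn:aws:dynamodb:eu-west-1:123456789012:table/example", "arn:aws:sqs:eu-west-1:123456789012:example"))` returns an empty list, while a statement with `"Resource": "*"` would be reported.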

Serverless — does it mean less?

Whenever we’re introduced to something containing “less,” we instantly switch off our vigilance. For us, serverless doesn’t mean less. We knew it was going to challenge us, but it also brings us a lot of fun (even during weekends; my wife hates me for that). We learn every day and come across obstacles we didn’t know about before. Closing the first part of this article, I’ll tell you one thing: startups are all about experimenting and searching for ways to make things better.

Right now we’ve got:

- 51 Lambda functions

- Python 3.6

- 6 microservices

- 7 repos on GitHub

- Combination of Serverless Framework and Terraform