Sharing API Gateway endpoints to bypass CloudFormation limits

Exploring how to get around hard resource limits in AWS CloudFormation templates managed by Serverless Framework.

AWS CloudFormation has hard limits, and one in particular can be quite annoying: the “maximum number of resources that you can declare in your AWS CloudFormation template”. At the time of writing, it is limited to 200. Having hit this limit myself, I am going to show you a method that worked for us to get around it.

We will also cover how to share API Gateway endpoints and custom domains.

CloudFormation limits equal Serverless Framework limits

When using Serverless Framework, you are dealing with CloudFormation under the hood, and becoming familiar with all of its constraints takes time.

Each time you type sls deploy, you launch a new CloudFormation stack or update an existing one.

    Plugin: Deploy
    deploy ......................... Deploy a Serverless service
    deploy function ................ Deploy a single function from the service
    deploy list .................... List deployed version of your Serverless service
    deploy list functions .......... List all the deployed functions and their versions
        --conceal ......................... Hide secrets from the output (e.g. API Gateway key values)
        --stage / -s ...................... Stage of the service
        --region / -r ..................... Region of the service
        --package / -p .................... Path of the deployment package
        --verbose / -v .................... Show all stack events during deployment
        --force ........................... Forces a deployment to take place
        --function / -f ................... Function name. Deploys a single function (see 'deploy function')
        --aws-s3-accelerate ............... Enables S3 Transfer Acceleration making uploading artifacts much faster

After a stack is updated, the number of resources it contains is displayed in the resource summary. The count includes all resources that are part of your service, not just functions: IAM roles, database tables, S3 buckets, SQS queues, and much more.

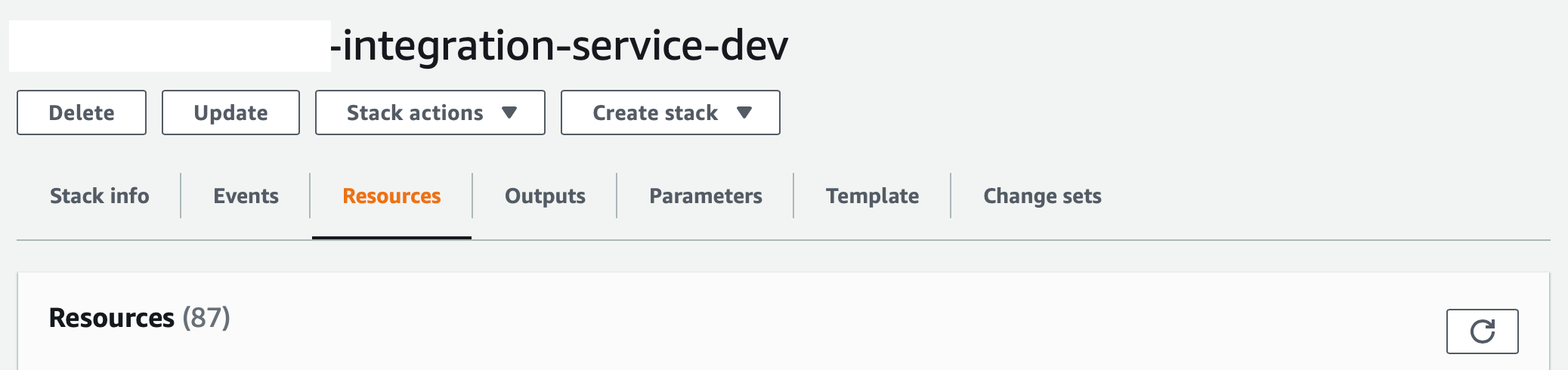

    Serverless: Packaging services...
    Serverless: Excluding development dependencies...
    Serverless: Injecting required Python packages to package...
    Serverless: WARNING: Function {...} has timeout of 60 seconds, however, it's attached to API Gateway so it's automatically limited to 30 seconds.
    Serverless: Uploading CloudFormation file to S3...
    Serverless: Uploading artifacts...
    Serverless: Uploading service {...}
    Serverless: Validating templates...
    Serverless: Updating Stack...
    Serverless: Checking Stack update progress.........................................................
    Serverless: Stack update finished...
    Service Information
    Service: ...-integration-service
    stage: dev
    region: eu-central-1
    stack: ...-integration-service-dev
    resources: 87
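The same count can be checked before a deployment. The following is a minimal Python sketch, not part of the original setup: it assumes you have a compiled CloudFormation template as a dictionary (Serverless Framework typically writes one to .serverless/cloudformation-template-update-stack.json when packaging). The sample template and its logical IDs are hypothetical and trimmed down for illustration; the limit of 200 is the figure quoted in this article.

```python
import json
from collections import Counter

# Limit quoted in this article; treat it as a parameter, since AWS may change it.
RESOURCE_LIMIT = 200


def summarize_resources(template: dict, limit: int = RESOURCE_LIMIT) -> dict:
    """Count the resources in a CloudFormation template, grouped by type."""
    resources = template.get("Resources", {})
    by_type = Counter(res["Type"] for res in resources.values())
    total = len(resources)
    return {"total": total, "remaining": limit - total, "by_type": dict(by_type)}


# Hypothetical, heavily trimmed template: one HTTP-triggered function
# already expands into several CloudFormation resources.
SAMPLE_TEMPLATE = json.loads("""
{
  "Resources": {
    "GetLambdaFunction": {"Type": "AWS::Lambda::Function"},
    "GetLogGroup": {"Type": "AWS::Logs::LogGroup"},
    "ApiGatewayRestApi": {"Type": "AWS::ApiGateway::RestApi"},
    "ApiGatewayResourceV1": {"Type": "AWS::ApiGateway::Resource"},
    "ApiGatewayMethodV1TenantIdVarGet": {"Type": "AWS::ApiGateway::Method"},
    "GetLambdaPermissionApiGateway": {"Type": "AWS::Lambda::Permission"}
  }
}
""")

summary = summarize_resources(SAMPLE_TEMPLATE)
# prints "6 resources, 194 left before the limit"
print(summary["total"], "resources,", summary["remaining"], "left before the limit")
```

To run it against a real project, replace SAMPLE_TEMPLATE with the result of json.load() on the compiled template file.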

The AWS Console also provides this information in the CloudFormation dashboard.

Unfortunately, I missed this during development and ended up with almost 200 resources. In the end, though, the CloudFormation limit itself was not the main obstacle: first I had to figure out how to break the service into multiple logical services while keeping one common API Gateway.

By default, each Serverless project generates a new API Gateway.

Open source solutions

My first thought upon hitting this limit was: let’s find an out-of-the-box solution.

The most reasonable tool seemed to be github.com/dougmoscrop/serverless-plugin-split-stacks. If I were starting a project from scratch, this plugin would, hopefully, save me time and reduce my worries about limits.

However, that was not my situation: I already had about 40 Lambda functions running, along with some additional AWS services, and more parts of the microservice planned. Meanwhile, the tool’s README.md clearly states:

“It is a good idea to select the best strategy for your needs from the start because the only reliable method of changing strategy later on is to recreate the deployment from scratch.”

No way, not on a Saturday.

That said, if you are starting a new Serverless project, consider the plugin. Out of the box, it supports several types of splits: per Lambda, per type, and per Lambda group.

Moving beyond a single service

I had one main microservice contained in a single directory (see below). My idea was to retain one microservice but extract several of its components. To be clear, I am not talking about extracting business logic, but about extracting a bunch of modules, that is, a large number of Lambda functions, that still work as part of one service.

My initial directory:

    ├── service-a
    │   ├── files
    │   ├── functions
    │   ├── helpers
    │   ├── node_modules
    │   ├── package-lock.json
    │   ├── package.json
    │   ├── requirements.txt
    │   ├── resources
    │   ├── serverless.yml
    │   └── tests

It is worth highlighting that you can follow this pattern if your application has many nested paths (presented below with service-a and service-b) and your goal is to split them into smaller services. Although the two services are deployed via different serverless.yml files, both service-a and service-b reference the same parent path, /posts.

Keep in mind that CloudFormation will throw an error if we try to generate a path resource that already exists. More on how to deal with that in the sections below.

    service: service-a
    functions:
      create:
        handler: posts.create
        events:
          - http:
              method: post
              path: /posts

    service: service-b
    functions:
      create:
        handler: posts.createComment
        events:
          - http:
              method: post
              path: /posts/{id}/comments
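To see where the two configurations collide, here is a small Python sketch. It assumes both services are deployed into the same REST API and that each stack tries to create every path resource it needs; the helper names are made up for this illustration and are not part of Serverless Framework.

```python
def path_resources(path: str) -> list[str]:
    """Return every API Gateway resource path needed to serve `path`."""
    parts = [p for p in path.strip("/").split("/") if p]
    return ["/" + "/".join(parts[: i + 1]) for i in range(len(parts))]


def conflicting_resources(paths_a: list[str], paths_b: list[str]) -> set[str]:
    """Resources that two independently deployed stacks would both try to create."""
    needed_a = {r for p in paths_a for r in path_resources(p)}
    needed_b = {r for p in paths_b for r in path_resources(p)}
    return needed_a & needed_b


service_a = ["/posts"]
service_b = ["/posts/{id}/comments"]

print(path_resources("/posts/{id}/comments"))
# ['/posts', '/posts/{id}', '/posts/{id}/comments']
print(conflicting_resources(service_a, service_b))
# {'/posts'}
```

The overlap, /posts, is the resource that only one stack may create; the other stack has to reference it instead.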

After the split, I ended up with directories like the ones shown below, each having its own functions and sharing some AWS resources.

    ├── service-a-module-1
    │   ├── files
    │   ├── functions
    │   ├── helpers
    │   ├── node_modules
    │   ├── package-lock.json
    │   ├── package.json
    │   ├── requirements.txt
    │   ├── resources
    │   ├── serverless.yml
    │   └── tests
    └── service-a-module-2
        ├── functions
        ├── helpers
        ├── package-lock.json
        ├── package.json
        ├── requirements.txt
        ├── resources
        ├── serverless.yml
        └── tests

Now that we’ve got a basic structure to conquer stack limits, let’s talk about sharing API Gateway endpoints and custom domains.

Sharing custom domains in API Gateway

If you create multiple API services via serverless.yml, they will all have unique API endpoints. You can assign different base paths of a custom domain to your services. For example, api.example.com/service-a can point to one service, while api.example.com/service-b can point to another one. But if you try to split up your service-a, you’ll need to figure out how to share your custom domain across the resulting parts.

- service-a-api ⇒ GET api.example.com/service-a/{bookingId}

- service-a-api ⇒ POST api.example.com/service-a

- service-a-api ⇒ PUT api.example.com/service-a/{bookingId}

- service-b-api ⇒ POST api.example.com/service-b

So, what’s the issue?

Generally, each path part is a separate API Gateway resource, and each path part is a child resource of the preceding one. So the path part /service-a is a child resource of /, and /service-a/{bookingId} is a child resource of /service-a.

Going further, we would like the service-b-api to have the /service-b path. This would be a child resource of /. However, / is created in the service-a service. So, we need to find a way to share the resource across services.
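The parent-child relationship can be made explicit with a short Python sketch. It only models the resource tree for the example routes above; parent_map is a hypothetical helper written for this illustration, not an API Gateway call.

```python
def parent_map(paths: list[str]) -> dict[str, str]:
    """Map each API Gateway resource path to the path of its parent resource."""
    parents: dict[str, str] = {}
    for path in paths:
        parent = "/"
        for part in (p for p in path.strip("/").split("/") if p):
            current = parent.rstrip("/") + "/" + part
            parents[current] = parent
            parent = current
    return parents


routes = ["/service-a/{bookingId}", "/service-a", "/service-b"]
tree = parent_map(routes)

for resource, parent in sorted(tree.items()):
    print(f"{resource} -> child of {parent}")
# /service-a -> child of /
# /service-a/{bookingId} -> child of /service-a
# /service-b -> child of /
```

Both /service-a and /service-b hang off the same root resource, which is why the stack that owns / has to expose it to the other one.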

The solution I went for was…

Sharing API Gateway endpoints

As my case was about sharing the same API endpoint among logical modules, I started with the Serverless Framework documentation. Given that it contains only a brief explanation without detailed examples, I decided to search further. I divided my problem into separate parts and focused on the endpoint I had created via the initial service-a directory.

Understanding that all child and root resources have their own “IDs” (b6xm330b00.execute-api.eu-west-1.amazonaws.com/dev pointing to bqdplee0re, /v1 pointing to i2315j…), I knew I had to find a way to keep two different microservice modules (defined in separate serverless.yml files) pointing to one common endpoint. I made some attempts based on the Serverless Framework docs, but each time I tried to deploy service-a-module-2 via sls deploy, I got an error stating that I was trying to generate an existing path resource, /v1.

The actual solution turned out to be simple:

- Create API Gateway endpoint in the first service module,

- Create API Gateway PathPart resource in the first service module,

- Share root and, if needed, child path parts,

- Import outputs in the second module,

- If you’re sharing child path, use restApiResources in the module you’re sharing to,

- Configure your paths in Lambda functions/Step Functions in both modules.

The serverless.yml of service-a-module-1:

    provider:
      name: aws
      runtime: python3.6
      region: eu-west-1
      stage: dev
      memorySize: 128
      logRetentionInDays: 14
      apiGateway:
        minimumCompressionSize: 1024
        restApiResources:
          v1: !Ref VersionPath
      timeout: 60
      versionFunctions: true
      api_verFunctions: true
      environment:
        log_level: ${self:custom.log-level}
      tags:
        Owner: chaosgears
        Project: ${self:custom.app}
        Service: ${self:custom.service_acronym}
        Environment: ${self:custom.stage}
    functions:
      get:
        name: ${self:custom.app}-${self:custom.service_acronym}-get
        handler: functions/get.lambda_handler
        role: GetMetadata
        runtime: python3.6
        memorySize: 128
        reservedConcurrency: 10
        environment:
          tablename: ${self:custom.tablename}
        tags:
          Name: ${self:custom.app}-${self:custom.service_acronym}-get
        layers:
          - arn:aws:lambda:${self:provider.region}:#{AWS::AccountId}:layer:custom-logging-${self:custom.stage}:${self:custom.logging-layer-version}
        events:
          - http:
              path: ${self:custom.api_ver}/tenant/{id}
              method: get
              private: true
              integration: lambda

Additionally, I had to define, in an external file, a resource for the child API Gateway path (/v1). The output values that I wanted to export (ApiGatewayId, the root path ID referencing /, and the child path /v1) also had to be shared with service-a-module-2:

    Resources:
      VersionPath:
        Type: AWS::ApiGateway::Resource
        Properties:
          RestApiId:
            Ref: ApiGatewayRestApi
          ParentId:
            Fn::GetAtt: [ApiGatewayRestApi, RootResourceId]
          PathPart: ${self:custom.api_ver}
    Outputs:
      ApiGatewayRestApiId:
        Value:
          Ref: ApiGatewayRestApi
        Export:
          Name: ${self:custom.stage}-${self:custom.app}-${self:custom.service_acronym}-restapi-id
      ApiGatewayRestApiRootResourceId:
        Value:
          Fn::GetAtt:
            - ApiGatewayRestApi
            - RootResourceId
        Export:
          Name: ${self:custom.stage}-${self:custom.app}-${self:custom.service_acronym}-root-id
      ApiGatewayResourceVersionPath:
        Value:
          Ref: VersionPath
        Export:
          Name: ${self:custom.stage}-${self:custom.app}-${self:custom.service_acronym}-path

Here, the child path is defined by PathPart: ${self:custom.api_ver}. The second module, service-a-module-2, imports the exported values in its own serverless.yml:

    provider:
      name: aws
      runtime: python3.6
      region: eu-west-1
      stage: dev
      memorySize: 128
      logRetentionInDays: 14
      apiGateway:
        restApiId:
          'Fn::ImportValue': ${self:custom.stage}-${self:custom.app}-${self:custom.service_acronym}-restapi-id
        restApiRootResourceId:
          'Fn::ImportValue': ${self:custom.stage}-${self:custom.app}-${self:custom.service_acronym}-root-id
        restApiResources:
          /v1:
            'Fn::ImportValue': ${self:custom.stage}-${self:custom.app}-${self:custom.service_acronym}-path
      timeout: 60
      versionFunctions: true
      api_verFunctions: true
      environment:
        log_level: ${self:custom.log-level}
      tags:
        Owner: chaosgears
        Project: ${self:custom.app}
        Service: ${self:custom.service_acronym}
        Environment: ${self:custom.stage}
    functions:
      package-update:
        name: ${self:custom.app}-${self:custom.service_acronym}-package-update
        handler: functions/package_update.lambda_handler
        role: PackageUpdateMetadata
        runtime: python3.6
        memorySize: 128
        reservedConcurrency: 10
        environment:
          tablename: ${self:custom.tablename}
        tags:
          Name: ${self:custom.app}-${self:custom.service_acronym}-package-update
        layers:
          - arn:aws:lambda:${self:provider.region}:#{AWS::AccountId}:layer:custom-logging-${self:custom.stage}:${self:custom.logging-layer-version}
        events:
          - http:
              path: ${self:custom.api_ver}/package
              method: put
              private: true
              integration: lambda

service-a-module-2 has to be deployed in the same region as service-a-module-1, because CloudFormation exports can only be imported by stacks in the same region.
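Because the import names are plain strings, a typo or a differing variable value in the second module only shows up at deploy time. The Python sketch below shows one way to compare the two sets of names beforehand. It is an illustration under assumptions: the values for stage, app and service are hypothetical stand-ins for custom.stage, custom.app and custom.service_acronym, and the helper functions are not part of any tool.

```python
def export_name(stage: str, app: str, service: str, suffix: str) -> str:
    """Build an export name following the pattern used in the templates above."""
    return f"{stage}-{app}-{service}-{suffix}"


def missing_imports(exported: set[str], imported: set[str]) -> set[str]:
    """Names the importing stack expects that the exporting stack does not provide."""
    return imported - exported


# Hypothetical values; both modules must resolve these variables identically.
stage, app, service = "dev", "myapp", "svc"
suffixes = ("restapi-id", "root-id", "path")

module_1_exports = {export_name(stage, app, service, s) for s in suffixes}
module_2_imports = {export_name(stage, app, service, s) for s in suffixes}

print(missing_imports(module_1_exports, module_2_imports))
# set()

# A single mistyped suffix is caught before deployment:
typo_imports = {export_name(stage, app, service, "rootid")}
print(missing_imports(module_1_exports, typo_imports))
# {'dev-myapp-svc-rootid'}
```

Note that the names in both files are built from custom.service_acronym, so the second module has to resolve that variable to the same value as the first one for the imports to match.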

As you may have noticed, service-a-module-1 has a function ready to be invoked via the path:

    events:
      - http:
          path: ${self:custom.api_ver}/tenant/{id}

Whereas service-a-module-2 has a path defined within a different serverless.yml file:

    events:
      - http:
          path: ${self:custom.api_ver}/package
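With the shared REST API in place, both paths end up under the same host. The sketch below composes the default execute-api URLs in Python; the API ID, region and stage are taken from the example endpoint mentioned earlier, and v1 is assumed to be the value of custom.api_ver.

```python
def invoke_url(rest_api_id: str, region: str, stage: str, path: str) -> str:
    """Compose the default execute-api URL for a path on a REST API stage."""
    host = f"{rest_api_id}.execute-api.{region}.amazonaws.com"
    return f"https://{host}/{stage}/{path.lstrip('/')}"


# Values from the example endpoint earlier in the article.
API_ID, REGION, STAGE = "b6xm330b00", "eu-west-1", "dev"
API_VER = "v1"  # assumed value of custom.api_ver

module_1_url = invoke_url(API_ID, REGION, STAGE, f"{API_VER}/tenant/{{id}}")
module_2_url = invoke_url(API_ID, REGION, STAGE, f"{API_VER}/package")

print(module_1_url)
# https://b6xm330b00.execute-api.eu-west-1.amazonaws.com/dev/v1/tenant/{id}
print(module_2_url)
# https://b6xm330b00.execute-api.eu-west-1.amazonaws.com/dev/v1/package
```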

Which URL versioning scheme you ultimately choose, if any, is of course up to you. We went with versioned URLs for their practical flexibility in the long term, and our advice would be for you to do the same.

Lessons learned

- CloudFormation stack limits may be inconvenient during “serverless” project development. But if you anticipate the scale of resources per microservice, this shouldn’t bother you at all.

- Endpoint sharing and custom domain sharing can be implemented in the same way. I’ve described the former but the pattern is the same for the latter as well.

- There is one drawback: service-a-module-2 is dependent on the service-a-module-1 API Gateway resource.
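In practice, this dependency dictates the order of operations: the exporting stack has to exist before the importing one is deployed, and CloudFormation will not delete an export while another stack still imports it. A minimal Python sketch of deriving both orders, using the standard library's graphlib (Python 3.9+); the dependency map itself is written by hand here as an assumption.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Each stack maps to the set of stacks whose exports it imports.
dependencies = {
    "service-a-module-1": set(),
    "service-a-module-2": {"service-a-module-1"},
}

# Stacks with no unmet dependencies come first.
deploy_order = list(TopologicalSorter(dependencies).static_order())
# Removal has to happen in the opposite order.
remove_order = list(reversed(deploy_order))

print("deploy:", deploy_order)
# deploy: ['service-a-module-1', 'service-a-module-2']
print("remove:", remove_order)
# remove: ['service-a-module-2', 'service-a-module-1']
```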