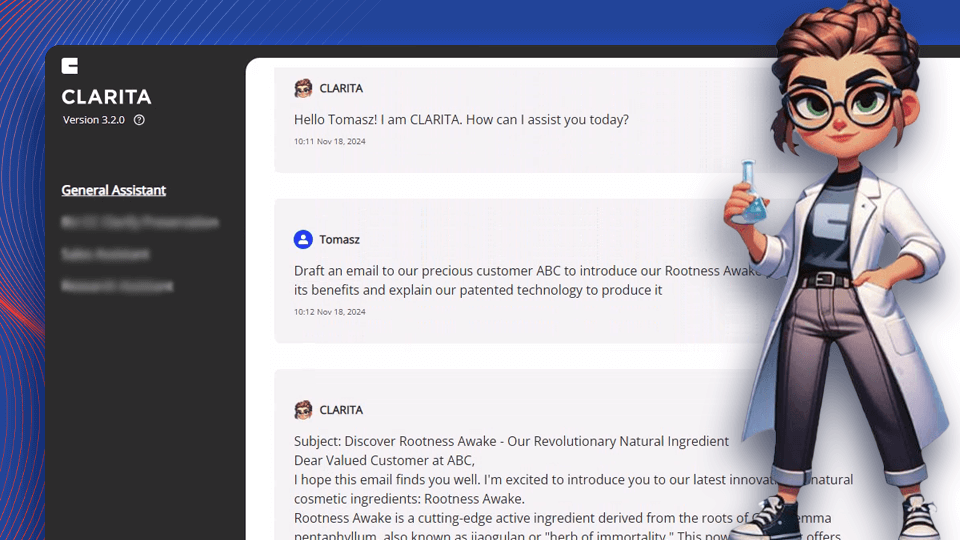

Meet Clarita, Clariant's generative AI built on AWS

Learn how Clariant, a global specialty chemicals leader, increased productivity with GenAI and help from Chaos Gears' AI experts.

Clariant is a global specialty chemicals company based in Switzerland that produces care chemicals, catalysts, and adsorbents and additives. After the public launch of generative AI tools in late 2022, Clariant saw an opportunity to use such chatbot capabilities to boost its in-house business research, innovation, and efficiency.

Working together with Amazon Web Services (AWS) and AWS Partner Chaos Gears, Clariant’s internal cloud architecture and engineering team developed a prototype generative AI platform and rolled it out to users across the business in less than 3 months. Clariant has additional proofs of concept in development for users in both R&D and sales.

Opportunity: The time was right to explore generative AI

With over 1,500 chemical products across two dozen industries, Clariant manages large amounts of data that could offer insights for researchers, salespeople, and others in the business. It also believed that a chatbot trained on its internal organizational data could be useful for its 10,000-plus employees.

“The initial idea we came up with was research and development, where we have a lot of scientific papers that we have to search through and analyze,” says Ariel Syrko, Head of Architecture at Clariant. “But there are plenty of other use cases, like for IT, where there is a lot of documentation for the help desk and user self-service.” Another motivation for the project, he adds, was to ensure that employees who wanted to use generative AI would have a secure sandbox based on Clariant’s internal data, rather than feeding proprietary, or even confidential, data into a public generative AI tool.

The timing for the project was also right because Clariant had completed a global migration of its IT infrastructure to AWS in 2022, setting up processes, standards, and a skilled internal team for cloud architecture and engineering. The migration gave Clariant new cloud capabilities, meaning that it could easily use the many AWS products and services related to machine learning (ML) and AI. To make the most of those capabilities, Clariant knew it would need support from a vendor with experience with both AWS and AI/ML, so it turned to Chaos Gears, which it had worked with on other projects over the previous couple of years.

“I knew they had good specialists in the area of cloud and machine learning. We had received very good support in different areas of engineering, and that’s how we ended up working with them on generative AI as well.”

Solution: GenAI built on a modular foundation

Working remotely with Clariant, Chaos Gears began the project by assessing what the company’s goals were and what cloud architecture standards it had worked out to that point. Clariant’s architecture and engineering team had already created a generative AI architecture framework and prototype for an AI chatbot, so Chaos Gears started building a platform that would serve as the foundation for that and for any other tools that Clariant decided to roll out later. The goal was to create a solid base that could be easily adapted to multiple generative AI applications using modular building blocks. Chaos Gears also trained Clariant’s team to further build their in-house generative AI skills.

“One of the biggest challenges was to review and, where necessary, enrich data sources that would be used to generate generative AI responses,” says Tomasz Dudek, Head of Data and AI, and Solutions Architect for Chaos Gears.

“There’s a lot of data and it varies in quality,” says Dudek. “You need to spend a lot of time filtering to improve and enrich its quality. Also, some companies have data from 10 years ago that’s no longer relevant. That’s the main challenge around data in literally every project.”

Rather than training a custom large language model (LLM) from the ground up, Clariant and Chaos Gears used retrieval-augmented generation (RAG) on Amazon Bedrock to build the solution. A managed service that offers a choice of high-performing foundation models (FMs), Amazon Bedrock lets companies customize responses to generative AI queries by using RAG to first search their databases for the most relevant information. It then uses the LLM to produce human-readable answers based on that information.

“You don’t expect the model to know your company, but you expect it to understand the language to know how to extract knowledge out of text,” says Dudek. “So instead of training a model, after a user asks a question, you find relevant documents in the database, provide that to the model, and tell the model: ‘This is the knowledge you need to have in order to answer this question.’”
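The retrieve-then-prompt flow Dudek describes can be illustrated with a minimal sketch. It is not Clariant's implementation: the sample documents, the word-overlap scoring, and the function names `retrieve` and `build_prompt` are all assumptions made for illustration, and the final call to a foundation model (for example, through Amazon Bedrock) is deliberately left out.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base; a real platform would query a search index.
DOCUMENTS = {
    "helpdesk-vpn": "To reset your VPN password, open the self-service portal and choose reset password.",
    "rd-catalysts": "Catalyst samples are tested for activity and selectivity before scale-up.",
    "sales-pricing": "Regional sales teams review pricing for additives every quarter.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, documents, top_k=2):
    """Return the ids of up to top_k documents that share words with the question."""
    query = Counter(tokenize(question))
    scored = [
        (cosine_similarity(query, Counter(tokenize(text))), doc_id)
        for doc_id, text in documents.items()
    ]
    scored.sort(reverse=True)
    return [doc_id for score, doc_id in scored[:top_k] if score > 0]


def build_prompt(question, documents, doc_ids):
    """Put the retrieved text in front of the question, as the quote describes."""
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return (
        "This is the knowledge you need to have in order to answer this question:\n"
        f"{context}\n\n"
        f"Question: {question}\n"
        "Answer using only the knowledge above."
    )


question = "How do I reset my VPN password?"
doc_ids = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, DOCUMENTS, doc_ids)
print(prompt)  # In a real system, this prompt would be sent to the foundation model.
```

The model never needs to be retrained when documents change; only the retrieval step sees the company data, which is the point of choosing RAG over a custom LLM.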

In less than 3 months, Clariant had a working generative AI platform in place. It then rolled out the first tool — an internal chatbot — to about 1,000 employees across the organization. Working with Chaos Gears and AWS, it continues to develop that tool, adding new features and functionalities. It’s also working on proofs of concept for other tools to support both the R&D and sales teams.

Outcome: A flexible platform for generative AI experiments

Clariant now has a flexible platform on which it can build new generative AI tools to use across different parts of the business. The platform is also secure — it can be used only within Clariant’s network, and every API it uses is on a separate AWS account, ensuring that datasets are fully isolated from one another.

Because of the platform’s modular architecture, Clariant can launch new projects for testing in less than 4 hours. The modular approach also makes it possible for Clariant to apply updates or refinements from one project to other generative AI projects in a single day. “We have building blocks so, when new use cases come on, we can reuse those elements,” Syrko says. “It’s not like we are building from scratch for new projects. That enables much faster delivery.”
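As a rough illustration of the building-block idea, the sketch below composes two hypothetical tools from a shared set of steps. The step functions, the `Pipeline` class, and the two example tools are assumptions made for this example and do not describe Clariant's actual components.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A building block takes the current state and returns an updated copy.
Step = Callable[[Dict[str, str]], Dict[str, str]]


def clean_text(state):
    """Collapse runs of whitespace in the input text."""
    return {**state, "text": " ".join(state["text"].split())}


def truncate(limit):
    """Build a step that keeps only the first `limit` characters."""
    def step(state):
        return {**state, "text": state["text"][:limit]}
    return step


def add_instruction(instruction):
    """Build a step that turns the text into a prompt for a given task."""
    def step(state):
        return {**state, "prompt": f"{instruction}\n\n{state['text']}"}
    return step


@dataclass
class Pipeline:
    """A named tool assembled from reusable steps."""
    name: str
    steps: List[Step] = field(default_factory=list)

    def run(self, text):
        state = {"text": text}
        for step in self.steps:
            state = step(state)
        return state


# Two hypothetical tools that share the same clean_text block.
summarizer = Pipeline(
    "summarizer",
    [clean_text, truncate(2000), add_instruction("Summarize the following document.")],
)
email_writer = Pipeline(
    "email-writer",
    [clean_text, add_instruction("Draft a polite email based on these notes.")],
)

result = summarizer.run("  Quarterly   results\nwere strong.  ")
print(result["prompt"])
```

Because both tools reuse `clean_text`, an improvement to that one step reaches every pipeline that includes it, which mirrors how a refinement in one project can be applied to others.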

So far, responses to the company chatbot have been 85 percent positive, with users describing it as “helpful.” Syrko says it’s been interesting to watch as employees have refined their queries so that they receive better, more relevant results. Clariant is continually fine-tuning the chatbot based on user feedback to further improve both the results and the user experience. Syrko adds that people are finding the tool especially useful for tasks like writing emails, summarizing documents, and creating meeting minutes.

Clariant is also pleased with the expert support it has received from both Chaos Gears and AWS.

“To be honest, the speed and agility with which Clariant, AWS, and Chaos Gears could deliver the solution together exceeded our expectations. I’m happy with the results and I foresee this as just the beginning of the journey.”

Core tech

Amazon Bedrock

Amazon SageMaker

Amazon Textract

Amazon AppFlow

AWS Step Functions

AWS Lambda

Amazon EC2

Amazon API Gateway

Elastic Load Balancing

Amazon OpenSearch Service

Amazon Redshift

Amazon DynamoDB

Amazon S3